DeepSeek's Hidden Features Professional Developers Love

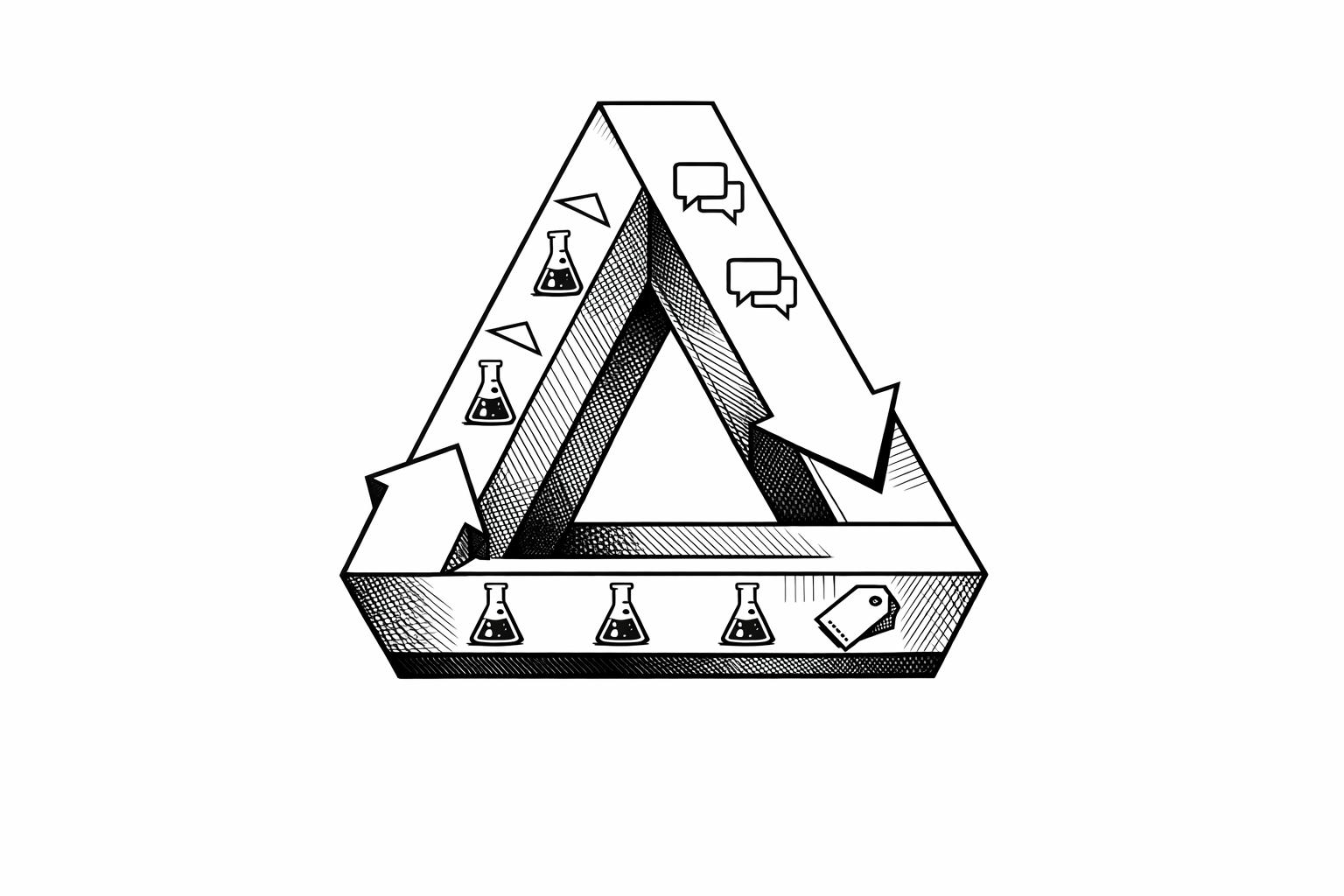

DeepSeek is an open-source AI coding assistant that’s customizable, cost-effective, and packed with tools to boost productivity. Here’s what makes it stand out:

- Advanced Reasoning: Uses reinforcement learning to improve decision-making, cutting repetitive tasks and saving up to 40% of development time.

- Rule-Based Rewards: Fine-tunes AI behavior with clear rules, reducing the need for human feedback and simplifying debugging.

- Model Distillation: Shrinks large models into smaller ones without losing performance, cutting inference costs by up to 99%.

- Emergent Behavior Networks: Handles tasks like fraud detection and customer service by identifying patterns without explicit programming.

- Offline Mode: Works entirely on local hardware, ensuring privacy and security for sensitive projects in industries like healthcare and finance.

Quick Comparison

| Feature | Key Benefit | Best Use Case | Cost Efficiency |
|---|---|---|---|
| Advanced Reasoning | Saves time on repetitive tasks | Debugging, code reviews | Moderate |
| Rule-Based Rewards | Reduces need for human feedback | Fine-tuning AI models | High |
| Model Distillation | Cuts costs while retaining accuracy | Resource-constrained deployments | Very High |
| Emergent Behavior Networks | Identifies patterns automatically | Fraud detection, recommendation systems | Moderate |
| Offline Mode | Ensures privacy and local control | Sensitive data environments | High |

DeepSeek combines affordability with advanced features to streamline workflows, making it a strong choice for developers across industries. Whether you’re debugging or working on confidential projects, it’s designed to save time, cut costs, and protect your data.


1. Advanced Reasoning Through Reinforcement Learning

DeepSeek-R1 takes AI learning to a new level with its reinforcement learning approach, changing how the model learns and makes decisions. Unlike older methods that depend on pre-labeled datasets, this model improves through trial and error, refining its reasoning based on feedback. This process enables it to tackle complex development scenarios with greater precision.

A standout feature is its use of Group Relative Policy Optimization (GRPO), which scores each sampled answer against the other answers in its group instead of training a separate critic network. This reportedly reduces computational demands by 40% compared to critic-based methods, resulting in faster processing times and lower costs for development teams.
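To make the group-relative idea concrete, here is a minimal sketch of how GRPO-style advantages can be computed without a critic: each sampled answer's reward is normalized against the mean and standard deviation of its own group. The function name, the `eps` term, and the use of the population standard deviation are assumptions made for illustration, not DeepSeek's training code.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each sampled answer's reward against its own group."""
    baseline = mean(rewards)    # the group mean stands in for a critic's value estimate
    spread = pstdev(rewards)    # population standard deviation of the group (assumption)
    return [(r - baseline) / (spread + eps) for r in rewards]

# Four sampled answers to one prompt, scored 1.0 if correct and 0.0 otherwise.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Correct answers end up with positive advantages and incorrect ones with negative advantages, which is the signal the policy update uses. When every answer in a group earns the same reward, all advantages are zero and that group contributes no gradient.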

Practical Impact on Development Workflows

DeepSeek-R1’s reinforcement learning has shown measurable results on public benchmarks. For example, it scored 97.3% Pass@1 on MATH-500 and reached the 96.3rd percentile on Codeforces for coding tasks. Because the model refines its own reasoning strategies during training, it offers better problem-solving and more precise suggestions during code reviews and troubleshooting.

Professional developers have reported significant time savings when using DeepSeek-R1 for tasks like code reviews, architectural planning, and debugging. By learning from ongoing feedback, the model adapts to specific coding styles and project needs, cutting down on repetitive tasks and improving efficiency.

Efficiency in Resource Utilization

DeepSeek-R1 also stands out for its cost-effectiveness. Priced at $0.55 per million input tokens and $2.19 per million output tokens, it’s roughly 27 times cheaper than comparable proprietary models. Moreover, the reported training cost was just $12 million, a fraction of the $40+ million often required for similar models.
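As a quick worked example of the pricing above, the sketch below estimates the bill for a workload at the quoted per-million-token rates. The function name and the monthly token volumes are hypothetical; only the two prices come from the text.

```python
INPUT_PRICE_PER_M = 0.55    # USD per million input tokens (quoted above)
OUTPUT_PRICE_PER_M = 2.19   # USD per million output tokens (quoted above)

def request_cost(input_tokens, output_tokens):
    """Return the USD cost of a workload at the quoted per-million rates."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000

# Hypothetical monthly workload: 40M input tokens and 10M output tokens.
monthly = request_cost(40_000_000, 10_000_000)
print(f"${monthly:.2f}")  # prints $43.90
```

Output tokens cost about four times as much as input tokens, so long generated answers tend to dominate the bill more than long prompts do.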

Its 14-billion-parameter distilled version delivers exceptional performance, achieving 69.7% on AIME 2024 and 93.9% on MATH-500. This demonstrates that reinforcement learning can produce smaller, more efficient models that still deliver professional-grade results. These advancements mean that powerful AI tools can now operate on less expensive hardware without compromising quality.

Solutions to Common Development Challenges

DeepSeek-R1 effectively addresses several challenges developers face. The model adapts to the specific context of a codebase, making it particularly useful for managing large, intricate systems where traditional tools often fall short.

For teams focused on security, DeepSeek-R1’s reinforcement learning adjusts threat detection algorithms in real time, responding to new attack patterns. By evaluating the long-term effects of coding decisions, it offers solutions that improve maintainability and scalability - helping developers reduce debugging time and enhance overall code quality.

Since its launch in January 2025, DeepSeek-R1 has seen rapid adoption among developers. Over 500 derivative models were created within days, and it has been downloaded 2.5 million times on Hugging Face alone. This quick uptake highlights how well its reinforcement learning features meet the everyday needs of developers.

2. Rule-Based Reward Engineering

DeepSeek takes its reinforcement learning a step further with rule-based reward engineering, a method that fine-tunes AI behavior using predefined rules. Instead of relying on subjective human feedback, this approach evaluates AI outputs against objective criteria, ensuring consistent performance while cutting costs.

For example, DeepSeek-R1-Zero incorporates two types of rewards: accuracy rewards and format rewards. Accuracy rewards confirm that the model's responses are correct, such as solving math problems or passing coding tests. Format rewards, on the other hand, guarantee that responses follow a specific structure - like enclosing a thought process within <think> and </think> tags.
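The two reward types can be sketched as plain functions. This is an illustrative approximation only: the regular expression, the exact-match answer check, and the choice to simply add the two rewards are assumptions, not DeepSeek's published implementation.

```python
import re

# The whole response must be one <think> block followed by a non-empty answer.
THINK_PATTERN = re.compile(r"^<think>(.+?)</think>\s*(.+)$", re.DOTALL)

def format_reward(response):
    """1.0 if the reasoning is wrapped in <think>...</think> and an answer follows."""
    return 1.0 if THINK_PATTERN.match(response.strip()) else 0.0

def accuracy_reward(response, expected_answer):
    """1.0 if the text after </think> exactly equals the reference answer."""
    match = THINK_PATTERN.match(response.strip())
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == expected_answer else 0.0

def total_reward(response, expected_answer):
    """Sum of both rule-based rewards (equal weighting is an assumption)."""
    return accuracy_reward(response, expected_answer) + format_reward(response)

good = "<think>17 + 25 = 42</think> 42"
wrong = "<think>17 + 25 = 41</think> 41"
unformatted = "The answer is 42"
```

With a reference answer of `"42"`, `good` earns both rewards, `wrong` earns only the format reward, and `unformatted` earns nothing. Because every score comes from a deterministic rule, no human rater or learned reward model is involved.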

Practical Impact on Development Workflows

This method is a game-changer for developers fine-tuning AI models. Unlike systems that require vast amounts of human-labeled data, rule-based rewards rely on well-defined rules to capture nuanced outputs. This makes decisions more transparent and easier to explain, which is critical for accountability. Developers can quickly trace the reasoning behind the model’s choices, simplifying debugging and optimization.

The efficiency of this approach is evident in DeepSeek-R1-Zero’s performance. After just 8,000 training steps, it delivers results comparable to OpenAI-o1-0912. This streamlined process saves time and resources, making it a highly efficient tool for development workflows.

Efficiency in Resource Utilization

Rule-based reward systems shine when it comes to resource management. They significantly reduce the need for human feedback, saving both time and money. These systems can process large datasets quickly and consistently, cutting down the effort required for decision-making.

Another advantage is their flexibility. Developers can easily update rules or add new parameters without retraining the entire model. This adaptability allows teams to adjust to new requirements or safety standards with minimal disruption, making the system highly responsive to evolving needs.

Support for Privacy and Security

Beyond improving workflows, rule-based rewards also strengthen privacy and security. Transparent decision-making ensures that every output can be traced back to a specific rule, making it easier to identify and address potential vulnerabilities before they escalate.

OpenAI has also adopted this approach. In July 2024, it reported that Rule-Based Rewards have been part of its safety stack since the launch of GPT-4, including in the GPT-4o mini model.

"Our research shows that Rule-Based Rewards (RBRs) significantly enhance the safety of our AI systems, making them safer and more reliable for people and developers to use every day." – OpenAI

This level of transparency and traceability is a powerful tool for security teams, ensuring that AI systems remain robust and secure.

Solutions to Common Development Challenges

Rule-based reward engineering addresses several persistent challenges in AI development. By defining clear, objective rules, it minimizes reward hacking and ensures consistent behavior. This is especially valuable in environments where uniform and predictable decisions are critical.

Additionally, it helps balance helpfulness and safety. For instance, Rule-Based Rewards reduce the likelihood of the AI refusing safe requests while maintaining strong performance on key benchmarks. This ensures that AI systems remain both effective and secure, solving real-world problems without compromising reliability.

3. Model Distillation for Better Performance

DeepSeek uses model distillation to transfer knowledge from a large teacher model to a smaller student model, maintaining high performance while significantly reducing computational costs. This technique involves fine-tuning smaller models with reasoning data from their larger counterparts, ensuring that the compact versions retain most of the original capabilities.

In simple terms, the smaller model learns by mimicking the outputs and decision-making processes of the larger model. A great example of this is DeepSeek's R1 distilled models, launched in January 2025, which utilize Qwen and Llama architectures. For instance, DeepSeek‑R1‑Distill‑Qwen‑32B and DeepSeek‑R1‑Distill‑Llama‑70B deliver impressive results, scoring at least 70% Pass@1 on AIME 2024 and exceeding 94% on MATH‑500.
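The data-collection side of this kind of distillation can be sketched as follows: the teacher's outputs are gathered into prompt/completion records, which then serve as ordinary supervised fine-tuning data for the student. The helper names, the toy teacher, and the correctness filter are all hypothetical stand-ins; a real pipeline would call the large model and use its own quality checks.

```python
def build_distillation_set(prompts, teacher_generate, keep):
    """Collect teacher outputs as supervised fine-tuning records for a student."""
    records = []
    for prompt in prompts:
        completion = teacher_generate(prompt)   # teacher's reasoning trace plus answer
        if keep(prompt, completion):            # drop samples that fail the quality check
            records.append({"prompt": prompt, "completion": completion})
    return records

# Reference answers used by the toy quality check (illustration only).
REFERENCE = {"2 + 2": "4", "3 * 3": "9"}

def toy_teacher(prompt):
    """Stand-in for the large model; deliberately gets the second prompt wrong."""
    outputs = {
        "2 + 2": "<think>2 + 2 = 4</think> 4",
        "3 * 3": "<think>3 * 3 = 6</think> 6",
    }
    return outputs[prompt]

def answer_is_correct(prompt, completion):
    """Keep a sample only if the text after </think> matches the reference."""
    return completion.split("</think>")[-1].strip() == REFERENCE[prompt]

dataset = build_distillation_set(list(REFERENCE), toy_teacher, answer_is_correct)
```

Here only the correct `2 + 2` trace survives the filter. The student never needs the teacher's weights or original training data, only the resulting records.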

"Model distillation in machine learning (ML) is a technique used to transfer knowledge from a large, complex model (often called the teacher model) to a smaller, simpler model (called the student model)." - Mehul Gupta, Author

Practical Impact on Development Workflows

This approach allows developers to use advanced reasoning capabilities in smaller models, enabling deployment on less expensive hardware without sacrificing performance. Distilled models not only achieve strong benchmark scores but often surpass larger non-reasoning models. For example, DeepSeek‑R1‑Distill‑Qwen‑1.5B shows how targeted this optimization is: it scores 83.9% on MATH‑500 but only 16.9% on LiveCodeBench, so it is far stronger at math than at coding.

Efficiency in Resource Utilization

DeepSeek's distilled models are designed to retain 90–95% of their teacher models' capabilities while slashing inference costs to less than 1% of the original. They also improve inference speeds by up to 40%, enhancing user experience and reducing operational expenses. Models like DeepSeek‑R1‑Distill‑Qwen‑32B demonstrate how distillation can outperform non-distilled versions in reasoning benchmarks while using far less computational power. These advancements not only lower costs but also open the door to enhanced privacy measures, as discussed next.

Support for Privacy and Security

The efficiency gains from distillation also address privacy concerns. Together AI ensures that DeepSeek‑R1 distilled models prioritize privacy, offering full opt-out controls to prevent sensitive data from being shared with DeepSeek. Unlike DeepSeek's own API, these models are hosted in Together AI's data centers, allowing organizations to experiment safely with both the main DeepSeek‑R1 and its distilled versions. Additionally, these models are fine-tuned on 800,000 examples from the main DeepSeek‑R1 model, enabling effective knowledge transfer without requiring ongoing access to the original training data.

Solutions to Common Development Challenges

Model distillation bridges the gap between performance and resource limitations, simplifying development workflows and addressing real-world challenges. Traditionally, developers had to choose between powerful but expensive models and more affordable options with limited capabilities. DeepSeek's distilled models provide consistent, reliable performance across various hardware setups, making them ideal for practical applications. Together AI enhances this flexibility with multiple distilled variants, such as DeepSeek‑R1 Distilled Llama 70B, which outperforms GPT‑4o with 94.5% accuracy on MATH‑500 and matches o1‑mini on coding benchmarks. Similarly, DeepSeek‑R1 Distilled Qwen 1.5B, a compact 1.5B-parameter model, excels in math tasks while maintaining high efficiency.


4. Emergent Behavior Networks

Building on advancements in reinforcement learning and model distillation, DeepSeek's emergent behavior networks are reshaping development workflows in ways that go beyond traditional AI methods.

Emergent Behavior Networks are among DeepSeek's most advanced tools. These systems demonstrate capabilities that weren’t explicitly programmed. Instead, when trained on large and diverse datasets, they begin to generalize, often exhibiting new behaviors that enhance development outcomes. This ability places these networks a step ahead of conventional AI systems.

Unlike traditional AI that sticks to predefined rules, DeepSeek’s emergent behavior networks create complex patterns through simple interactions. This opens up possibilities in areas like customer service automation, fraud detection, and recommendation systems, where adaptability and creativity are key.

Practical Impact on Development Workflows

Emergent behavior networks have shown their value in real-world scenarios where rule-based systems fall short. For instance, customer service chatbots powered by DeepSeek have started handling complex queries by synthesizing information in ways that weren’t pre-programmed. This adaptability has led to noticeable gains in customer satisfaction. These chatbots adjust to various customer needs without relying on scripted responses.

In fraud detection, these networks have uncovered patterns that traditional methods often miss. By analyzing vast datasets, they identify subtle correlations and behaviors that point to fraudulent activity. However, to make the most of these capabilities, it’s essential to implement strong monitoring systems and explainability tools. Monitoring catches anomalies early, and explainability tools clarify how decisions are made.

Efficiency in Resource Utilization

DeepSeek’s emergent behavior networks also deliver significant resource savings. Techniques like parameter reduction, quantization, and pruning make these systems highly efficient. These processes not only reduce overfitting but also enhance generalization while cutting down on computational demands.

For example, a major bank reduced inference time by 73% by applying quantization and pruning techniques. Similarly, an e-commerce platform slashed resource consumption by 40% using the same methods. Quantization alone can shrink model sizes by 75% or more, and pruning can eliminate 30–50% of parameters without affecting performance.
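To show what these two techniques do to a weight vector, here is a minimal pure-Python sketch of magnitude pruning followed by symmetric 8-bit quantization. The weights, the 40% pruning fraction, and the function names are made up for illustration; production toolchains work per layer or per channel and usually fine-tune afterwards.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    n_drop = int(len(weights) * fraction)
    by_size = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_size[:n_drop])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0   # fall back to 1.0 if all zero
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the 8-bit integers."""
    return [q * scale for q in quantized]

# Hypothetical weights for one small layer.
weights = [0.82, -0.05, 0.31, -1.27, 0.02, 0.64, -0.11, 0.47, 0.09, -0.73]
pruned = prune_by_magnitude(weights, 0.4)    # the 4 smallest weights become 0.0
quantized, scale = quantize_int8(pruned)
restored = dequantize(quantized, scale)
```

Storing each weight as one byte instead of a 4-byte float32 is where the 75% size reduction comes from, and each restored value differs from the pruned one by at most half a quantization step (`scale / 2`).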

Solutions to Common Development Challenges

Emergent behavior networks address key challenges like scalability and adaptability. By promoting self-organization, they enable complex behaviors to emerge from simple rules without centralized control. In distributed systems, such as social networks, these networks take advantage of the "small world" property, where the average shortest path between nodes grows logarithmically with network size - making them ideal for large-scale applications.

These networks thrive in dynamic environments. For example, recommendation engines can identify new product trends or user segments as data evolves, without requiring manual updates or reconfigurations. This ongoing adaptability ensures systems remain relevant as conditions change.

To fully unlock these benefits, developers need to set clear boundaries for AI behavior, ensuring that while beneficial emergence is encouraged, undesirable actions are prevented. Rigorous testing across diverse scenarios during development also helps maintain control and effectiveness. In critical business settings, combining AI insights with human oversight ensures ethical considerations are upheld while delivering innovative solutions. These dynamic capabilities solidify DeepSeek’s role in turning complex challenges into manageable solutions.

5. Offline Mode and Privacy Controls

DeepSeek's offline mode brings a new level of control and security to professional AI development. Once downloaded, the system operates entirely on local hardware, making it a perfect fit for industries like government, finance, and healthcare that require air-gapped environments for sensitive tasks.

"As developers, we need tools that respect our privacy, work offline, and adapt to our hardware constraints. DeepSeek R1 delivers all three while being surprisingly accessible." - JOEL EMOH, Full-Stack Developer

Practical Impact on Development Workflows

By eliminating the need for constant internet access, offline mode allows developers to work on confidential projects without compromising sensitive data. Whether in air-gapped environments or remote locations, this feature ensures uninterrupted productivity. With offline response times ranging from 50–200ms and a processing speed of 25–30 tokens per second on mid-range hardware, DeepSeek outpaces cloud-based models, which typically deliver response times of 100–500ms.
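The latency and throughput figures measure different things: the first is how quickly a response starts, the second is how long a full answer takes to stream. A small worked example, assuming a hypothetical 600-token answer and a steady decoding rate, shows what 25–30 tokens per second means in practice.

```python
def generation_seconds(output_tokens, tokens_per_second):
    """Time to stream a full response at a steady decoding rate."""
    return output_tokens / tokens_per_second

# A hypothetical 600-token code review comment at the quoted 25-30 tokens/s.
slowest = generation_seconds(600, 25)   # 24.0 seconds
fastest = generation_seconds(600, 30)   # 20.0 seconds
```

So even with a fast first response, a long answer on mid-range hardware still takes tens of seconds to complete, which is worth factoring in when sizing local deployments.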

Support for Privacy and Security

DeepSeek's offline capabilities prioritize privacy by keeping all data on the local machine. This approach not only meets stringent compliance standards in sectors like healthcare and finance but also incorporates hardware-level encryption and detailed access controls to safeguard sensitive information.

| Aspect | Cloud-Based Models | DeepSeek R1 Offline |
|---|---|---|
| Data Transmission | External Servers | Local Machine Only |
| Privacy Risk | High | Minimal |
| Compliance Flexibility | Limited | Highly Adaptable |
| Network Dependency | Required | Not Necessary |

This local-first approach significantly reduces privacy risks while enhancing operational security.

Efficiency in Resource Utilization

Running AI models offline offers clear advantages in managing resources and cutting costs. Organizations can save on cloud expenses while optimizing local computational power. Moreover, offline deployment allows for greater control over model customization and fine-tuning, enabling teams to seamlessly integrate DeepSeek into their existing workflows.

"Switching to DeepSeek R1 offline was a game-changer. The performance is smooth, and I no longer worry about internet connectivity or data privacy." - Alex T., Senior Developer

These resource and privacy benefits make offline mode an ideal solution for addressing common challenges in AI development.

Solutions to Common Development Challenges

DeepSeek's offline mode directly tackles hurdles faced by professionals in sensitive fields. For example, medical researchers can process patient data securely, and financial analysts can perform complex calculations without risking exposure of proprietary information. Distributed teams working in remote or restricted environments benefit from consistent AI support. Additionally, local processing simplifies the implementation of custom security measures tailored to specific regulatory needs.

"Local processing now reduces complex tasks from minutes to seconds." - Maria K., AI Researcher

Released under the MIT License, DeepSeek R1 permits commercial use, making it a secure and efficient choice for modern development workflows.

Feature Comparison Table

This table provides a concise overview of DeepSeek's key features, helping developers weigh their options based on resource use, performance, privacy, and ideal applications. By understanding these trade-offs, teams can make smarter decisions about which features align best with their project goals.

Performance metrics highlight notable differences in resource demands and efficiency. For instance, model distillation achieves a remarkable balance by retaining 90–95% of its teacher model's capabilities while slashing inference costs by up to 99%. This makes it a top choice for cost-sensitive deployments.

Reinforcement learning, on the other hand, excels in complex decision-making tasks but comes with higher computational costs. Its trial-and-error approach requires extensive interaction data, making it resource-intensive but invaluable for optimizing long-term outcomes.

Offline mode focuses on local processing, removing ongoing cloud resource usage. It also provides robust privacy protection, making it ideal for industries like healthcare and finance, where data sensitivity is paramount. While it doesn’t aim to optimize performance retention, its ability to function without constant connectivity or cloud dependencies makes it a standout for secure environments.

| Feature | Resource Requirements | Performance Retention | Privacy Level | Best Use Case |
|---|---|---|---|---|
| Offline Mode | Low (storage-focused) | N/A | High (local processing) | Sensitive data environments |
| Model Distillation | Moderate to High | 90–95% of teacher model | Moderate | Cost-efficient deployment |
| Reinforcement Learning | High (training intensive) | Variable by algorithm | Low to Moderate | Complex decision-making |

Scalability is another important factor. Model distillation shines here, enabling deployment on edge devices with minimal performance loss. This makes it particularly attractive for mobile or resource-constrained environments.

Cost and implementation complexity further differentiate these features. With global AI spending projected to hit $98 billion, selecting the right combination of features is critical for staying within budget. Offline mode minimizes long-term costs by eliminating cloud dependencies, while model distillation reduces computational expenses during deployment. Reinforcement learning, while requiring a higher upfront investment, can yield significant benefits in autonomous systems and decision-heavy applications.

From an implementation standpoint, offline mode is relatively straightforward, requiring a one-time setup and minimal maintenance. Model distillation involves moderate complexity during training but simplifies deployment. Reinforcement learning, however, is the most complex, as it demands defining reward functions and managing environmental interactions.

For organizations prioritizing data security and compliance, offline mode offers unmatched advantages. Its local processing capabilities minimize external data transmission risks, making it particularly appealing for sectors like government, healthcare, and finance.

When it comes to performance optimization, the choice depends on project needs. Teams working on mobile or edge deployments will find model distillation highly effective due to its resource efficiency. Meanwhile, projects requiring real-time decision-making can benefit from the adaptability of reinforcement learning. Offline mode, by contrast, ensures stable performance regardless of connectivity.

Conclusion

DeepSeek brings a fresh perspective to AI-assisted development with its standout features like advanced reasoning, rule-based rewards, model distillation, and offline capabilities. These tools don’t just enhance productivity - they also help cut costs, making it a strong contender against traditional AI solutions. Its open-source design allows for full customization, while the offline mode ensures robust privacy for sensitive data, catering to industries like healthcare, finance, and government.

One of DeepSeek's key strengths lies in its ability to process data locally, eliminating the need for recurring subscription fees and bolstering privacy. For developers working with sensitive information, this feature is a game-changer. Additionally, its continuous refinement through real-time user feedback ensures that the platform evolves to meet the needs of its users, creating a smoother and more efficient development experience.

To get started, identify your specific goals - whether debugging, data analysis, or algorithm optimization. Explore DeepSeek’s specialized features and integrate them into your workflow to fully capitalize on its potential. Its open-source framework empowers you to customize models to fit your exact requirements, making it a versatile tool for diverse projects.

As the DeepSeek community grows, it opens doors to new insights and opportunities for collaboration. From its sophisticated reasoning capabilities to its secure offline processing, every feature is designed to streamline workflows and improve cost efficiency. DeepSeek isn't just another tool in the developer's kit - it's a strategic advantage ready to elevate your next project.

FAQs

What makes DeepSeek's reinforcement learning unique compared to traditional AI methods, and how does it benefit developers?

DeepSeek's reinforcement learning (RL) approach takes a unique route by enabling the AI to learn through direct interaction with its surroundings. Instead of depending entirely on pre-labeled datasets, the model evolves through trial and error. A reward system encourages correct actions while guiding adjustments when errors occur. Over time, this iterative learning sharpens the AI's decision-making abilities.

For developers, this translates into an AI that excels at tackling complex challenges, adapting to new tasks, and providing outputs that are easier to understand and trust. By continuously refining itself, DeepSeek empowers developers to handle projects with greater efficiency and confidence.

What are the benefits of using DeepSeek's offline mode for sensitive industries like healthcare and finance?

DeepSeek's offline mode prioritizes data protection by keeping sensitive information confined to your local environment. This approach is particularly crucial for sectors like healthcare and finance, where strict privacy regulations require rigorous compliance.

Operating offline minimizes the chances of data breaches, gives you complete control over your information, and helps align with industry-specific regulations. This ensures that sensitive data remains secure at every stage of your workflow.

How does DeepSeek use model distillation to balance high performance with lower computational costs, and why is this important for limited-resource environments?

DeepSeek uses model distillation to achieve strong performance while cutting down on computational requirements. In simple terms, this method transfers what’s learned from larger, more complex models into smaller, streamlined ones. The result? These smaller models can still perform accurately and make sound decisions.

This technique proves particularly useful in settings where resources are limited - think edge devices or low-power systems. With restricted computing power and the need for energy efficiency, DeepSeek ensures AI applications can operate effectively. This makes advanced technology more practical and affordable for a variety of applications.