The Main Mistakes Students Make When Doing Homework with the Help of AI

It’s hardly a secret that AI technologies have quickly made their way into schools, study groups, and even late-night homework marathons.

Students can now use tools like grammar checkers, essay generators, or even an AI solver or helper to complete assignments more efficiently.

Some even turn to websites where they can pay someone to do their homework when deadlines pile up. It sounds like a win at first, but the truth is more complicated.

As someone deeply interested in AI literacy, I have seen both the remarkable things these technologies can do and the harm they can cause when people use them without understanding their limits.

People often forget that using AI without a plan can hinder a student's learning more than it helps.

That isn't because AI is "bad" in and of itself, but because many of us haven't yet learned to use it wisely or made doing so a habit.

That's what this article is about. We're going to look at the most common mistakes students make when they use AI to help with their assignments.

Some of them are simple mistakes, while others are subtler habits that erode critical thinking over time.

The goal isn't to scare anyone away from using AI; it's to show better ways to use it responsibly.

Part 1: Relying Too Much on AI

1. Not Enough Critical Thinking

One of the first warning signs I notice when students use AI for homework is that their ability to think critically slowly erodes.

It's subtle at first. Maybe you use ChatGPT to brainstorm a few topics for a short essay, or you ask an AI tool to solve a math problem and copy the steps without actually working through them.

But over time, that convenience becomes a crutch.

Critical thinking isn't just a school buzzword; it's the ability to examine material, question what you think you know, and form your own conclusions.

If students start accepting whatever AI produces at face value, they stop practicing this essential skill.

Think of a history assignment that asks you to analyze primary sources.

AI might give you a tidy summary, but it won't teach you to evaluate evidence, consider other points of view, or find new connections between events.

These are the mental reps that build real understanding, and they don't come from copying and pasting.

2. Not Fully Understanding the Material

Another common problem is the illusion of understanding.

When AI gives a solution fast and with confidence, it's easy to think that the problem has been "solved."

But knowing the answer isn't the same as understanding why it's correct.

Take a biology problem, for example.

If a student asks an AI tool a question about photosynthesis, it can probably answer in a few seconds.

But if the student doesn't engage with the explanation, they are less likely to remember it or apply it in a different context, like a lab practical or a conceptual exam.

AI tools don't automatically make you smarter.

If you don't take the time to reflect on the material, you can easily mistake familiarity for mastery.

What is the long-term risk? Falling behind when genuine understanding is put to the test.

Part 2: Using AI in the Wrong Way

1. Concerns About Plagiarism and Ethics

Let's get one thing straight: it's not wrong to use AI to help you with your schoolwork.

But if you submit AI-generated content as your own work without revising it or acknowledging it, you could face serious academic consequences.

Many students don't realize that copying an AI's answer word for word can be treated as plagiarism.

Citation and authorship rules still apply even when an AI wrote the text.

Some colleges have already updated their policies to make clear that this kind of behavior is not acceptable.

And let's be honest: if you didn't write it, should you really get a grade for it?

There is also a broader ethical question: are you learning anything at all if you let AI come up with your ideas or write for you?

Homework isn't just something to turn in; it's how you develop your voice, your thinking, and your ability to communicate clearly.

When students skip that part, the people they cheat most are themselves.

And while AI shouldn't replace real learning, it's perfectly sensible to take advantage of real-world support, such as scholarships and grants for Nigerian students in 2025, that can ease financial stress and help you focus on your education.

2. Using AI to Fix Things Quickly

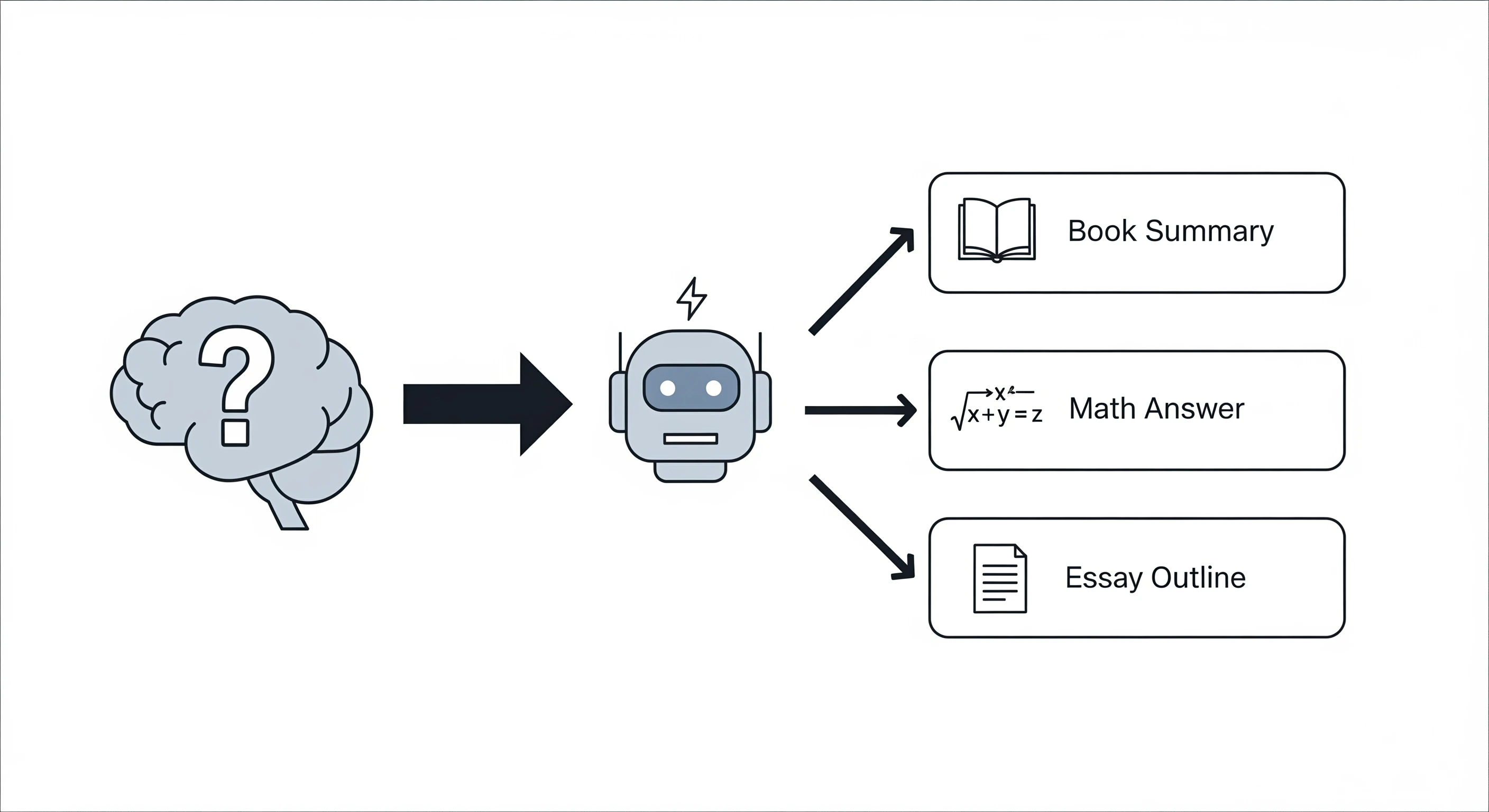

Another temptation is to use AI tools as a "quick fix."

Didn't read the book? Get a summary from ChatGPT.

Don't want to do the math problem? Put it into Wolfram Alpha and copy the answer.

Want an outline for your essay in five minutes? Of course, an AI can do it for you.

But this mindset has consequences.

If you use AI as a shortcut instead of a way to learn more, you're setting yourself up for problems later.

You might get the assignment done, but what happens in a closed-book exam?

Or in a group discussion, when you realize you don't really understand the topic?

AI is not a cheat code; it's a tool.

If you use it to avoid learning, it won't be there to help you when things get tough.

Part 3: Not Following Directions and Rules

1. Not Making AI Output Fit the Assignment

I've seen this happen over and over again: students ask AI to "write an essay" or "explain a topic," and then submit the result without checking whether it actually fits what the assignment asks for.

That will cost you marks, not because the AI's answer is wrong, but because it's off-topic.

Assignments usually come with specific requirements, such as a certain structure, citation style, or word count, or a prompt that asks you to reflect on or analyze a work.

AI doesn't take those requirements into account unless you spell them out explicitly, and even then it might not get them right.

A lecturer might, for instance, call for an argumentative essay using three peer-reviewed references in APA format.

You're not doing what the assignment says if you turn in a generic five-paragraph opinion paper made by AI.

That's not a problem with the technology; it's a mistake by the user.

In the end, AI isn't your teacher. It won't double-check the rubric for you. Meeting the requirements is still your responsibility.

2. Not Checking and Changing AI-Generated Content

Another typical mistake is to skip the editing phase completely.

Students often treat AI output as a finished product, but at best it's a first draft.

Language models, even the best ones, make mistakes: they get facts wrong, use awkward phrasing, or oversimplify complex ideas.

AI-generated text can also sound flat or robotic.

Professors notice when everyone in a class uses the same tool and every submission reads the same way. And believe me, they do notice.

That’s why it’s so important to add your own voice to the rewriting process.

Review what the AI gave you.

Does it reflect what you know? Does it sound like the way you write?

Does it answer the question correctly? If not, revise it. Reorganize it. Add depth.

Take ownership of it.

For students who want guidance in balancing AI-generated drafts with authentic academic work, Studybay can be a useful resource for learning how to refine, edit, and personalize content effectively.

Part 4: Long-Term Consequences

1. Less Interest in Learning Material

AI's convenience cuts both ways.

It's nice to get a quick answer or overview when you're stuck, but if you rely on AI too much, it might slowly make you less interested in the topic itself.

Retention declines when engagement drops.

Say you have a reading assignment for your literature class.

Instead of reading the text, even quickly, or working out the themes yourself, you ask an AI for a synopsis and the key points.

Yes, it does save time.

But you missed the chance to work through the text and see how the language works, how the characters develop, and how the story builds suspense.

That's where real learning takes place.

Also, if students rely on AI every time they run into a problem, they stop doing the things that help them learn: dealing with confusion, making mistakes, asking questions, and finding their own answers.

Those times are important. They change how we think, not just what we know.

2. Lack of Skills for Future Applications

Here's the bigger problem: relying too much on AI can hurt the abilities you'll need for your future job, not just your grades.

Critical thinking, creativity, communication, and decision-making aren't just things you learn in school; they are skills you need for life.

And those skills won't get stronger if you let AI do too much of the work.

It's like using a calculator for every arithmetic problem.

If you never learn how the operations actually work, you won't know what to do when the calculator isn't available or the problem doesn't fit the usual pattern.

The same is true for writing, solving problems, and even coming up with ideas.

If you continually rely on AI, you're not learning how to think in a way that helps you adapt, find solutions, and get things done in the real world.

So AI can be part of the process, but it should never replace your own thinking.

Final Thoughts

AI is changing how students do their homework, and it clearly has the potential to help them learn more.

But that promise comes with real responsibility.

When students use AI too much, misuse it to avoid work, neglect assignment directions, or stop working on their own growth, the tool becomes a trap instead of a help.

The most important thing to remember?

AI can't take the place of thinking, learning, or growing.

It's a supplement, and a strong one, but only if you use it on purpose.

If you're a student who uses AI for schoolwork, take a moment to think about how you're using it.

Are you still thinking critically? Are you revising the work and making it your own? Are you building the skills you need for life after school?

The students who will do best in this new world with AI will be the ones who know when to use technology and when to think for themselves.