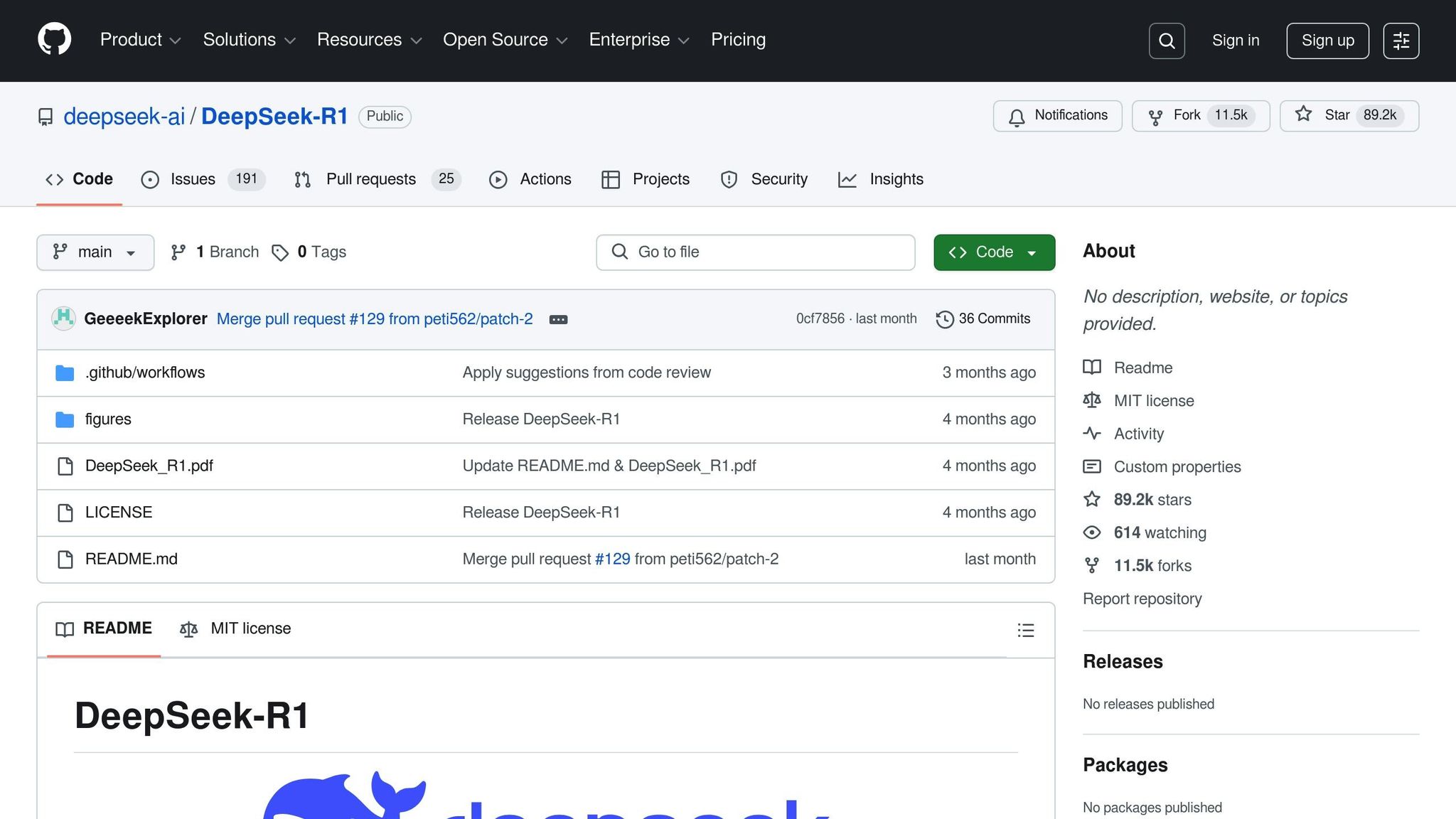

Run DeepSeek R1 Locally: Seven-Minute Setup

Want to run DeepSeek R1 on your computer? Here's how to set it up in just seven minutes. Running it locally offers key benefits:

- Data Privacy: Keep sensitive data on your system.

- Faster Processing: No internet delays.

- Offline Access: Operate without constant connectivity.

Key Requirements:

- Hardware: Match your model size (e.g., NVIDIA RTX 3060 for 1.5B).

- Software: Use Ubuntu 22.04 with CUDA, cuDNN, and Docker.

Quick Setup Steps:

1. Install Ollama:

curl -fsSL https://ollama.com/install.sh | sh

2. Download Models:

ollama pull deepseek-r1

3. Run Tests:

ollama run deepseek-r1 "2+2"

For larger models, ensure robust hardware like NVIDIA H100 GPUs. Optimize performance with quantization and batch processing. Follow these steps to enhance security, speed, and efficiency for your business.


1. System Requirements Check

Before diving into the DeepSeek R1 installation, it's crucial to ensure your hardware and software meet the necessary requirements. Here's what you need to know.

Required Hardware

The hardware specifications depend on the model size you plan to use. Below is a breakdown of the key requirements:

| Model Size | CPU Requirements | RAM | GPU (VRAM) | Storage |
|---|---|---|---|---|
| 1.5B (Basic) | Intel Core i7/AMD Ryzen 7 (8 cores) | 16GB (32GB ideal) | NVIDIA RTX 3060 (12GB) | 512GB NVMe SSD |
| 7B-8B (Standard) | Intel Xeon/AMD EPYC (16–24 cores) | 32GB (64GB ideal) | NVIDIA RTX 4090 or A5000 (24GB) | 1TB NVMe SSD |
| 14B-32B (Advanced) | Dual Intel Xeon Platinum/AMD EPYC (32–64 cores) | 128GB (256GB ECC ideal) | NVIDIA A100 or H100 (40GB+) | 2TB NVMe SSD |

Additional Notes:

- Use NVMe PCIe Gen 4 SSDs to achieve the best performance.

- If you plan to run models on the CPU alone, aim for at least 48GB of RAM.

- For systems with lower VRAM, model quantization is necessary to reduce memory demands.
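On Linux, you can check the RAM guidance above before installing anything. Below is a minimal sketch that works on Linux only, because it parses /proc/meminfo. It applies this section's thresholds: 16GB for the 1.5B model and 48GB for CPU-only inference. The helper names are hypothetical. The 5% tolerance is also an assumption: MemTotal reads slightly below installed RAM because the kernel reserves some memory.

```python
def mem_total_gib(meminfo_text: str) -> float:
    """Return MemTotal from /proc/meminfo text, converted from KiB to GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            kib = int(line.split()[1])  # line looks like "MemTotal:  65536000 kB"
            return kib / (1024 ** 2)
    raise ValueError("MemTotal line not found")


def ram_ok(total_gib: float, cpu_only: bool, tolerance: float = 0.05) -> bool:
    """Compare against this guide's numbers: 48GB for CPU-only use, otherwise 16GB."""
    required = 48 if cpu_only else 16
    # MemTotal excludes kernel-reserved memory, so accept a small shortfall.
    return total_gib >= required * (1 - tolerance)
```

On the target machine, `ram_ok(mem_total_gib(open("/proc/meminfo").read()), cpu_only=True)` tells you whether CPU-only inference meets the 48GB guideline.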

Once your hardware is ready, move on to the software setup to make the most of your configuration.

Compatible Operating Systems

This guide targets Ubuntu 22.04. To prepare your system, install the following:

- CUDA and cuDNN for GPU acceleration.

- NVIDIA drivers (the recommended version is nvidia-driver-520).

- Docker with NVIDIA GPU support (the NVIDIA Container Toolkit) for containerized environments.

- Git for managing repositories.

- A Python virtual environment for dependency isolation.

Tip: Start with the 1.5B model to verify your setup before scaling up to larger models.

Advanced Model Requirements

If you plan to run the full-size models, such as the DeepSeek-R1-Zero variant, keep in mind their mixture-of-experts (MoE) architecture. They have 671 billion parameters in total, but only about 37 billion are activated for each token. All 671 billion parameters must still be held in memory, so these models demand extremely robust hardware, such as multiple NVIDIA H100 or A100 GPUs with at least 80GB of VRAM each. Make sure your infrastructure can handle this load before proceeding.
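A mixture-of-experts model must keep all of its experts loaded. Memory is therefore sized by the total 671 billion parameters, not by the 37 billion active per token. The arithmetic below is a rough sketch under two assumptions: it counts weights only (KV cache, activations, and runtime overhead come on top), and it assumes 80GB per GPU. The function names are illustrative.

```python
import math

TOTAL_PARAMS_B = 671   # total parameters, in billions
ACTIVE_PARAMS_B = 37   # parameters activated per token, in billions


def weight_memory_gb(params_b: float, bits_per_param: int) -> float:
    """Weights only: billions of parameters x bits per parameter / 8 bits per byte = GB."""
    return params_b * bits_per_param / 8


def gpus_for_weights(params_b: float, bits_per_param: int, vram_per_gpu_gb: float = 80) -> int:
    """Minimum number of GPUs whose combined VRAM holds the weights, with no headroom."""
    return math.ceil(weight_memory_gb(params_b, bits_per_param) / vram_per_gpu_gb)


for bits in (16, 8, 4):
    print(f"{bits}-bit: {weight_memory_gb(TOTAL_PARAMS_B, bits):.0f} GB of weights, "
          f"at least {gpus_for_weights(TOTAL_PARAMS_B, bits)} x 80GB GPUs")
print(f"Active fraction per token: {ACTIVE_PARAMS_B / TOTAL_PARAMS_B:.1%}")
```

Under these assumptions, 8-bit weights alone take about 671 GB, which already means at least nine 80GB GPUs before any headroom.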

2. Setting Up Ollama

Ollama simplifies the management of DeepSeek R1 by taking care of tasks like downloading models, quantization, and execution. This makes local deployment easier, even for those without technical expertise.

Installation Steps

To install Ollama on a Linux system, open your terminal and run the following command:

curl -fsSL https://ollama.com/install.sh | sh

Once the installation is complete, confirm everything is working by running the model (the first run also downloads it):

ollama run deepseek-r1

If you encounter any issues, ensure that essential system utilities like pciutils and lshw, the CUDA toolkit, and the latest GPU drivers are installed.

After this, you'll need to configure your GPU to meet the requirements of DeepSeek R1.

GPU Setup

Your GPU setup plays a critical role in determining the performance of DeepSeek R1. Here's a quick overview of the available model versions and their VRAM requirements:

| Model Version | VRAM Required | Quantized VRAM (4-bit) | Recommended GPU |
|---|---|---|---|
| Distill-Qwen-1.5B | 3.9 GB | 1 GB | NVIDIA RTX 3060 (12GB or higher) |
| Distill-Qwen-7B | 18 GB | 4.5 GB | NVIDIA RTX 4090 (24GB or higher) |
| Distill-Llama-8B | 21 GB | 5 GB | NVIDIA RTX 4090 (24GB or higher) |

Here are some tips for optimizing your GPU configuration:

- Model Selection: If your GPU has limited VRAM, start with the Distill-Qwen-1.5B model. It requires just 3.9 GB of VRAM in its standard form or 1 GB when quantized.

- Resource Management: To make larger models work on less powerful hardware, take advantage of 4-bit quantization. This reduces VRAM usage significantly.

- Performance Optimization: For the best performance, use high-end GPUs. If you're working with limited resources, the 7B model offers a good balance between performance and hardware requirements.

Note: While these models can technically run on GPUs with lower specifications than recommended, you may need additional adjustments, such as smaller batch sizes or different processing settings, to keep performance stable.
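The GPU table and tips reduce to a simple rule: pick the largest model whose VRAM requirement fits your card. If the full-precision version does not fit, fall back to 4-bit quantization. Below is a minimal sketch of that rule using the table's figures. The 1GB headroom for context and runtime overhead is an assumption. The labels are the table's model versions, not Ollama tags.

```python
# (model version, full-precision VRAM in GB, 4-bit VRAM in GB), taken from the GPU table,
# ordered from smallest to largest model.
MODELS = [
    ("Distill-Qwen-1.5B", 3.9, 1.0),
    ("Distill-Qwen-7B", 18.0, 4.5),
    ("Distill-Llama-8B", 21.0, 5.0),
]


def pick_model(vram_gb: float, headroom_gb: float = 1.0):
    """Return (model version, precision) for the largest model that fits, or None."""
    choice = None
    for name, full_gb, q4_gb in MODELS:
        if full_gb + headroom_gb <= vram_gb:
            choice = (name, "full precision")
        elif q4_gb + headroom_gb <= vram_gb:
            choice = (name, "4-bit")
    return choice
```

This sketch prefers a larger quantized model over a smaller full-precision one. For example, a 12GB card gets the 8B model at 4-bit. If you would rather avoid quantization, reverse that priority.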

3. Installing DeepSeek R1

Once you have Ollama installed and your GPU ready to go, follow these steps to install DeepSeek R1.

Model Download

Start by downloading the default model using:

ollama pull deepseek-r1

If you need a specific model size, use one of these commands:

ollama pull deepseek-r1:7b # Downloads the 7B parameter model

ollama pull deepseek-r1:1.5b # Downloads the lightweight 1.5B model

After downloading, launch the model with:

ollama run deepseek-r1
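Besides the interactive CLI, Ollama exposes an HTTP API (port 11434 by default), which is how scripts and other applications talk to the model. Below is a minimal standard-library sketch. It assumes the server is running locally and the model tag has already been pulled. The helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default listen address


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST /api/generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the generated text from the "response" field."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

With the server running, `print(generate("deepseek-r1:1.5b", "What is 2+2?"))` prints the model's answer.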

Once the model is running, it's time to organize your installation files.

File Management

Keep your installation files structured for easier access and maintenance. Here's a suggested setup:

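| Component | Location | Purpose |
|---|---|---|
| Model Files | ~/.ollama/models | Stores the model weights |
| Configuration | ~/.ollama/config | Holds system settings and preferences |
| Logs | ~/.ollama/logs | Tracks performance and debugging info |

Note: If you installed Ollama with the Linux install script, it runs as a systemd service. That service stores models under /usr/share/ollama/.ollama/models by default (the OLLAMA_MODELS environment variable changes this location). It writes its logs to the system journal, which you can read with journalctl -u ollama.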

For production environments, consider these best practices:

- Keep track of model versions along with their configurations.

- Regularly analyze logs to monitor performance.

- Update your installations to stay current.
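To keep track of model versions, you can snapshot what Ollama has installed. The GET /api/tags endpoint lists each local model with its name, size, and content digest. Below is a minimal sketch that turns that response into a sorted, diff-friendly manifest you could commit alongside your configuration. The manifest format is an assumption, not an Ollama feature.

```python
import json


def version_manifest(tags_response: dict) -> list:
    """Keep name, digest and size for each model in an /api/tags response, sorted by name."""
    entries = [
        {"name": m["name"], "digest": m["digest"], "size_bytes": m["size"]}
        for m in tags_response.get("models", [])
    ]
    return sorted(entries, key=lambda entry: entry["name"])


def manifest_json(tags_response: dict) -> str:
    """Serialize the manifest with a stable key order so version changes show up in diffs."""
    return json.dumps(version_manifest(tags_response), indent=2, sort_keys=True)
```

Feed it the parsed output of `curl -s http://localhost:11434/api/tags`. When a model is updated, its digest changes and the diff shows it.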

Verifying Your Setup

Confirm everything is working as expected. Use the following commands:

nvidia-smi # Checks if your GPU is detected

ollama serve # Starts the Ollama server for API access (not needed if the installer already runs Ollama as a service)

If you encounter GPU-related issues, you might need to install additional system dependencies. For Linux systems, run:

sudo apt install pciutils lshw
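Once the server is up, its root URL answers with the plain text "Ollama is running". That makes a convenient scripted health check, for example before starting a batch job. Below is a minimal sketch assuming the default address http://localhost:11434. The function name is illustrative.

```python
import urllib.request


def ollama_is_running(base_url: str = "http://localhost:11434", timeout: float = 3.0) -> bool:
    """Return True if an Ollama server answers on base_url's root endpoint."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            # The root endpoint replies with the plain-text body "Ollama is running".
            return response.status == 200 and b"Ollama is running" in response.read()
    except OSError:
        # Connection refused, timeouts and HTTP errors (URLError/HTTPError) are all OSError subclasses.
        return False
```

In a startup script, exit with a non-zero status when `ollama_is_running()` returns False.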


4. Testing and Fixing Issues

Basic Tests

Once you’ve installed DeepSeek R1, it’s time to run some basic tests to ensure everything is functioning as expected. Here are a few diagnostic commands to get started:

# Test model responsiveness

ollama run deepseek-r1 "What is 2+2?"

# Check model loading speed

time ollama run deepseek-r1 "Hello"

# Verify GPU utilization

nvidia-smi -l 1

Keep an eye on key metrics like response time, GPU memory usage, and how long it takes for the model to load.
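For numbers you can compare across runs, use the timing fields in the non-streaming response from Ollama's /api/generate endpoint. These are load_duration, total_duration, prompt_eval_count, prompt_eval_duration, eval_count, and eval_duration, with durations in nanoseconds. Below is a minimal sketch that converts them into load time and tokens per second. The function names are illustrative.

```python
NS_PER_S = 1_000_000_000  # Ollama reports durations in nanoseconds


def _per_second(count: int, duration_ns: int) -> float:
    # Guard against missing or zero fields so a partial response does not raise.
    return count * NS_PER_S / duration_ns if count and duration_ns else 0.0


def summarize_timings(resp: dict) -> dict:
    """Turn the timing fields of an /api/generate response into readable metrics."""
    return {
        "load_s": resp.get("load_duration", 0) / NS_PER_S,
        "total_s": resp.get("total_duration", 0) / NS_PER_S,
        "prompt_tokens_per_s": _per_second(resp.get("prompt_eval_count", 0), resp.get("prompt_eval_duration", 0)),
        "gen_tokens_per_s": _per_second(resp.get("eval_count", 0), resp.get("eval_duration", 0)),
    }
```

Comparing gen_tokens_per_s before and after a change (a different quantization or GPU) gives a clearer signal than wall-clock time from a single command.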

Common Problems and Solutions

If the tests highlight any problems, here are some common troubleshooting steps to help you pinpoint and resolve the issues.

Driver-Related Issues

Start by confirming your NVIDIA drivers are correctly installed and up to date:

nvidia-smi --query-gpu=driver_version --format=csv
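In a provisioning script, you may want to fail early when a driver is older than this guide's recommended nvidia-driver-520 branch. Below is a minimal sketch that parses the CSV output of the command above: a driver_version header line followed by one version per GPU. Treating 520 as the minimum simply follows this guide's recommendation. The helper names are illustrative.

```python
MIN_DRIVER = (520,)  # this guide's recommended nvidia-driver-520 branch


def parse_driver_versions(csv_text: str) -> list:
    """Parse `nvidia-smi --query-gpu=driver_version --format=csv` output into version tuples."""
    versions = []
    for line in csv_text.strip().splitlines():
        line = line.strip()
        if not line or line == "driver_version":  # skip blank lines and the CSV header
            continue
        versions.append(tuple(int(part) for part in line.split(".")))
    return versions


def drivers_ok(csv_text: str, minimum: tuple = MIN_DRIVER) -> bool:
    """True if at least one GPU was found and every GPU's driver is >= minimum."""
    versions = parse_driver_versions(csv_text)
    # Tuples compare element by element, so (535, 104, 5) >= (520,) is True.
    return bool(versions) and all(version >= minimum for version in versions)
```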

Memory Management

Memory-related errors can be tricky, so it’s good to stay ahead of them. Check your system’s available memory using these commands:

free -h

nvidia-smi -q -d MEMORY
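The output of nvidia-smi -q -d MEMORY is meant for humans. For scripts, nvidia-smi --query-gpu=memory.total,memory.used,memory.free --format=csv,noheader,nounits prints one comma-separated line per GPU, in MiB. Below is a minimal sketch that parses that output so you can compare free VRAM with the GPU table. Looking only at the single GPU with the most free memory is a simplifying assumption, and the helper names are illustrative.

```python
def parse_gpu_memory(csv_text: str) -> list:
    """Parse one 'total, used, free' line (MiB) per GPU into dictionaries."""
    gpus = []
    for index, line in enumerate(csv_text.strip().splitlines()):
        total, used, free = (int(value) for value in line.split(","))
        gpus.append({"gpu": index, "total_mib": total, "used_mib": used, "free_mib": free})
    return gpus


def max_free_gib(gpus: list) -> float:
    """Largest amount of free memory on any single GPU, in GiB (0.0 if no GPUs)."""
    return max((gpu["free_mib"] for gpu in gpus), default=0) / 1024
```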

Troubleshooting Guide

Here’s a quick guide to address common issues:

| Issue | Solution | Verification Step |
|---|---|---|
| Connection Errors | Check your network status and that the Ollama server is running | curl http://localhost:11434 |
| Model Verification Fails | Redownload the model files | ollama rm deepseek-r1, then ollama pull deepseek-r1 |

Additional Diagnostics

For more in-depth troubleshooting, use the following commands:

Network Connectivity

Check your network stability and firewall settings (internet access is only needed to download models; inference runs locally):

traceroute registry.ollama.ai

netstat -an | grep LISTEN

Resource Monitoring

Keep an eye on your system's resources and GPU temperature:

# Monitor system resources

top -n 1

nvidia-smi -q -d TEMPERATURE

Model Verification

Test the model’s ability to handle queries:

ollama run deepseek-r1 "Explain quantum computing"

Handling Model Response Issues

If you notice a decline in the quality of the model’s responses:

- Be more specific with your instructions.

- Ensure you’re using the latest version of the model.

- Experiment with parameters like temperature and top_p to refine the output.
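Ollama's /api/generate endpoint accepts sampling settings, including temperature and top_p, in an options object. Below is a minimal sketch that builds one request payload per combination so you can compare answers to the same prompt side by side. The grid values are only starting points (DeepSeek's R1 model card suggests a temperature between 0.5 and 0.7). The function name is illustrative.

```python
import itertools


def sampling_payloads(model: str, prompt: str, temperatures, top_ps) -> list:
    """Build one non-streaming /api/generate payload per (temperature, top_p) pair."""
    return [
        {
            "model": model,
            "prompt": prompt,
            "stream": False,
            "options": {"temperature": temperature, "top_p": top_p},
        }
        for temperature, top_p in itertools.product(temperatures, top_ps)
    ]
```

POST each payload to http://localhost:11434/api/generate and keep the settings whose answers hold up best for your prompts.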

Addressing HTTP Request Problems

For issues related to HTTP requests:

- Use retry logic with exponential backoff to handle transient errors.

- Set a timeout value of 30 seconds for requests.

- Double-check the format of your input data before sending it.
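The retry and timeout advice can be sketched with the Python standard library. The sketch retries connection errors, timeouts, and 5xx responses with exponentially growing waits. It fails fast on 4xx responses, which usually mean a malformed request, and applies the suggested 30-second timeout. This is a minimal sketch; the function names, retry count, and one-second base delay are assumptions.

```python
import json
import time
import urllib.error
import urllib.request


def backoff_delays(retries: int, base: float = 1.0, cap: float = 30.0) -> list:
    """Exponential backoff schedule in seconds: base, 2*base, 4*base, ... capped at cap."""
    return [min(cap, base * 2 ** attempt) for attempt in range(retries)]


def post_json_with_retry(url: str, payload: dict, retries: int = 4,
                         timeout: float = 30.0, sleep=time.sleep) -> dict:
    """POST JSON and return the decoded reply, retrying transient failures with backoff."""
    body = json.dumps(payload).encode("utf-8")
    delays = backoff_delays(retries)
    for attempt in range(retries + 1):
        request = urllib.request.Request(
            url, data=body, headers={"Content-Type": "application/json"}, method="POST"
        )
        try:
            with urllib.request.urlopen(request, timeout=timeout) as response:
                return json.loads(response.read())
        except urllib.error.HTTPError as err:
            # 4xx means the request itself is wrong (e.g. malformed input): do not retry.
            if err.code < 500 or attempt == retries:
                raise
        except OSError:
            # URLError, connection resets and socket timeouts are all OSError subclasses.
            if attempt == retries:
                raise
        sleep(delays[attempt])
```

Against a local server, post the usual {"model": ..., "prompt": ..., "stream": False} payload to http://localhost:11434/api/generate. Long reasoning answers requested without streaming may exceed 30 seconds, so raise the timeout for those.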

These steps should help you maintain smooth operation and resolve any hiccups along the way.

5. Speed and Performance Tips

Once DeepSeek R1 is running smoothly, it's time to fine-tune its speed and performance. These advanced techniques can help streamline your workflow and improve overall efficiency.

Memory Usage Tips

Managing memory efficiently is crucial for getting the best performance out of DeepSeek R1. Given the model's size, proper memory allocation ensures smooth operation without overloading your hardware.

One effective method is quantization, which can shrink the model's memory requirements by up to 75%, usually with only a modest loss in output quality. GGUF, the quantized model format Ollama uses, makes this easy to apply. If you also fine-tune the model, mixed-precision training and gradient checkpointing can further reduce memory strain.
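The "up to 75%" figure is plain bits-per-weight arithmetic, assuming 16-bit weights quantized to 4 bits. A 7B model is used here only as an illustration:

$$
1 - \frac{4\ \text{bits}}{16\ \text{bits}} = 0.75
\qquad\text{e.g.}\qquad
7\times10^{9} \times 2\ \text{bytes} = 14\ \text{GB}
\;\rightarrow\;
7\times10^{9} \times 0.5\ \text{bytes} = 3.5\ \text{GB}
$$

Real quantized files end up somewhat larger, because quantization also stores per-block scale factors and some tensors are typically kept at higher precision.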

If you're looking to upgrade hardware, consider investing in DDR5 RAM, PCIe 4.0 NVMe SSDs, or high-performance GPUs. These components can significantly boost processing power and memory efficiency, especially for batch tasks.

Better memory management leads to smoother batch processing and overall faster performance.

Batch Processing Setup

Breaking tasks into smaller, manageable units allows for parallel processing, which maximizes resource usage and speeds up workflows.

Resource Management

| Strategy | Implementation | Benefit |
|---|---|---|
| Data Partitioning | Split datasets into smaller chunks | Boosts processing efficiency |
| Message Queuing | Use tools like RabbitMQ or Kafka | Enables decoupled processing |
| Dynamic Scaling | Leverage tools like KEDA | Allocates resources on demand |
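The data-partitioning strategy can be sketched in a few lines of Python: split the prompts into chunks and send each chunk concurrently to the model server. In this minimal sketch, worker is any function that handles one prompt, for example one that posts it to Ollama's /api/generate endpoint. The chunk size and worker count are assumptions to tune. On the server side, Ollama's OLLAMA_NUM_PARALLEL environment variable limits how many requests a loaded model serves at once. Client threads beyond that limit simply wait in line.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(items: list, chunk_size: int) -> list:
    """Split a dataset into consecutive chunks of at most chunk_size items."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def process_in_batches(prompts: list, worker, chunk_size: int = 8, max_workers: int = 4) -> list:
    """Apply worker(prompt) to every prompt, one chunk at a time, preserving input order."""
    results = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for chunk in partition(prompts, chunk_size):
            # Threads suit this I/O-bound work: each worker mostly waits on the model server.
            results.extend(pool.map(worker, chunk))  # map yields results in input order
    return results
```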

Advanced Optimization Techniques

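For low-QPS (queries per second) scenarios, Multi-Token Prediction (MTP), used for speculative decoding, can improve speed by up to 20%. Additionally, techniques like Expert Parallelism (EP) and Data Parallelism (DP) can enhance GPU usage and minimize redundancies. These techniques apply mainly when serving the full mixture-of-experts model with a dedicated inference engine rather than a typical Ollama setup.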

If you're working with multi-node setups, consider using 100+ Gbps connectivity, reentrant batch processing with checkpointing, and thin messaging protocols. These strategies can significantly improve performance and ensure efficient resource utilization.

Conclusion: Quick Setup for Business Use

With the system fully configured and optimized, deploying DeepSeek R1 locally can significantly enhance business operations.

Setting up DeepSeek R1 on-site boosts security while reducing processing delays by up to 30%.

Key Business Advantages

Local deployment safeguards sensitive information and accelerates processing speeds. For instance, in healthcare, diagnosis times can improve by 20–30%.

"Local AI ensures that sensitive information remains on the user's device, protecting it from unauthorized access or breaches during transmission...this keeps personal data secure and gives users more control over their information." – webAI

Implementation Strategy

To ensure seamless integration, prioritize areas where AI can deliver immediate results. As noted by industry experts, "Early adopters are reaping tremendous benefits". With a setup process that takes just seven minutes, DeepSeek R1 offers a strategic edge - especially for mid-sized businesses aiming to optimize operations.

| Deployment Benefit | Business Impact |
|---|---|
| Data Privacy | Keeps sensitive data on-site |
| Processing Speed | Improves task efficiency |
| Cost Efficiency | Eliminates recurring subscription fees |
| Offline Capability | Operates without internet access |

Begin with a pilot in one department, applying the same principles often used when testing smaller AI models. Companies like Brave have demonstrated the potential of local AI solutions, achieving 200% user growth within six months of deployment.

To maximize ROI and performance, focus on maintaining well-organized, accurate data. This approach ensures your setup not only meets technical requirements but also delivers measurable business improvements.

FAQs

What are the advantages of running DeepSeek R1 locally instead of online?

Running DeepSeek R1 on your local device comes with several standout benefits:

- Stronger Privacy: Keeping your data on your own system minimizes the chances of leaks or breaches tied to cloud services.

- Improved Speed: Without relying on the internet, you'll experience faster response times and smoother performance.

- Full Control: Tailor the tool's settings and functionality to suit your unique requirements.

- Lower Costs: Skip the ongoing expenses of cloud services, making it a more economical choice in the long run.

These advantages make local deployment a smart option for businesses aiming to integrate AI tools securely and efficiently into their workflows.

How can I boost DeepSeek R1's performance on my current hardware?


If you want to get better performance from DeepSeek R1 without investing in new hardware, here are a couple of smart strategies:

- Leverage GPUs or other accelerators: Hardware accelerators like GPUs are built to handle demanding AI tasks more efficiently. Using them can significantly speed up processing and minimize delays.

- Adjust model settings to fit your hardware: Tuning how the model runs can make a big difference. For instance, quantization reduces the model's size and improves speed, usually at the cost of a small drop in accuracy. Alternatively, you might explore distilled models, which are smaller models trained to reproduce the original model's behavior; they deliver faster results while retaining much of its quality.

With these tweaks, you can boost DeepSeek R1's performance without needing to overhaul your hardware setup.

What should I do if I run into GPU compatibility issues while setting up DeepSeek R1?

If you're running into GPU compatibility problems while setting up DeepSeek R1, here are some steps you can take to troubleshoot:

- Confirm system requirements: Double-check that your GPU meets the necessary specifications. This includes having enough VRAM and ensuring CUDA compatibility. For the best experience, GPUs like the NVIDIA RTX 3090 are highly recommended.

- Update your GPU drivers: Outdated drivers are a common cause of errors. Head to your GPU manufacturer's website to download and install the latest driver updates.

- Verify your CUDA version: Ensure the CUDA version installed on your system is compatible with both your GPU and DeepSeek R1. A mismatch between versions can often lead to setup issues.

Following these steps should address most GPU-related problems. If you're still facing issues, don't hesitate to reach out to the DeepSeek R1 support team for additional help.