Ultimate Guide to OpenAI Sora 2: Everything You Need to Know in 2025

Let me tell you about something that's genuinely exciting in the AI world right now.

OpenAI just dropped Sora 2, and it's not your typical "meh, another AI tool" moment.

This is the real deal: a video generator that actually understands physics, creates synchronized audio, and lets you star in your own AI-generated videos.

Sounds wild, right?

Let me break it down for you.


What Exactly Is Sora 2?

Think of Sora 2 as your personal Hollywood studio that lives in your phone.

You describe what you want to see, and it creates a short video—complete with sound effects, background music, or even dialogue.

But here's what makes it special: the videos actually make sense.

Objects don't randomly teleport.

Physics works the way it should.

A basketball that misses the hoop bounces off the backboard like in real life.

The original Sora (first shown in February 2024) was OpenAI's first attempt at video generation.

It worked, but it was basic.

Sora 2? That's their "we figured this out" moment.

The Features That Actually Matter

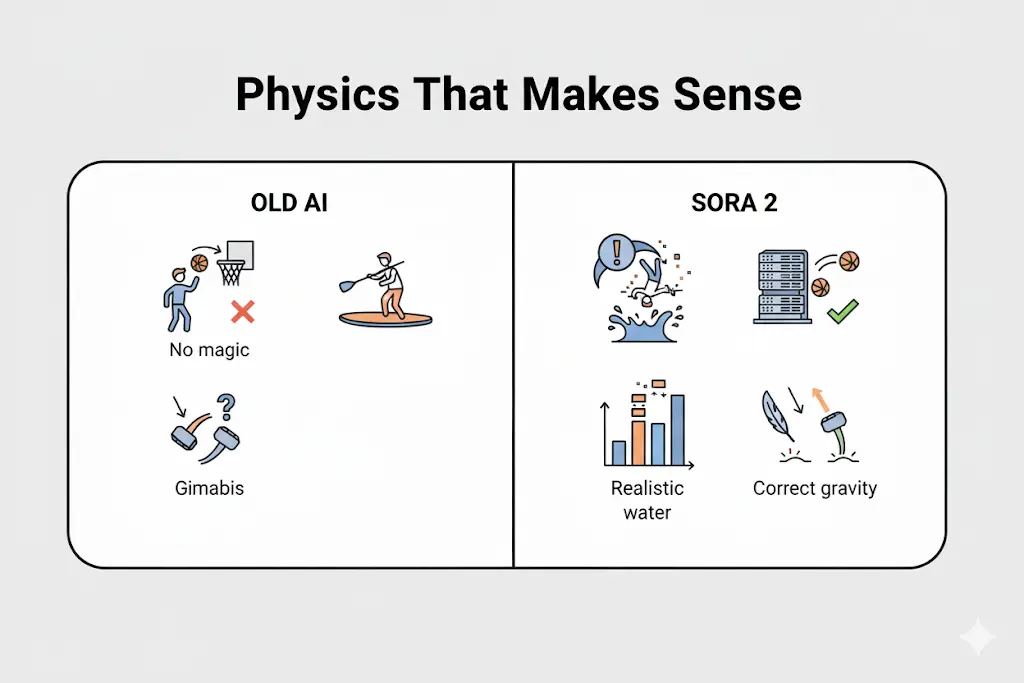

Physics That Makes Sense

Here's something you'll notice immediately: Sora 2 videos feel real because they follow actual physics.

- Complex movements work: Olympic gymnastics routines, backflips on paddleboards, figure skating with a cat (yes, really)

- Objects behave correctly: Things bounce, fall, and move like they should

- Water and buoyancy: Watch someone flip on a paddleboard and see the water respond realistically

- No more magic teleporting: Earlier AI models would cheat—miss a basketball shot? The ball just appears in the hoop. Sora 2 shows the miss

Audio That's Actually Good

This is huge. Most AI video tools give you silent clips. Sora 2 generates:

- Background soundscapes that match your scene

- Realistic sound effects (footsteps, splashes, wind)

- Actual dialogue with synchronized lip movements

- Ambient noise that makes scenes feel alive

Cameos: Put Yourself in Any Video

This feature is honestly mind-blowing.

You record a short video once (for verification), and then you can drop yourself into any Sora-generated scene.

Your appearance, your voice, everything stays accurate.

How it works:

- One-time video and audio recording in the app

- You control who can use your likeness

- Revoke access anytime

- Remove any video with your cameo (even drafts others made)

Multiple Visual Styles

Sora 2 isn't locked into one look:

- Realistic: Like real footage

- Cinematic: Hollywood movie quality

- Anime: For that animated style

Whatever vibe you're going for, it adapts.

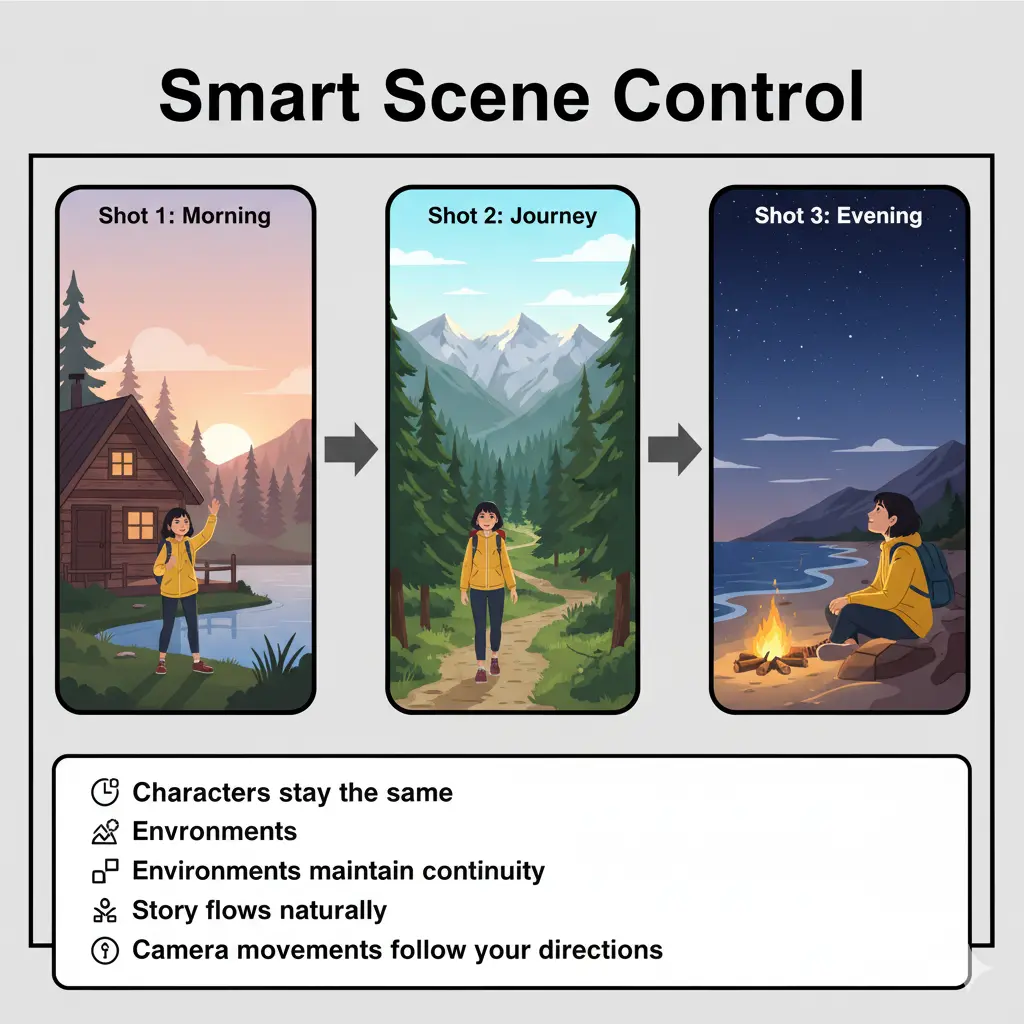

Smart Scene Control

You can give detailed instructions across multiple shots, and Sora 2 keeps everything consistent:

- Characters stay the same throughout

- Environments maintain continuity

- Story flows naturally from shot to shot

- Camera movements follow your directions

How to Actually Get Sora 2

Here's the practical stuff you need to know.

For iPhone Users

Right now:

- Download the Sora app from the iOS App Store

- Sign in with your OpenAI account (same one you use for ChatGPT)

- Sign up for the waitlist

- Get a push notification when your access is ready

Starting locations:

- United States

- Canada

- More countries coming soon

For Everyone Else

You can also access Sora 2 through sora.com once you get your invite.

Same account, works in your browser.

Android users: No official word yet, but it's likely coming. For now, you can use the web version.

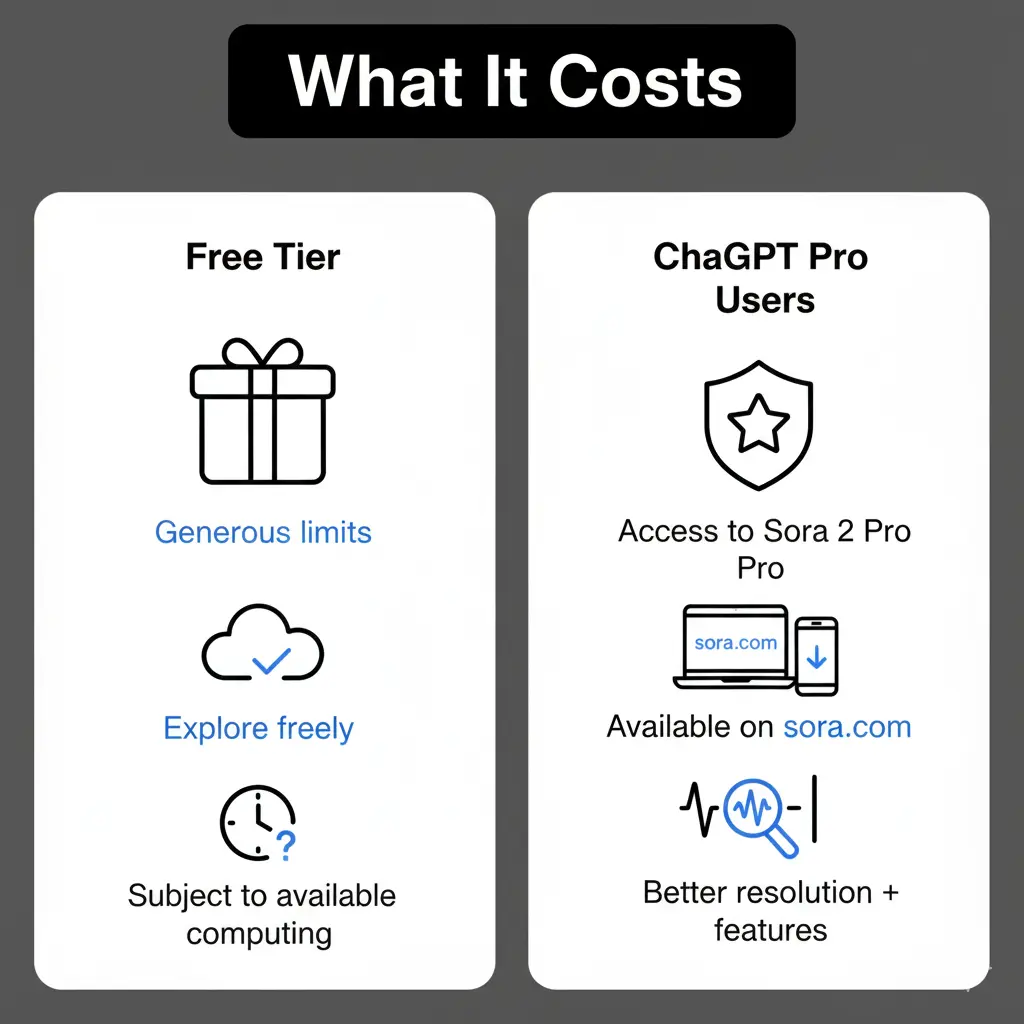

What It Costs

OpenAI is keeping it simple:

Free Tier:

- Generous limits to start

- Create and explore freely

- Subject to available computing power

ChatGPT Pro Users:

- Access to Sora 2 Pro (higher quality model)

- Available on sora.com (coming to app soon)

- Better resolution and more features

API Access:

- Coming for developers

- Pricing not announced yet

The honest take: OpenAI has said its only current plan is to eventually let you pay for extra generations when demand outstrips available computing power. There's no separate Sora subscription announced.

Sample Prompts to Get You Started

Let me give you some prompts that actually work. The key is being specific about what you want.

Nature & Landscapes

"A sunrise over misty mountains, golden light breaking through clouds, an eagle soars across the frame, gentle wind sounds and distant bird calls, camera slowly pans right following the eagle's flight"

Why it works: Specific subject (eagle), clear setting (mountains at sunrise), defined camera movement (pan right), audio details (wind, birds)

Action Scenes

"A skateboarder lands a kickflip on a city street, board spins perfectly, wheels hit concrete with a clean snap, urban background with morning traffic sounds, slow-motion capture at the moment of landing"

Why it works: Describes the trick, specifies the physics (board spin, landing), includes environment sounds, defines camera speed

Fantasy & Imagination

"A dragon glides between ice spires in a frozen canyon, wingtips create swirling snow trails, low afternoon sun casts golden light on blue scales, deep wing beats and crystalline ice sounds, camera tracks alongside at dragon's speed"

Why it works: Clear subject and environment, specific lighting, movement details, synchronized camera motion

Everyday Realistic

"A person sits at an outdoor cafe table, sipping coffee and watching people walk by, sunny afternoon with dappled shade, gentle cafe chatter and soft jazz in background, camera holds steady on subject's face as they smile"

Why it works: Simple, believable scene with clear audio elements and camera direction

Quick Tips for Better Prompts

- Be specific about movement: "Camera pans left" not just "moving camera"

- Include audio details: "Footsteps on gravel, distant car horn" makes it real

- Describe lighting: "Golden hour sunlight" or "overcast soft light"

- Keep it simple for starters: Don't try to cram too much into one scene

- Mention camera style: "Handheld and shaky" vs "smooth gimbal shot"
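If you write a lot of prompts, it can help to template them so you never forget an element. Below is a minimal sketch of a hypothetical Python helper. It is not part of the Sora app or any OpenAI API. It assembles the pieces from the tips above (subject, setting, lighting, audio, camera) into one prompt string and flags prompts that are missing camera or audio details. The warning thresholds are arbitrary assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class ScenePrompt:
    """Hypothetical helper that collects the prompt elements recommended above."""
    subject: str                 # who or what, doing what
    setting: str                 # where and when
    lighting: str = ""           # e.g. "golden hour sunlight"
    audio: list[str] = field(default_factory=list)  # e.g. ["footsteps on gravel"]
    camera: str = ""             # e.g. "camera pans left"

    def build(self) -> str:
        # Join the non-empty pieces in a fixed order: subject, setting, lighting, audio, camera.
        parts = [self.subject, self.setting, self.lighting]
        if self.audio:
            parts.append(" and ".join(self.audio))
        parts.append(self.camera)
        return ", ".join(p for p in parts if p)

    def warnings(self, max_audio: int = 3) -> list[str]:
        # Simple heuristics mirroring the tips; the max_audio threshold is an arbitrary assumption.
        notes = []
        if not self.camera:
            notes.append("No camera direction: say how the camera moves.")
        if not self.audio:
            notes.append("No audio details: add sounds to anchor the scene.")
        if len(self.audio) > max_audio:
            notes.append("Lots of audio cues: consider simplifying the scene.")
        return notes


# Usage: rebuild the skateboarding prompt from its parts.
prompt = ScenePrompt(
    subject="A skateboarder lands a kickflip on a city street",
    setting="urban background in the morning",
    audio=["wheels hit concrete with a clean snap", "morning traffic sounds"],
    camera="slow-motion capture at the moment of landing",
)
print(prompt.build())
print(prompt.warnings())  # [] because camera and audio are both present
```

You'd paste the printed string into Sora as usual. The helper only enforces the checklist. Whether a given prompt produces a good video still comes down to experimenting.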

What Sora 2 Struggles With (Let's Be Honest)

No AI is perfect. Here's where Sora 2 can mess up:

- Crowd scenes: Multiple people talking at once can get confused

- Complex collisions: Lots of objects hitting each other

- Very fast camera moves: Rapid pans or spins can break the scene's coherence

- Long scenes with many characters: Consistency drops

The fix? Keep prompts shorter, motion simpler, fewer characters, more explicit camera instructions.

The Social App Angle

OpenAI built Sora as a social app, which is interesting.

The feed works differently:

- Shows content from people you follow

- Prioritizes videos you might remix for your own creations

- Uses natural language to customize what you see

- NOT optimized for endless scrolling (by design)

You can:

- Remix other people's videos

- Share directly or via DM

- Follow creators you like

- See clear labels when something is a remix

For teens specifically:

- Daily limits on how many videos they see in the feed

- Stricter cameo permissions

- Parental controls available

- Human moderators for bullying cases

Safety Features You Should Know About

OpenAI is taking safety seriously here:

Content Protection:

- Automated filtering for harmful content

- Human moderators reviewing reports

- Can't generate certain restricted content

- Clear community guidelines

Your Likeness Control:

- You approve who uses your cameo

- Revoke access anytime

- See all videos with your likeness (even drafts)

- Report misuse immediately

For Parents:

- Parental controls via ChatGPT

- Override scroll limits

- Turn off algorithm personalization

- Manage messaging settings

Should You Actually Use This?

Here's my honest take.

Use Sora 2 if:

- You create content for social media

- You need quick video mockups

- You want to experiment with video ideas before filming

- You're exploring creative storytelling

- You want to make fun videos with friends

Maybe skip it if:

- You need professional Hollywood-level output

- You're working on long-form content (it maxes at 10 seconds currently)

- You need precise control over every frame

- You're outside the US and Canada or don't have an invite yet

What Makes Sora 2 Different

Let me be clear about why this matters.

Most AI video tools give you flashy demos but frustrating results. Things morph weirdly. Physics breaks. You get 3 seconds of usable footage from 20 attempts.

Sora 2 actually tries to understand how the world works.

When you ask for a backflip, it attempts to model the physics. When someone talks, their lips move in sync.

When water splashes, it behaves like water.

Is it perfect? No. Will it replace real filmmaking? Also no.

But it's the first AI video tool that feels like it's actually going somewhere useful.

The Bottom Line

Sora 2 is OpenAI's serious push into AI video generation. It combines realistic physics, synchronized audio, and creative control in a way we haven't seen before.

The cameos feature is genuinely novel—putting yourself into AI scenes with accuracy is wild.

Right now it's invite-only in the US and Canada, free to start, with a Pro tier for ChatGPT Pro subscribers. It's available on iOS and on the web at sora.com.

If you get access, start simple. Play with prompts. See what works. The technology is impressive, but it's still early days.

Want in? Download the Sora app, sign up, and join the waitlist. That's your move.

The future of video creation is getting interesting. Sora 2 is proof of that.

Ready to try Sora 2? Share this guide with someone who'd love to create AI videos.