Frameworks for GPT Benchmarking: Guide

Want to find the best GPT model for your needs? Benchmarking is the key. It helps you measure and compare GPT models based on performance, speed, cost, and reliability. Here's a quick breakdown:

- What is GPT Benchmarking? It’s the process of systematically testing GPT models to evaluate their accuracy, response time, token efficiency, and cost-effectiveness.

- Why does it matter? Choosing the right model can save money, improve workflows, and ensure consistent performance for tasks like content creation, tutoring, or technical documentation.

- Key Metrics: Accuracy, latency, cost efficiency, context window usage, and output consistency.

- Top Tools:

  - OpenAI Evals: Great for OpenAI models like GPT-3.5 and GPT-4, offering custom evaluations and model comparisons.

  - EleutherAI Evaluation Harness: Supports over 60 benchmarks and multiple architectures, ideal for research teams.

  - God of Prompt: A library of 30,000 categorized prompts to streamline benchmarking and testing.

Quick Comparison:

| Framework | Best For | Supported Models | Key Features |
|---|---|---|---|
| OpenAI Evals | OpenAI ecosystem users | GPT-3.5, GPT-4 series | Automated evaluations, YAML-driven configuration |
| EleutherAI Harness | Research and multi-model | 200+ models | Academic-grade benchmarks, local inference |
| God of Prompt | Business/workflow design | ChatGPT, Claude, etc. | Pre-built prompts, lifetime updates |

How to Benchmark Models:

- Set up your system (API keys, hardware, etc.).

- Use consistent prompts and settings for testing.

- Analyze metrics like accuracy, latency, and cost.

- Choose tools like OpenAI Evals or EleutherAI for structured evaluations.

- Leverage resources like God of Prompt to simplify prompt creation.


Top Frameworks for GPT Benchmarking

Frameworks for GPT benchmarking come in various forms, catering to different needs - from specialized tools to multi-system platforms. Below, we explore three standout frameworks, each offering unique features for evaluating large language models.

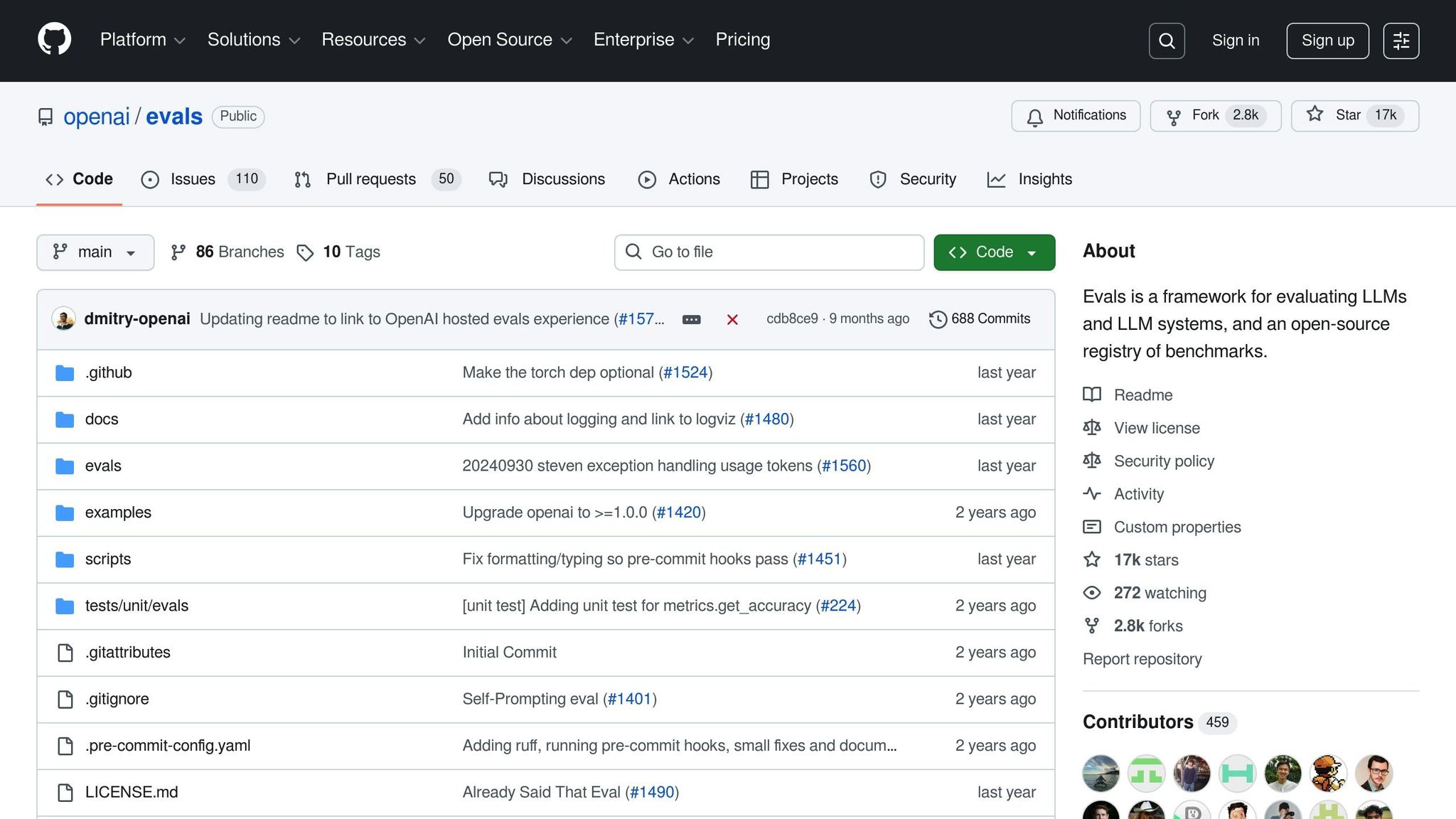

OpenAI Evals

OpenAI Evals is an open-source framework designed for systematic benchmarking and evaluation of large language models. It specializes in automated assessments of prompts, completions, and model performance.

One of its standout features is the ability to conduct "model vs. model" or "model vs. reference" comparisons, which are crucial for identifying performance differences between versions. It also supports custom datasets and templates, enabling tailored benchmarks to suit specific use cases.

The framework includes built-in evaluation types like multiple choice, summarization tasks, and factual accuracy checks. It even integrates human feedback to ensure the automated results align with practical, real-world quality. Using a YAML-driven approach, OpenAI Evals ensures consistency and reproducibility across evaluation runs.

For teams working with GPT-3.5-turbo and GPT-4-turbo models, OpenAI Evals provides a straightforward path to reproducible benchmarking results.

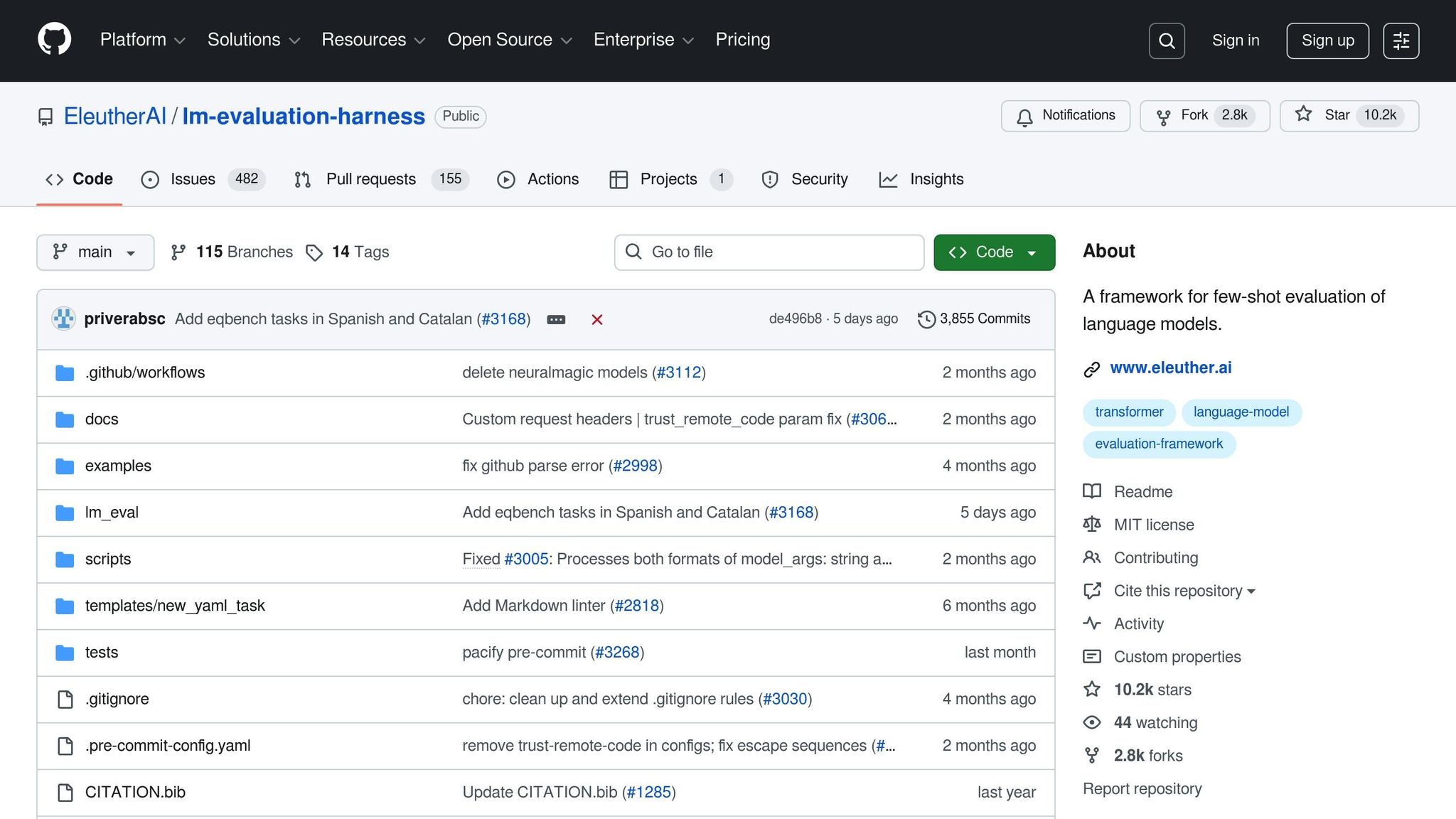

EleutherAI Evaluation Harness

EleutherAI Evaluation Harness is a versatile, open-source framework that supports few-shot evaluations of generative language models. It covers over 60 standard academic benchmarks, with hundreds of subtasks and variants between them, making it a robust choice for research teams.

The framework is compatible with a wide range of model backends, including HuggingFace transformers (both autoregressive and encoder-decoder models) and models quantized with GPTQModel or AutoGPTQ. It also integrates with accelerated inference engines and supports both commercial APIs and local inference servers. This flexibility extends to specialized deployments, such as NVIDIA NeMo models, OpenVINO models, and AWS Inf2 (Neuron) instances.

EleutherAI’s strength lies in its academic rigor and commitment to transparency. All prompts used in its evaluations are publicly accessible, allowing for independent verification and comparison of results. It also supports adapters like LoRA, making it a valuable tool for teams working with fine-tuned models.

God of Prompt for Benchmarking Workflows

God of Prompt simplifies the creation of benchmark test cases by offering over 30,000 categorized AI prompts for tools like ChatGPT, Claude, Midjourney, and Gemini AI. Instead of building prompts from scratch, teams can leverage these pre-organized collections to save time and effort.

The platform provides lifetime updates, ensuring its prompt library evolves alongside advancements in AI. Accessible via Notion, it helps users organize prompts tailored to specific projects or model types, streamlining workflow design.

Comparison Table

| Framework | Primary Strength | Best For | Model Support |
|---|---|---|---|
| OpenAI Evals | Automated custom evaluation | OpenAI ecosystem users | GPT-3.5, GPT-4 series |
| EleutherAI Evaluation Harness | Academic rigor and broad compatibility | Research teams and multi-model environments | Broad support across various architectures and APIs |
| God of Prompt | Curated prompt sourcing and organization | Business applications and workflow design | ChatGPT, Claude, Midjourney, Gemini AI |

Each of these frameworks supports essential benchmarking metrics, making them valuable tools for data-driven evaluations. The right choice depends on your specific goals. For OpenAI users, OpenAI Evals offers a seamless experience. Research teams needing multi-model compatibility might prefer EleutherAI Evaluation Harness, while God of Prompt is ideal for businesses seeking ready-to-use prompts for practical benchmarking scenarios.

How to Set Up and Run GPT Benchmarks

Setting up benchmarks for GPT models requires careful preparation to ensure accurate and reliable results. While the exact process can differ based on the framework you use, following these steps will help you create a solid benchmarking environment.

System Requirements and Setup

For API-based evaluations, such as those conducted with OpenAI Evals, a modern multicore CPU with sufficient memory is usually enough. However, if you're working with frameworks that support local model inference, like the EleutherAI Evaluation Harness, you'll need more robust hardware, including a powerful GPU, to handle the demands of local processing.

Most operating systems, including Windows, macOS, and Linux, are compatible with these tools. Ubuntu LTS releases are particularly popular for their smooth integration, while Windows users may benefit from enabling WSL2 for better compatibility with Python-based dependencies.

Storage needs depend on your workflow. API-based evaluations require minimal storage, but local inference workflows demand significant disk space to download and cache large language models.

Step-by-Step Configuration

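To get started with OpenAI Evals, set up your Python environment first; the project README lists Python 3.9 as the minimum version. If you plan to write custom evals, clone the repository and install it in editable mode (pip install -e .). If you only want to run existing evals, installing the package from PyPI is enough: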

pip install evals

Next, make your OpenAI API key available as the OPENAI_API_KEY environment variable. One option is a .env file that your shell or tooling loads, formatted like this:

OPENAI_API_KEY=sk-your-key-here
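Before launching a long run, it can help to fail fast when the key is missing. The following is a minimal sketch; `require_api_key` is a hypothetical helper name, not part of OpenAI Evals, and it only checks that the variable is present, not that the key is valid.

```python
import os


def require_api_key(env=None, name="OPENAI_API_KEY"):
    """Return the API key from the environment, or raise a clear error.

    `env` defaults to os.environ; passing a plain dict makes the check testable.
    """
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it or load your .env file first")
    return key
```

Calling `require_api_key()` at the top of a benchmark script surfaces a configuration problem before any evaluation time is spent.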

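Define your evaluation parameters in a YAML file. The snippet below is a simplified illustration of the settings involved when testing GPT-4's factual accuracy; the registry files shipped in the Evals repository follow their own schema, so check the repository documentation for the exact keys: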

```yaml
model: gpt-4-turbo
dataset: custom_facts
eval_type: match
temperature: 0.0
max_tokens: 100
```
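A match-style evaluation also needs a dataset of questions and expected answers. The sketch below writes one as JSON Lines, assuming the chat-style sample layout (an `input` list of messages plus an `ideal` answer) used by the basic match evals in the Evals repository. The function name, file name, and system prompt are hypothetical; verify the layout against the repository documentation before relying on it.

```python
import json
from pathlib import Path


def write_match_samples(path, qa_pairs, system_prompt="Answer concisely."):
    """Write (question, answer) pairs as one JSON object per line.

    Each line holds chat-style messages under "input" and the expected
    answer under "ideal" (assumed layout, see the note above).
    """
    path = Path(path)
    with path.open("w", encoding="utf-8") as f:
        for question, answer in qa_pairs:
            sample = {
                "input": [
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": question},
                ],
                "ideal": answer,
            }
            f.write(json.dumps(sample, ensure_ascii=False) + "\n")
    return path
```

Keeping the dataset in a plain text format like this also makes it easy to put under version control alongside the evaluation settings.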

For the EleutherAI Evaluation Harness, additional setup is required. Install the framework with:

pip install lm-eval

After installation, configure your model sources. For API-based evaluations, add your API keys to your environment variables. For local evaluations, download the required model weights and update the framework's configuration to point to their storage location.

Once everything is set up, you can run your first benchmark. For example, to evaluate GPT-2 on the HellaSwag dataset using GPU acceleration, you would use:

lm_eval --model hf --model_args pretrained=gpt2 --tasks hellaswag --device cuda:0
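When the same command has to be repeated across several models or tasks, assembling it in a script avoids copy-paste drift. This is a sketch under the assumption of a recent lm-eval release, where the Hugging Face backend is named `hf`; `build_lm_eval_command` is a hypothetical helper, and only the flags shown (`--model`, `--model_args`, `--tasks`, `--device`, `--batch_size`, `--output_path`) are used.

```python
def build_lm_eval_command(pretrained, tasks, device="cuda:0",
                          batch_size=None, output_path=None):
    """Build the argument list for an lm_eval run on a Hugging Face model."""
    cmd = [
        "lm_eval",
        "--model", "hf",
        "--model_args", f"pretrained={pretrained}",
        "--tasks", ",".join(tasks),  # lm_eval takes a comma-separated task list
        "--device", device,
    ]
    if batch_size is not None:
        cmd += ["--batch_size", str(batch_size)]
    if output_path is not None:
        cmd += ["--output_path", output_path]
    return cmd
```

The returned list can be passed to `subprocess.run(cmd, check=True)`, which avoids shell quoting issues and raises if the run fails.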

Finally, ensure you have a collection of high-quality prompts to achieve reliable benchmarking results.

Finding and Organizing Prompts

A well-prepared prompt dataset is critical for meaningful evaluations. Established datasets like GLUE, SuperGLUE, and BIG-bench are excellent starting points, as they cover a wide range of tasks, including reasoning, language understanding, and factual knowledge.

If you're creating custom prompts, tailor them to your specific goals. For instance, business applications may focus on customer service scenarios, while research projects might explore mathematical reasoning or programming tasks. Use version control to maintain consistency and track changes in your prompt collection.

Platforms like God of Prompt can simplify this process by offering categorized prompt bundles designed for various industries and use cases. These collections allow teams to quickly adapt prompts to their evaluation needs.

To keep things organized, adopt standard naming conventions and use metadata tagging. This approach makes it easier to reproduce benchmarks and compare results over time, ensuring your evaluations remain consistent and reliable.
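One way to apply naming conventions and metadata tagging is to store each prompt as a small record with a derived identifier. The sketch below is illustrative only: the `PromptRecord` fields and the `task/name-vN-hash` naming scheme are assumptions, not a convention prescribed by any of the frameworks discussed here.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptRecord:
    name: str          # short human-readable label
    text: str          # the prompt itself
    task: str          # e.g. "customer_service" or "math_reasoning"
    version: int = 1   # bump when the wording changes on purpose
    tags: tuple = ()   # free-form metadata tags

    @property
    def prompt_id(self):
        """Stable identifier: task, name, version, and a short hash of the text."""
        digest = hashlib.sha256(self.text.encode("utf-8")).hexdigest()[:8]
        return f"{self.task}/{self.name}-v{self.version}-{digest}"


def filter_by_tag(records, tag):
    """Return the records that carry the given tag."""
    return [r for r in records if tag in r.tags]
```

Because the identifier includes a hash of the text, an accidental edit to a prompt shows up as a changed ID in the results log.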


How to Analyze and Compare Benchmark Results

Once you've run your benchmarks, the next step is to make sense of the results. Proper analysis is key to turning raw data into actionable insights.

Reading Metrics and Results

Understanding the metrics is crucial because accuracy, latency, cost, and consistency all play distinct roles depending on your goals:

- Accuracy: The share of test cases a model gets right against a reference answer. How much weight it deserves depends on the task: for work that requires precision, such as factual lookups or technical documentation, a more accurate model will generally be the more reliable choice.

- Latency: This measures how quickly a system responds, often in milliseconds or seconds. API calls add network and queueing overhead, while local inference speed depends on your hardware, so measure both under the conditions you plan to deploy in. In real-time applications like chatbots, keeping latency low is essential to maintain user satisfaction.

- Cost: For large-scale deployments, cost analysis is vital. Take OpenAI's GPT-4 as an example - it charges per token. Estimating token usage can help predict expenses and manage budgets effectively.

- Token Efficiency: Models that achieve similar results using fewer tokens can lead to significant cost savings. Pay attention to both input and output token usage to identify areas for optimization.

- Consistency: Reliable performance across multiple runs is a good indicator of a model's stability. Look for models that deliver consistent results over repeated evaluations.
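The metrics above can be computed with a few small functions. This is a sketch under stated assumptions: accuracy is scored as a case-insensitive exact match, latency uses a nearest-rank percentile, consistency is the share of runs agreeing with the most common output, and the per-1,000-token prices are parameters you supply from your provider's current price list. All function names are hypothetical.

```python
import math
from collections import Counter


def accuracy(predictions, references):
    """Fraction of predictions that match the reference (case-insensitive)."""
    if not references or len(predictions) != len(references):
        raise ValueError("predictions and references must be non-empty and equal in length")
    correct = sum(p.strip().lower() == r.strip().lower()
                  for p, r in zip(predictions, references))
    return correct / len(references)


def latency_percentile(latencies_s, pct):
    """Nearest-rank percentile of a list of latencies in seconds."""
    if not latencies_s:
        raise ValueError("no latencies recorded")
    ordered = sorted(latencies_s)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def estimate_cost(input_tokens, output_tokens, usd_per_1k_input, usd_per_1k_output):
    """Estimated spend in USD from token counts and per-1,000-token prices."""
    return (input_tokens / 1000 * usd_per_1k_input
            + output_tokens / 1000 * usd_per_1k_output)


def consistency(outputs):
    """Share of repeated runs that agree with the most common output."""
    if not outputs:
        raise ValueError("no outputs recorded")
    return Counter(outputs).most_common(1)[0][1] / len(outputs)
```

Tracking input and output tokens separately matters because providers often price them differently, which is why `estimate_cost` takes two rates.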

Building Comparison Tables

Organizing your findings in a structured way makes it easier to compare frameworks and choose the best fit for your needs. Tables are a practical way to summarize key metrics. Here's an example:

| Framework | Supported Models | Setup Time | Estimated Cost | Accuracy Level | Prompt Integration |
|---|---|---|---|---|---|
| OpenAI Evals | GPT-3.5, GPT-4, GPT-4 Turbo | Quick setup | Moderate expense | High | Custom prompts supported; integrates with God of Prompt |
| EleutherAI Harness | 200+ open models | More complex | Lower (local use) | Moderate | Standard datasets supported with custom prompts |
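A comparison table like the one above can be generated from your results rather than maintained by hand. The sketch below renders a list of dictionaries as a pipe table; `to_markdown_table` is a hypothetical helper, and it assumes cell values contain no pipe characters.

```python
def to_markdown_table(rows, columns):
    """Render rows (a list of dicts) as a pipe-delimited table.

    Missing keys are rendered as empty cells.
    """
    header = "| " + " | ".join(columns) + " |"
    divider = "|" + "|".join("---" for _ in columns) + "|"
    body = [
        "| " + " | ".join(str(row.get(col, "")) for col in columns) + " |"
        for row in rows
    ]
    return "\n".join([header, divider, *body])
```

Regenerating the table after each benchmark run keeps the summary in step with the underlying data.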

When comparing frameworks, don't forget to factor in hardware requirements. Some frameworks run efficiently on a standard laptop, while others may need powerful GPUs for optimal performance. The learning curve is another consideration - some tools are user-friendly, while others require more technical expertise. Community support can also make a big difference. Active forums, responsive GitHub repositories, and comprehensive documentation can save you time and frustration when troubleshooting or integrating new tools.

Making Data-Driven Decisions

Once you’ve analyzed the metrics and created comparison tables, it’s time to align the findings with your specific goals. Different use cases will prioritize different metrics:

- Creative Content: Marketing teams may prioritize models that excel at generating engaging, imaginative outputs.

- SEO Applications: Models that integrate keywords effectively and produce well-structured content are often the top choice.

- Educational Tools: High factual accuracy and clear explanations are critical for learning environments.

You’ll also want to weigh trade-offs between performance, setup time, and cost. For instance, if two frameworks deliver similar results but one is significantly cheaper to maintain, that might tip the scales in its favor.
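One simple way to make such trade-offs explicit is a weighted score. The sketch below assumes every metric has already been normalized to a 0 to 1 scale where higher is better (so cost becomes "affordability" and latency becomes "speed"); the metric names, weights, and function names are hypothetical.

```python
def weighted_score(metrics, weights):
    """Weighted average of normalized metrics (0..1, higher is better)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[name] * metrics[name] for name in weights) / total


def rank_models(candidates, weights):
    """Return candidate names ordered from best to worst weighted score."""
    return sorted(candidates,
                  key=lambda name: weighted_score(candidates[name], weights),
                  reverse=True)
```

For example, `rank_models({"model_a": {...}, "model_b": {...}}, {"accuracy": 0.5, "speed": 0.2, "affordability": 0.3})` returns the names in preference order. Changing the weights shows how sensitive the decision is to your priorities.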

Using standardized prompts, like those from God of Prompt, can streamline your evaluations. Consistency in testing not only saves time but also ensures fair comparisons across different models. Don’t forget to consider ongoing maintenance and update costs as part of your decision-making process.

Best Practices and Advanced GPT Benchmarking Strategies

Benchmarking GPT models effectively requires more than just running basic tests. The most accurate results come from a structured approach that accounts for variability, incorporates advanced techniques, and tackles complex, real-world scenarios.

Getting Reliable Benchmark Results

To ensure consistency, start with a standardized prompt format for all tests. Use the same structure, tone, and style for similar tasks. If you modify variables between tests, document every change carefully to maintain reproducibility.

Set the temperature to 0 (or close to it) to reduce randomness. This makes repeated runs far more consistent, so it is easier to separate true performance differences from random fluctuations.

Run multiple iterations for each test case to account for model variability. Even at low temperature settings, slight differences can occur. Running 3–5 iterations and averaging the results provides a clearer picture of performance.
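Averaging is more informative when the spread is reported alongside it. A minimal sketch using Python's standard `statistics` module follows; `summarize_runs` is a hypothetical helper name.

```python
from statistics import mean, stdev


def summarize_runs(scores):
    """Summarize the scores from repeated runs of one test case."""
    if not scores:
        raise ValueError("at least one score is required")
    # The sample standard deviation needs two or more values.
    spread = stdev(scores) if len(scores) > 1 else 0.0
    return {
        "runs": len(scores),
        "mean": mean(scores),
        "stdev": spread,
        "min": min(scores),
        "max": max(scores),
    }
```

If the difference between two models is smaller than the standard deviation across runs, more iterations are needed before treating that difference as real.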

Automation can simplify large-scale benchmarking and reduce errors. Python scripts can batch process tests, log results, and maintain consistent API timing. Additionally, record key details about the test environment - like the date, model version, API endpoint, and system specifications - to track any external factors that might influence results.
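The automation described above can be sketched as a small runner that times each call and logs environment details with every result. This is an illustration, not a framework API: `call_model` is a function you supply (for example, a thin wrapper around your API client), and the record fields are assumptions.

```python
import json
import platform
import time
from datetime import datetime, timezone


def run_benchmark(call_model, prompts, model_name, iterations=3, log_path=None):
    """Run every prompt `iterations` times and return one record per call.

    call_model: caller-supplied function mapping a prompt string to an output string.
    prompts: dict mapping a prompt ID to its prompt text.
    """
    metadata = {
        "model": model_name,
        "started_at": datetime.now(timezone.utc).isoformat(),
        "python": platform.python_version(),
        "platform": platform.platform(),
    }
    records = []
    for prompt_id, prompt in prompts.items():
        for iteration in range(iterations):
            start = time.perf_counter()
            output = call_model(prompt)
            latency_s = time.perf_counter() - start
            records.append({**metadata, "prompt_id": prompt_id,
                            "iteration": iteration, "latency_s": latency_s,
                            "output": output})
    if log_path is not None:
        # One JSON object per line keeps the log easy to append to and diff.
        with open(log_path, "w", encoding="utf-8") as f:
            for record in records:
                f.write(json.dumps(record) + "\n")
    return records
```

Passing the model call in as a function keeps the runner independent of any one provider, so the same loop can drive an API model or a local one.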

These foundational practices set the stage for integrating more advanced techniques into your benchmarking workflow.

Using Prompt Engineering Resources

Advanced prompt engineering techniques can elevate benchmarking accuracy. Methods like Chain-of-Thought (CoT), self-consistency, and Tree-of-Thoughts (ToT) have been shown to improve results significantly by enhancing the model's reasoning capabilities.

Tree-of-Thoughts (ToT) is particularly effective for complex problem-solving tasks. For example, in benchmarking scenarios, ToT achieved a 74% success rate on the Game of 24 task (using a breadth of b=5), far surpassing standard input-output methods (7.3%), CoT (4.0%), and CoT with self-consistency (9.0%).
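Of the methods mentioned here, self-consistency is the simplest to sketch: sample several answers and keep the most frequent one. In the sketch below, `sample_answer` is a caller-supplied function that returns one final answer per call. It must sample with a temperature above 0, otherwise every sample would be the same. The function names are hypothetical.

```python
from collections import Counter


def self_consistent_answer(sample_answer, question, n_samples=5, normalize=str.strip):
    """Majority vote over several sampled answers to one question.

    Returns the winning answer and the fraction of samples that agreed with it.
    """
    answers = [normalize(sample_answer(question)) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples
```

The agreement fraction is worth logging as well, since low agreement flags questions where the model's answer is unstable.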

Another valuable resource is God of Prompt, which offers a curated collection of over 30,000 AI prompts. These categorized prompt bundles provide standardized templates that can serve as consistent baselines across different models. Their prompt engineering guides also help users identify the best techniques for specific tasks, ensuring benchmarks align with real-world usage patterns.

While refining prompt formats is critical, exploring advanced use cases can take benchmarking to the next level.

Advanced Benchmarking Use Cases

Calibrated Confidence Prompting (CCP) is a technique that evaluates a model's ability to express confidence in its responses. This is particularly important for assessing reliability in sensitive applications.
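The text above does not specify how confidence should be scored. One widely used measure, swapped in here as an assumption, is expected calibration error (ECE): group answers by stated confidence and compare each group's average confidence with its actual accuracy. The sketch assumes confidences are expressed as numbers between 0 and 1.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Expected calibration error over equal-width confidence bins.

    confidences: stated confidence per answer, each in [0, 1].
    correct: whether each answer was right (booleans).
    """
    if not confidences or len(confidences) != len(correct):
        raise ValueError("inputs must be non-empty and equal in length")
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # a confidence of 1.0 goes in the last bin
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(conf for conf, _ in members) / len(members)
        acc = sum(1 for _, ok in members if ok) / len(members)
        ece += len(members) / total * abs(avg_conf - acc)
    return ece
```

A value near 0 means stated confidence tracks actual accuracy; a large value means the model is over- or under-confident.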

Security-focused benchmarking is another advanced strategy. By designing tests that identify vulnerabilities in the model, you can address weaknesses in prompt engineering and improve overall robustness.
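As one illustration of a security-focused test, the sketch below plants a canary string in the system prompt and measures how often adversarial inputs get the model to reveal it. Everything here is hypothetical: the canary value, the function names, and the `call_model(system_prompt, user_message)` signature, which you would implement around your own client.

```python
def leak_rate(call_model, system_prompt_template, attacks, canary="CANARY-7f3a"):
    """Fraction of attack prompts whose response contains the planted canary.

    call_model: caller-supplied function (system_prompt, user_message) -> response text.
    system_prompt_template: text containing a {canary} placeholder.
    """
    if not attacks:
        raise ValueError("at least one attack prompt is required")
    system_prompt = system_prompt_template.format(canary=canary)
    leaks = [attack for attack in attacks
             if canary in call_model(system_prompt, attack)]
    return len(leaks) / len(attacks), leaks
```

The returned list of successful attacks shows which prompt patterns need hardening. A substring check only catches verbatim leaks, so paraphrased disclosures need a separate review.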

Frameworks like LangChain, Semantic Kernel, and Guidance AI are invaluable for automating complex prompting workflows. They make advanced benchmarking processes more efficient and reproducible.

Finally, Active Prompting has been reported to outperform self-consistency by an average of 2.1% when using code-davinci models, which makes it another technique worth including in advanced benchmarking workflows.

Conclusion

This guide has covered key strategies and tools for effective GPT benchmarking. At its core, GPT benchmarking relies on structured frameworks, practical methods, and reliable resources. We've discussed how tools like OpenAI Evals and the EleutherAI Evaluation Harness provide solid foundations for systematic testing, while advanced prompt engineering plays a crucial role in improving benchmark precision.

Achieving accurate benchmarking results hinges on consistency and reproducibility. Using a temperature setting of 0, running multiple iterations, and keeping thorough documentation are essential steps to ensure dependable outcomes. Incorporating automation not only reduces the chance of errors but also allows for scalability. As GPT models continue to evolve, benchmarking methods need to measure both their accuracy and overall performance comprehensively.

A valuable resource in this process is God of Prompt, which offers a collection of over 30,000 categorized AI prompts. These prompts serve as standardized baselines, making it easier to benchmark across various models. Additionally, their prompt engineering guides help refine techniques for specific tasks, ensuring benchmarks align with real-world usage scenarios.

As the field of benchmarking progresses, there’s a growing focus on reliability, calibration, and resilience against vulnerabilities. The choice of framework ultimately depends on your goals - whether you're conducting academic research, optimizing AI for business, or building new AI products. By leveraging proven frameworks, targeted prompt engineering, and resources like God of Prompt, you can streamline benchmarking efforts and gain meaningful insights.

FAQs

How do I choose the right GPT benchmarking framework for my goals?

To select the best GPT benchmarking framework, start by pinpointing the performance metrics that matter most for your project. These might include accuracy, scalability, bias detection, or robustness. Next, think about the specific tasks your project emphasizes - whether it's reasoning, coding, or working across multiple modalities - and choose a framework designed to evaluate those capabilities effectively.

You'll also want to ensure the framework fits your project's scale and technical needs. Look for tools that are straightforward to set up, offer clear and actionable evaluation results, and can adapt to ongoing advancements in AI. By aligning the framework with your goals and requirements, you'll get benchmarking results that are both precise and highly relevant.

What advanced techniques can improve the accuracy and reliability of GPT benchmarking?

To improve the precision and dependability of GPT benchmarking, you can apply specific prompt engineering methods:

- Chain-of-thought (CoT) prompting: This technique encourages the model to break down problems into smaller, logical steps, helping it tackle more intricate tasks effectively.

- Self-consistency: By generating multiple responses and selecting the one that appears most frequently, this approach reduces variability and ensures more reliable outcomes.

- Meta prompting: Here, the model is directed to review or validate its own answers, which enhances the overall accuracy of its responses.

These strategies work together to produce benchmarking results that are more consistent and dependable, while also encouraging clearer reasoning and minimizing discrepancies in outputs.

What role do metrics like accuracy, latency, and cost efficiency play in choosing the right GPT model for different applications?

Metrics like accuracy, latency, and cost efficiency play a central role in choosing the right GPT model for your specific needs.

- Accuracy is a top priority for tasks that demand reliable and precise outputs, such as conducting research or generating important insights.

- Latency becomes critical in real-time scenarios like chatbots or interactive tools, where quick responses enhance the overall user experience.

- Cost efficiency is a key consideration for large-scale projects or those with tight budgets, ensuring you can manage expenses without compromising too much on performance.

Selecting the best GPT model boils down to your main objectives - whether you need pinpoint accuracy, lightning-fast responses, or a cost-effective solution to meet your application's demands.