OpenAI held its annual developer conference, DevDay, on October 6, 2025, in San Francisco.

They announced a lot of new products, tools, and features in one morning.

Let me walk you through every announcement, what each one does, and why you should care about it.

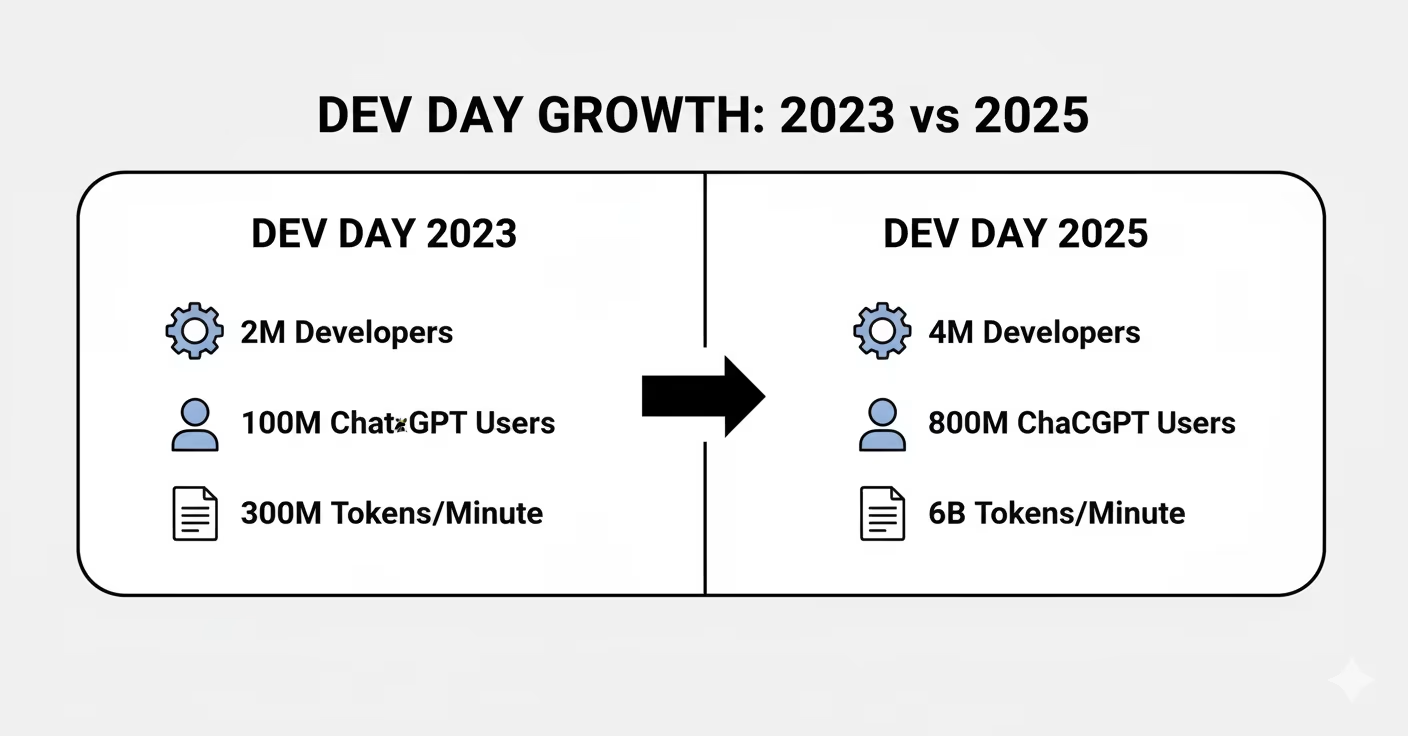

Before we get into the new stuff, here's how much the platform has grown since DevDay 2023.

Two years ago at DevDay 2023:

Now at DevDay 2025:

The developer count doubled.

The user count went up 8 times.

The token processing increased 20 times.

This matters because all the announcements are built for this bigger scale.

OpenAI isn't making experimental features anymore.

They're building for production use by millions of developers.

OpenAI announced that developers can now build full applications that work inside ChatGPT conversations.

This isn't like the GPTs they released before.

This is much bigger.

Apps are interactive programs that live right in the chat.

Users don't download anything.

They don't visit another website.

The app runs inside the conversation.

How users find apps:

They can call them by name. Someone types "Spotify, make me a workout playlist," and the Spotify app appears in the chat.

Or ChatGPT suggests them.

If you're talking about buying a house, ChatGPT might suggest the Zillow app to browse homes.

What makes these different from chatbots:

These apps have real interfaces.

Maps. Video players. Interactive forms. Clickable buttons. You can do real work inside them.

They connect to actual backends. Your databases. Your APIs. Your business logic.

This isn't just AI responses; it's your full application.

Users can log in with existing accounts. If someone already pays for your service, they can access it through ChatGPT.

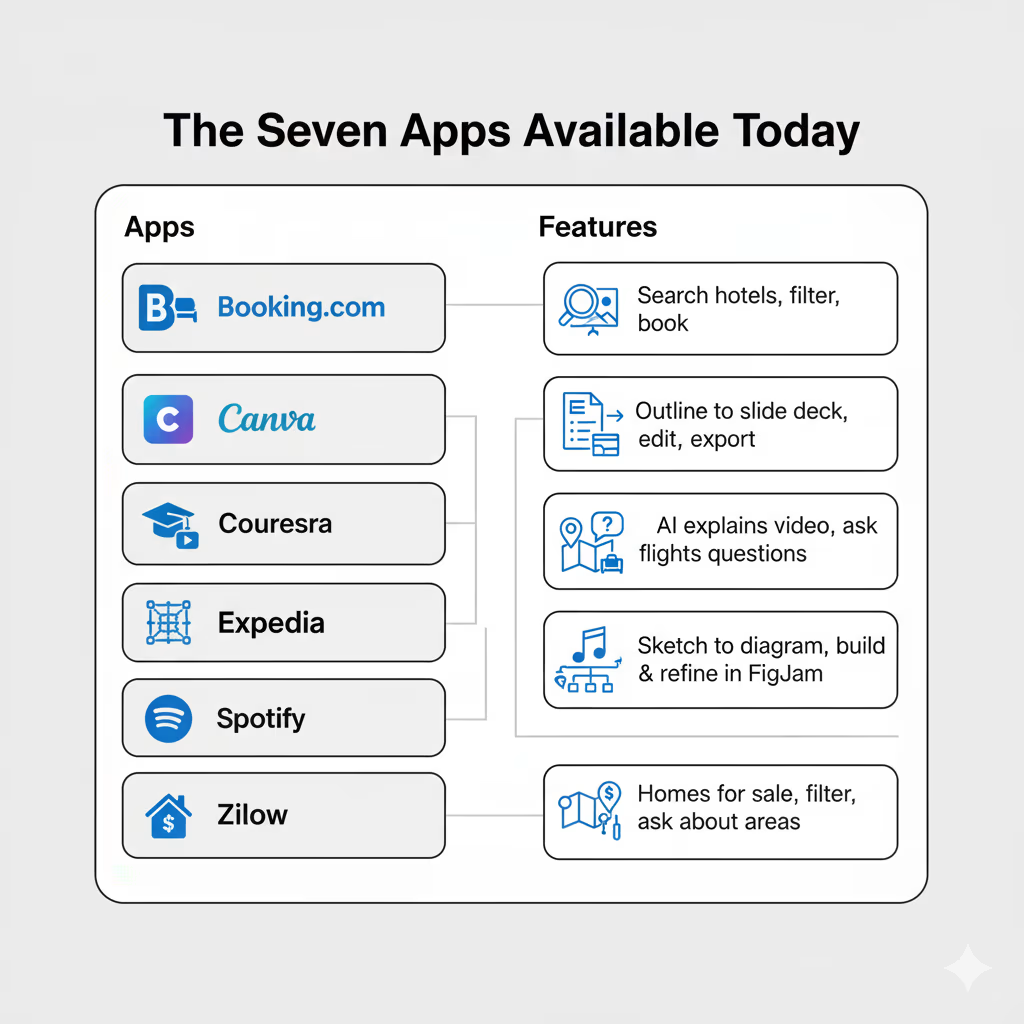

OpenAI launched with seven partner companies. Each one shows what's possible.

Booking.com - Search for hotels, see results with photos and prices, filter by what you need, book directly.

Canva - Type an outline, Canva turns it into a slide deck. Edit the design right there. Export when you're done.

Coursera - Watch educational videos with AI explaining difficult parts as you watch. Ask questions about what you're learning.

Expedia - Plan trips, search flights, book hotels and activities, all in the conversation.

Figma - Upload a sketch, Figma converts it to a working diagram. Click to open in FigJam for more editing.

Spotify - Describe what kind of music you want, Spotify builds a playlist. Refine it by talking.

Zillow - See homes for sale on an interactive map. Filter by price, bedrooms, features. Ask about neighborhoods.

OpenAI released the Apps SDK so any developer can build apps for ChatGPT.

What the SDK gives you:

It's built on something called Model Context Protocol, or MCP.

This is an open standard for connecting AI to tools.

If you already know MCP, you're halfway there.
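To make "built on MCP" a little more concrete: MCP messages are JSON-RPC 2.0, and a client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`. The sketch below hand-rolls a tiny in-memory dispatcher for just those two methods instead of using the official MCP SDK. The `search_homes` tool and its listings are hypothetical, and a real Apps SDK server would also attach UI metadata that isn't shown here.

```typescript
// Simplified shapes of MCP's JSON-RPC 2.0 messages (no transport layer).
type JsonRpcRequest = {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
};

type JsonRpcResponse = {
  jsonrpc: "2.0";
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
};

// Hypothetical backend data: a hard-coded list of homes.
const homes = [
  { address: "12 Oak St", price: 450_000 },
  { address: "98 Pine Ave", price: 720_000 },
];

// Tool descriptions the model reads to decide when to call the tool.
const tools = [
  {
    name: "search_homes",
    description: "Find homes at or below a maximum price",
    inputSchema: {
      type: "object",
      properties: { maxPrice: { type: "number" } },
      required: ["maxPrice"],
    },
  },
];

function handle(req: JsonRpcRequest): JsonRpcResponse {
  if (req.method === "tools/list") {
    // The client asks what this server can do.
    return { jsonrpc: "2.0", id: req.id, result: { tools } };
  }
  if (req.method === "tools/call") {
    if (req.params?.name !== "search_homes") {
      // -32602 is JSON-RPC's "Invalid params" code, used here for an unknown tool.
      return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: "Unknown tool" } };
    }
    const maxPrice = Number(req.params.arguments?.maxPrice);
    const matches = homes.filter((h) => h.price <= maxPrice);
    // Results carry human-readable content plus structured data a UI could render.
    return {
      jsonrpc: "2.0",
      id: req.id,
      result: {
        content: [{ type: "text", text: `Found ${matches.length} home(s)` }],
        structuredContent: { homes: matches },
      },
    };
  }
  // -32601 is JSON-RPC's standard "Method not found" code.
  return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: "Method not found" } };
}
```

Keeping the tool's data in `structuredContent` alongside the text summary is what lets an interactive view render results while the model still gets a plain-language answer.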

The Apps SDK adds more on top of MCP:

What you can build:

Your app can use any web technology.

React, Vue, plain HTML and CSS - whatever you know.

You control the backend completely.

Your servers, your database, your security, your business rules.

You can charge money.

Let free users see some features, paid users see everything. You decide.

The "talking to apps" feature:

This is important.

Your app can tell ChatGPT what the user is looking at.

Example: Someone is watching a course in the Coursera app.

They ask "can you explain this part more?"

ChatGPT knows exactly which part of the video they mean because the Coursera app told it.

Or someone is looking at a house on the Zillow map.

They ask "how close is this to a dog park?"

ChatGPT knows which house they mean.

This makes the whole thing feel natural.

No explaining context over and over.
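One way to picture this: the app keeps a small, serializable description of what's on screen and hands it to the model along with the user's question. The real Apps SDK has its own mechanism for sharing this state; the `ViewState` type and `buildModelContext` function below are hypothetical names used only to illustrate the idea with the Coursera example.

```typescript
// Hypothetical snapshot of what the user is currently looking at in the app.
type ViewState = {
  courseTitle: string;
  lectureTitle: string;
  positionSeconds: number; // playback position in the current video
};

// Format seconds as m:ss so the model sees a readable timestamp.
function formatTimestamp(totalSeconds: number): string {
  const minutes = Math.floor(totalSeconds / 60);
  const seconds = Math.floor(totalSeconds % 60);
  return `${minutes}:${seconds.toString().padStart(2, "0")}`;
}

// Combine the on-screen state with the question, so "this part"
// can be resolved without the user re-explaining where they are.
function buildModelContext(view: ViewState, question: string): string {
  return [
    `User is watching "${view.lectureTitle}" in "${view.courseTitle}"`,
    `at ${formatTimestamp(view.positionSeconds)}.`,
    `Question: ${question}`,
  ].join(" ");
}

console.log(
  buildModelContext(
    { courseTitle: "Intro to ML", lectureTitle: "Gradient Descent", positionSeconds: 125 },
    "can you explain this part more?",
  ),
);
```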

When you can publish your app:

Right now, the SDK is in preview.

You can build and test using something called Developer Mode.

Later in 2025, OpenAI will open submissions.

Any developer can submit their app for review.

When approved, your app goes in a directory where people can find it.

How you make money:

OpenAI hasn't released all the details yet, but they said it's coming later this year.

One option they mentioned: Agentic Commerce Protocol.

This lets people buy things instantly inside ChatGPT without leaving for another website.

Getting users is the hardest part of building software.

You can build something great and nobody finds it.

Apps SDK solves this.

Build your app once, and it can reach the 800 million people who use ChatGPT every week.

They find it naturally when they need it.

No paying for ads. No fighting with app store policies.

No long download and setup process.

That's a massive change in how software distribution works.

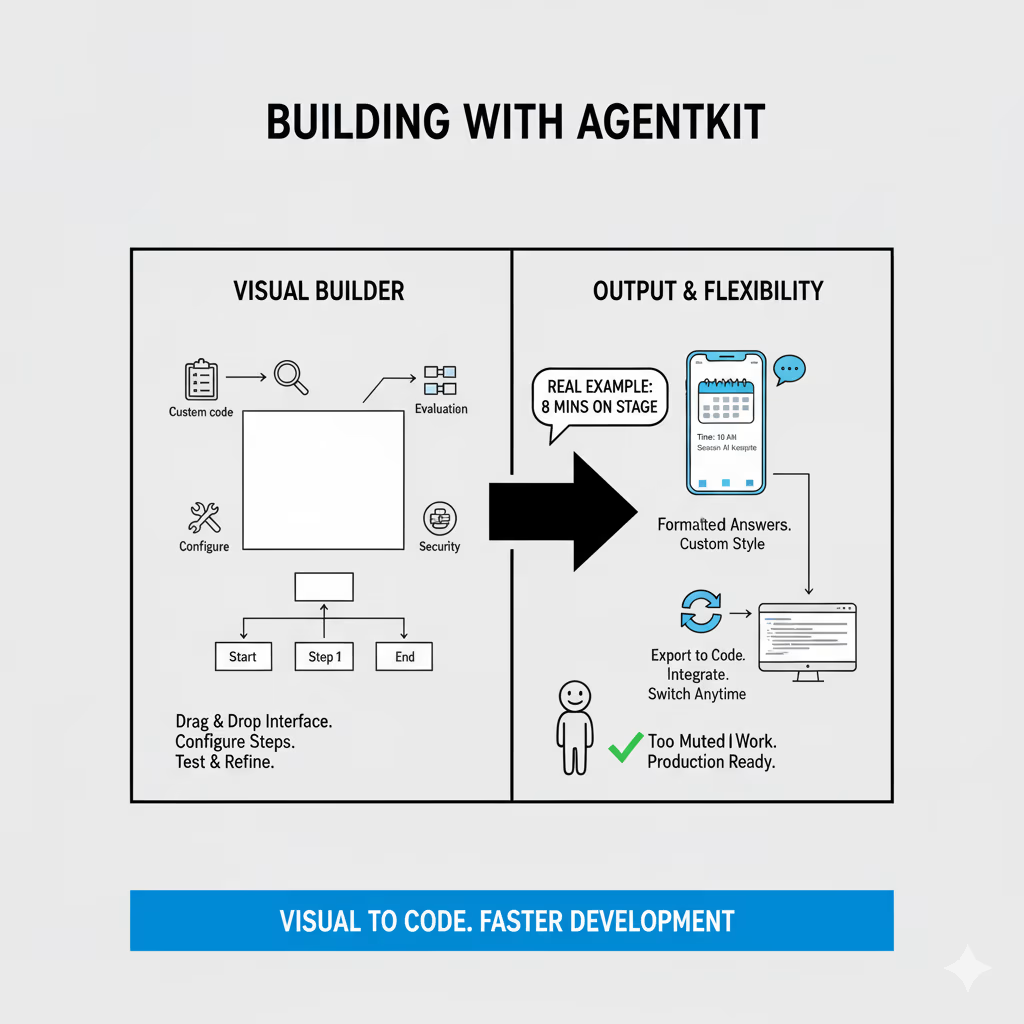

OpenAI announced AgentKit, which they describe as a complete set of tools for building AI agents.

Right now, building an AI agent that actually works in production is really hard.

You need to connect a bunch of different tools. Set up complicated workflows.

Write custom code to connect everything. Build evaluation systems to test if it works. Create a user interface. Handle errors. Make it secure.

Most agents never make it past the prototype stage because it's too much work.

AgentKit changes this. It gives you everything in one place.

AgentKit has four main components that work together.

The first is Agent Builder, a visual tool for designing agent workflows.

Instead of writing code, you drag boxes onto a canvas and connect them.

Each box is a different step in what your agent does.

Agent boxes - These are AI agents for specific tasks. You write instructions for what each one does, give it tools to use, connect it to your data.

Tool boxes - These connect to file search, APIs, databases, or MCP servers. Any external thing your agent needs to access.

Guardrail boxes - These are safety filters. They can block personal information, catch attempts to trick the agent, or filter inappropriate content.

Logic boxes - These handle if/then decisions. "If the user asks about X, go to agent A. If they ask about Y, go to agent B."

Approval boxes - These stop and ask a human before doing something. Good for sensitive actions like spending money or sharing data.

Widget boxes - These create custom response formats, like showing data in a table, displaying a map, or creating an interactive form.
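Under the canvas, a workflow like this is essentially a small graph of typed steps. The sketch below models four of the box types (guardrail, logic, approval, agent) as plain TypeScript data and walks the graph from a start node. It is not Agent Builder's actual format or the Agents SDK; every node name and the stubbed agent replies are hypothetical.

```typescript
// Four of the box types above, modeled as a discriminated union (hypothetical format).
type WorkflowNode =
  | { kind: "guardrail"; id: string; blocks: RegExp; next: string }
  | { kind: "logic"; id: string; route: (input: string) => string }
  | { kind: "approval"; id: string; approve: (input: string) => boolean; next: string }
  | { kind: "agent"; id: string; respond: (input: string) => string };

// Walk the graph until an agent answers or a step stops the run.
function runWorkflow(nodes: WorkflowNode[], startId: string, input: string): string {
  const byId = new Map(nodes.map((n) => [n.id, n] as const));
  let current = byId.get(startId);
  let steps = 0;
  while (current !== undefined && steps++ < 20) { // step cap guards against cycles
    switch (current.kind) {
      case "guardrail":
        if (current.blocks.test(input)) return "Blocked by guardrail";
        current = byId.get(current.next);
        break;
      case "logic":
        current = byId.get(current.route(input));
        break;
      case "approval":
        if (!current.approve(input)) return "Stopped: human did not approve";
        current = byId.get(current.next);
        break;
      case "agent":
        return current.respond(input);
    }
  }
  return "Workflow ended without an answer";
}

// Hypothetical support flow: screen input, route by topic, require approval for refunds.
const workflow: WorkflowNode[] = [
  { kind: "guardrail", id: "screen", blocks: /\b\d{3}-\d{2}-\d{4}\b/, next: "router" },
  { kind: "logic", id: "router", route: (q) => (/refund/i.test(q) ? "approve" : "faq") },
  { kind: "approval", id: "approve", approve: () => true, next: "billing" },
  { kind: "agent", id: "billing", respond: () => "Billing agent: refund started" },
  { kind: "agent", id: "faq", respond: () => "FAQ agent: here is the answer" },
];

console.log(runWorkflow(workflow, "screen", "I want a refund"));
```

Representing each box as data with an explicit `next` is what makes the same workflow easy to draw on a canvas and to export as code.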

You start with a blank canvas.

Add the boxes you need.

Connect them in order.

Click on each box to configure what it does.

Run a test conversation to see if it works.

Adjust anything that's wrong.

Run it again.

Keep going until it works right.

Real example from the conference:

Someone built an agent live on stage in under 8 minutes.

It could answer questions about the DevDay schedule, show session details in a nice format, and respond in a specific style.

All using Agent Builder.

Companies like Ramp said Agent Builder let them build in hours what used to take months.

When to use code instead:

You can export your workflow to code if you need to customize something the visual tool can't do.

Or if you want to integrate it into existing code.

The workflow gets an ID either way, so you can switch between visual and code approaches.

Connector Registry is an admin panel for managing all your data connections in one place.

What it connects:

Why this matters:

Without Connector Registry, every team rebuilds the same connections.

Your support team connects to Google Drive.

Your sales team connects to Google Drive.

Your product team connects to Google Drive.

Three times, three different ways.

With Connector Registry, you connect once.

It's available everywhere across your company's ChatGPT and API usage.

What admins can control:

For big companies with lots of teams and lots of data, this saves a huge amount of time and keeps things secure.
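The "connect once, use everywhere" idea boils down to one shared registry that teams look up instead of each configuring its own connection, with an admin deciding who gets access. A minimal sketch with hypothetical class, connector, and team names; it says nothing about how Connector Registry is actually implemented.

```typescript
// One shared record per data source, configured once by an admin (hypothetical shape).
type Connector = { id: string; source: string; allowedTeams: Set<string> };

class ConnectorRegistry {
  private connectors = new Map<string, Connector>();

  // An admin registers a connection a single time.
  register(id: string, source: string, allowedTeams: string[]): void {
    this.connectors.set(id, { id, source, allowedTeams: new Set(allowedTeams) });
  }

  // Every team reuses the same connector, but only if the admin granted access.
  get(id: string, team: string): Connector {
    const connector = this.connectors.get(id);
    if (!connector) throw new Error(`No connector registered with id "${id}"`);
    if (!connector.allowedTeams.has(team)) {
      throw new Error(`Team "${team}" is not allowed to use "${id}"`);
    }
    return connector;
  }
}

const registry = new ConnectorRegistry();
registry.register("drive", "Google Drive", ["support", "sales", "product"]);
console.log(registry.get("drive", "support").source);
```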

ChatKit is a pre-built chat interface you can drop into your app.

What it handles for you:

Building a good chat interface is harder than it looks.

You need to handle responses that stream in a few tokens at a time. Save conversation history.

Show when the AI is thinking. Display rich content like images or interactive widgets.

Work on phones and computers.

ChatKit does all of this. You just add it to your app and customize the colors and branding.
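To see what the streaming part involves: a reply arrives as a sequence of small text chunks that get appended to the in-progress message as they land, and the finished message is saved to history when the stream ends. The sketch below shows that loop with a hypothetical `StreamEvent` shape and a fake stream. It is not ChatKit's API, which handles this for you.

```typescript
// Hypothetical events a chat backend might stream to the UI.
type StreamEvent =
  | { type: "delta"; text: string } // a chunk of the assistant's reply
  | { type: "done" };               // the reply is complete

type Message = { role: "user" | "assistant"; text: string };

// Append each chunk as it arrives (calling onUpdate so the UI can re-render),
// then commit the finished reply to the conversation history.
async function consumeStream(
  events: AsyncIterable<StreamEvent>,
  history: Message[],
  onUpdate: (partial: string) => void,
): Promise<Message> {
  let text = "";
  for await (const event of events) {
    if (event.type === "delta") {
      text += event.text;
      onUpdate(text);
    } else if (event.type === "done") {
      break;
    }
  }
  const reply: Message = { role: "assistant", text };
  history.push(reply);
  return reply;
}

// A fake stream standing in for a network response.
async function* fakeStream(): AsyncGenerator<StreamEvent> {
  for (const chunk of ["Hel", "lo, ", "how can I help?"]) {
    yield { type: "delta", text: chunk };
  }
  yield { type: "done" };
}
```

A production UI also has to handle errors mid-stream, cancellation, and rich widgets, which is the rest of what a prebuilt component saves you.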

How fast it is to use:

Canva said they built a support agent for their developer community and got it working in under an hour using ChatKit.

That saved them two weeks compared to building it themselves.

What you customize:

It works with agents from Agent Builder, or with custom agents you build yourself.

You can't make agents better if you can't measure how they're doing. AgentKit includes tools for this.

Trace grading - This shows you every decision the agent made, step by step: "It classified the request as type A. It routed to agent 1. Agent 1 called tool X. Tool X returned data Y." You see the whole chain, and you can grade those traces to pinpoint where a run went wrong.

Datasets - You create test cases. The same questions or scenarios over and over. Run your agent against them.

See where it succeeds and where it fails. This helps you find and fix problems.
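In code, the dataset idea reduces to a loop: run the agent on every stored case, record what it did, and count how many outputs match expectations. A minimal sketch, assuming a toy keyword-routing agent and an exact-match grader (both hypothetical); OpenAI's Evals tooling offers richer graders than this.

```typescript
type TestCase = { input: string; expected: string };
type TraceStep = { step: string; detail: string };
type AgentRun = { output: string; trace: TraceStep[] };

// A toy agent that records each decision it makes, like a trace.
function toyAgent(input: string): AgentRun {
  const trace: TraceStep[] = [];
  const category = /invoice|refund/i.test(input) ? "billing" : "general";
  trace.push({ step: "classify", detail: category });
  const output = category === "billing" ? "route:billing" : "route:general";
  trace.push({ step: "route", detail: output });
  return { output, trace };
}

// Run every case, keep failures (with their traces) for debugging, and report a pass rate.
function evaluate(cases: TestCase[], agent: (input: string) => AgentRun) {
  const failures: { testCase: TestCase; run: AgentRun }[] = [];
  for (const testCase of cases) {
    const run = agent(testCase.input);
    if (run.output !== testCase.expected) failures.push({ testCase, run });
  }
  const passRate = cases.length === 0 ? 0 : (cases.length - failures.length) / cases.length;
  return { passRate, failures };
}

const dataset: TestCase[] = [
  { input: "Where is my invoice?", expected: "route:billing" },
  { input: "How do I change my password?", expected: "route:general" },
  { input: "I was charged twice", expected: "route:billing" },
];

const report = evaluate(dataset, toyAgent);
console.log(report.passRate, report.failures.map((f) => f.run.trace));
```

Here the third case fails, and its trace shows the misclassification ("general") that caused it: the kind of step-by-step visibility that makes a failing case fixable.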

Automated prompt optimization - The system tries different ways of writing instructions to your agents. It finds which versions work best. This saves you from manually testing dozens of variations.

External model testing - You can test your agent using other AI models from other companies, not just OpenAI.

This helps you compare and choose the best one for your use case.

Guardrails are part of AgentKit but important enough to explain separately.

What they are:

Safety filters that check what goes into and comes out of your agents.

Types of guardrails:

PII masking - Automatically finds and hides personal information.

Names, emails, phone numbers, Social Security numbers, credit card numbers. You define what counts as sensitive.

Jailbreak detection - Catches attempts to trick your agent into doing things it shouldn't.

People try creative ways to bypass rules. Guardrails block these attempts.

Content moderation - Filters inappropriate content, harmful instructions, or things that violate your policies.

Custom rules - You can write your own. Check for anything specific to your business or use case.

In Agent Builder, you drag a guardrail box into your workflow. Put it before sensitive steps. Configure what it should catch. That's it.

Or use them as code libraries in Python or JavaScript. Add a few lines to check inputs or outputs.
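To give a feel for what "a few lines to check inputs" can look like, here is a hand-rolled input check that masks obvious PII and rejects a couple of common jailbreak phrasings. It is a hypothetical illustration built on simple regular expressions, not OpenAI's Guardrails library (whose API isn't shown here); real detectors, especially for jailbreaks, are far more robust than keyword matching.

```typescript
// Simple patterns for a few PII types (illustrative, far from exhaustive).
const piiPatterns: { label: string; pattern: RegExp }[] = [
  { label: "EMAIL", pattern: /[\w.+-]+@[\w-]+\.[\w.-]+/g },
  { label: "SSN", pattern: /\b\d{3}-\d{2}-\d{4}\b/g },
  { label: "PHONE", pattern: /\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b/g },
];

// Naive jailbreak phrasings; a production detector would use a trained classifier.
const jailbreakPatterns = [/ignore (all )?previous instructions/i, /pretend you have no rules/i];

type CheckResult = { allowed: boolean; text: string; findings: string[] };

function checkInput(text: string): CheckResult {
  if (jailbreakPatterns.some((p) => p.test(text))) {
    return { allowed: false, text, findings: ["JAILBREAK"] };
  }
  const findings: string[] = [];
  let masked = text;
  for (const { label, pattern } of piiPatterns) {
    // Replace every match with a placeholder and record what was found.
    masked = masked.replace(pattern, () => {
      findings.push(label);
      return `[${label}]`;
    });
  }
  return { allowed: true, text: masked, findings };
}

console.log(checkInput("Email me at jane.doe@example.com or call 555-123-4567").text);
```

Placing a check like this before an agent step means the model never sees the raw values, which is the same reason the text recommends putting guardrail boxes before sensitive steps.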

Performance:

Guardrails are fast. Most add 10 to 50 milliseconds. That's tiny compared to how long AI models take to think.

Open source:

OpenAI made Guardrails open source. You can see exactly how they work. Contribute improvements. Trust them because you can inspect the code.

Albertsons - They run over 2,000 grocery stores.

Store managers have to make constant decisions about promotions, inventory, displays.

They built an agent with AgentKit.

Now managers ask questions like "Why are ice cream sales down 32%?"

The agent analyzes everything and suggests what to do.

HubSpot - They built an agent called Breeze using AgentKit.

It answers customer support questions by searching their knowledge base, pulling policy details, and putting together smart answers.

Faster help for customers.

Ramp - They said Agent Builder cut their development time by 70%.

What used to take two quarters now takes two sprints.

The visual canvas kept their legal team, product team, and engineering team all aligned.

LY Corporation - A big technology company in Japan.

They built a work assistant agent in under two hours using Agent Builder.

Engineers and business people worked together in the same interface.

Available now if you have:

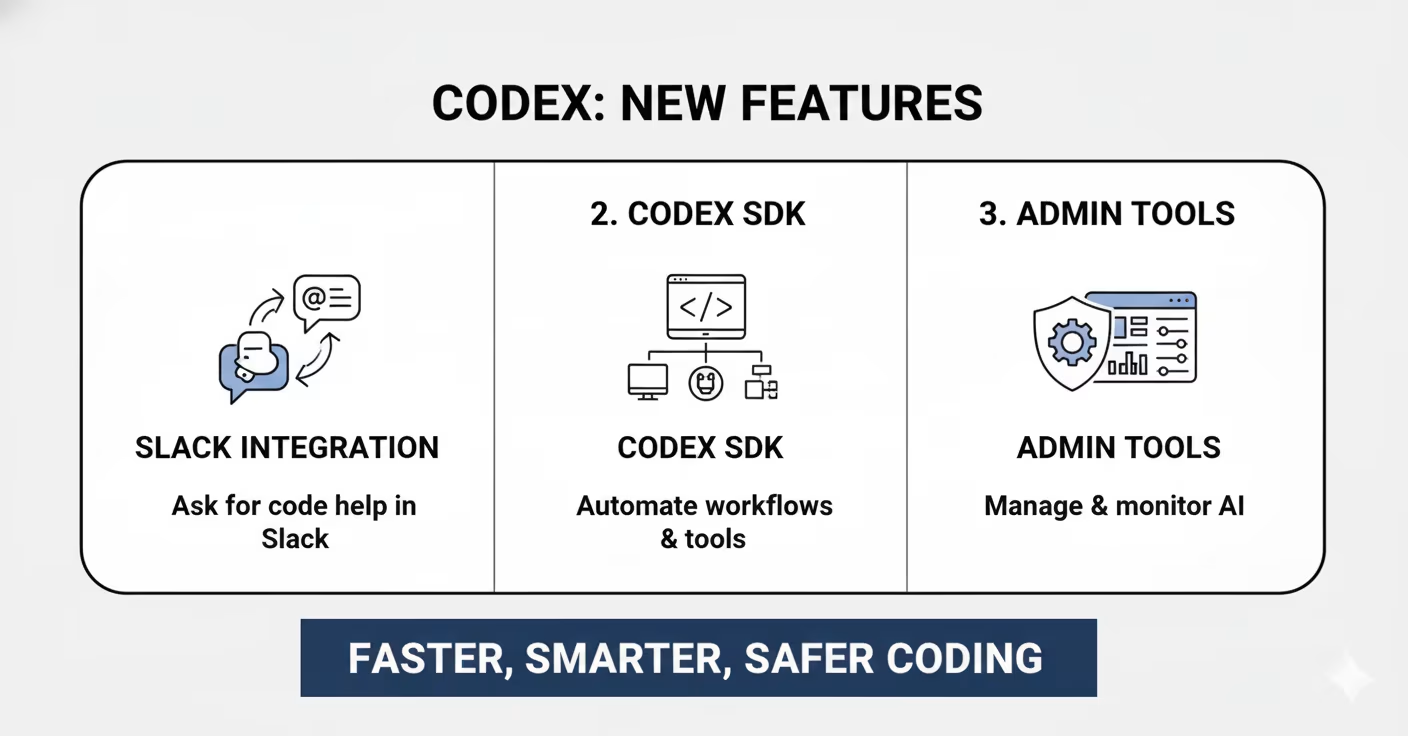

Codex has been in "research preview" since earlier this year.

Now it's officially released and generally available.

Plus three big new features.

Codex is an AI agent that helps you write code.

It works in several places:

Everything connects through your ChatGPT account. You can start work in one place and continue in another.

OpenAI shared some numbers:

Since early August, daily messages to Codex went up 10 times.

People are using it way more.

The model powering it, GPT-5-Codex, served over 40 trillion tokens in just three weeks.

That's a massive amount.

Inside OpenAI, almost every engineer uses Codex now.

They merge 70% more pull requests each week compared to before.

And Codex automatically reviews almost every pull request to catch problems.

You can now use Codex directly in Slack.

How it works:

In any Slack channel or thread, tag @Codex and ask it to do something.

"@Codex add error handling to the authentication endpoint"

Codex reads the whole thread to understand context.

It figures out which code you're talking about.

Makes the changes. Posts a link where you can review what it did.

What you can do with the results:

Click the link to see the changes in Codex Cloud.

From there you can merge the changes into your code, keep asking for more changes, or pull them down to keep working on your own computer.

Why this matters:

Your team already works in Slack.

Now coding help is right there too.

No switching to another tool.

Just ask Codex like you'd ask a teammate.

The Codex SDK (Software Development Kit) lets you use Codex in your own tools and automated workflows.

What it's for:

Example code:

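```typescript
// Requires the @openai/codex-sdk package; run as an ES module so top-level await works.
import { Codex } from "@openai/codex-sdk";

const agent = new Codex();

// A thread keeps context across multiple run() calls.
const thread = await agent.startThread();

// Each run() sends an instruction and resolves when Codex finishes that turn.
const result = await thread.run("Refactor this function");
console.log(result);
```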

That's TypeScript. Support for more programming languages is coming.

Real use case:

You can set up GitHub Actions to automatically review every pull request with Codex.

It checks for security issues, suggests improvements, makes sure the code follows your standards.

All automatic.

If you're a company using Codex, admins now have more control.

What admins can do:

Example analytics:

Dashboards show things like "daily code review issues by priority" and "sentiment of code review feedback over time."

This helps managers understand if Codex is actually helping and where teams might need training.

Codex now uses a model called GPT-5-Codex.

OpenAI trained this specifically for coding tasks.

What makes it special:

It's better at refactoring code (reorganizing and improving existing code).

It's better at reviewing code.

And it can think for different amounts of time depending on how hard the problem is.

Simple problems get quick answers.

Hard problems get more thinking time.

Cisco - They rolled out Codex to their entire engineering organization.

Code reviews now happen up to 50% faster. Projects that took weeks now take days.

Instacart - They connected the Codex SDK to their internal system called Olive.

Now Codex automatically cleans up old code nobody uses anymore, removes expired experiments, and improves performance.

Engineers focus on new features instead of cleanup.

Starting October 20, when you use Codex Cloud (the remote version), it counts against your usage limits.

Before this, it was separate.

Check the pricing page for your specific plan to see what this means for you.

OpenAI released GPT-5 in August 2025.

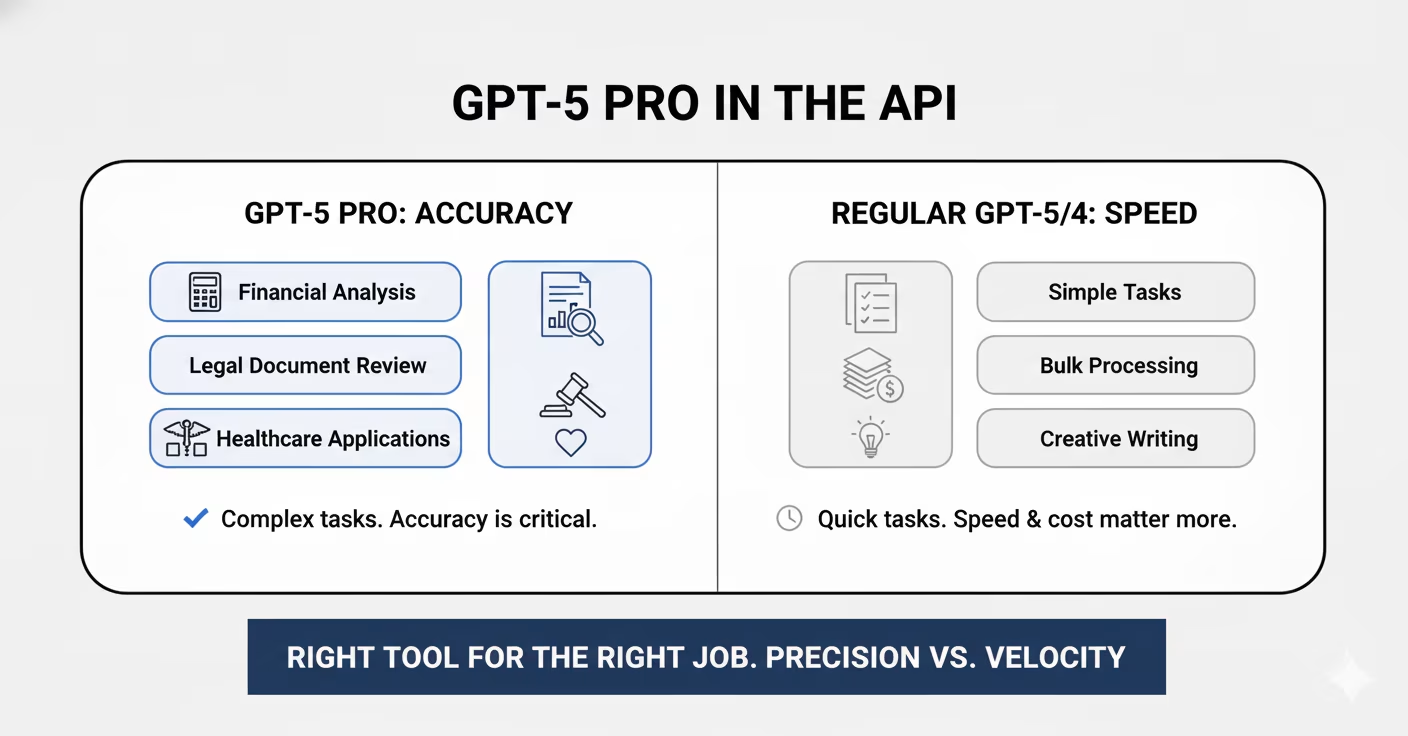

Now GPT-5 Pro is available to all developers through the API.

It's OpenAI's smartest, most capable model, built for tasks where you absolutely need accuracy and can't afford mistakes.

Use GPT-5 Pro for:

Use regular GPT-5 or GPT-4 for:

GPT-5 Pro thinks longer about problems.

It shows you its reasoning process.

It catches its own mistakes.

This all costs more and takes more time, but you get better answers.

It's in the API right now. All developers can use it.

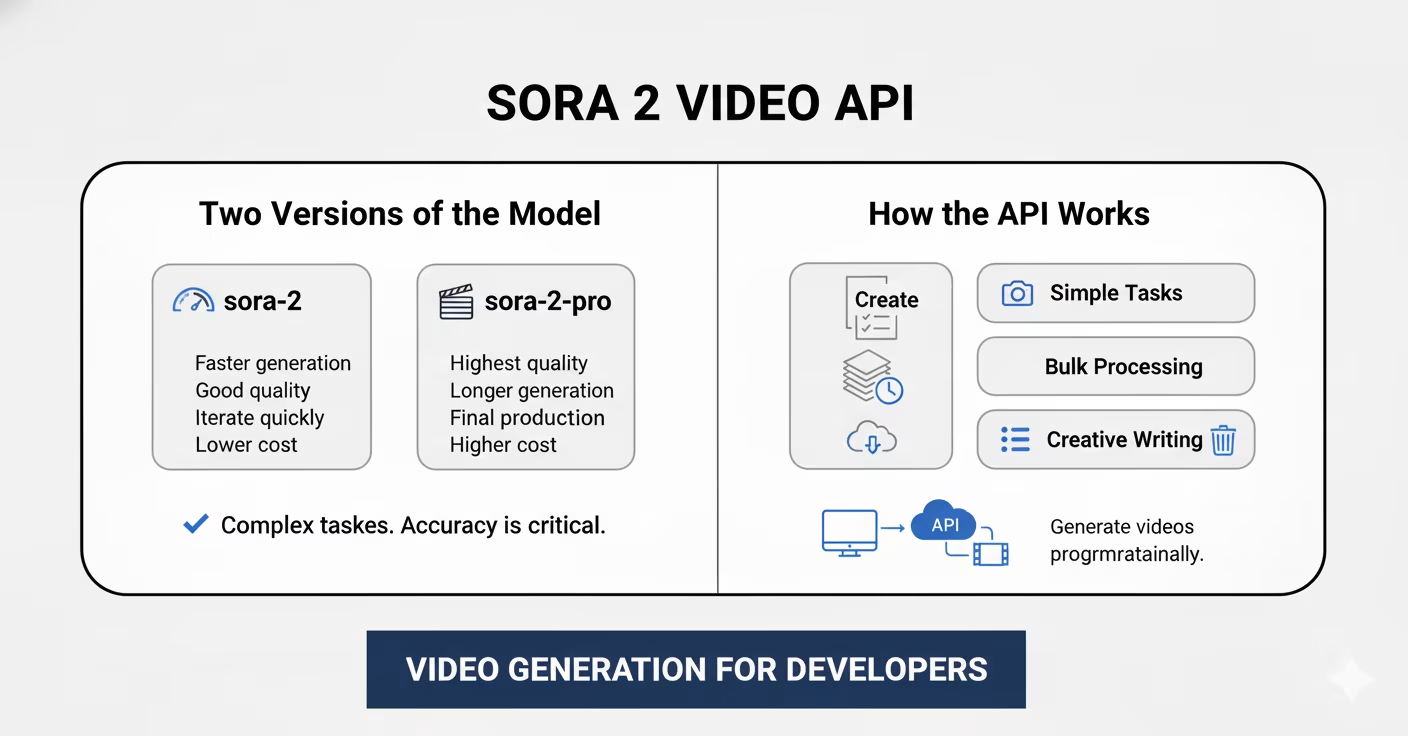

Sora 2 is OpenAI's video generation model.

It's been available in the Sora app.

Now there's an API so developers can generate videos programmatically.

sora-2 - The faster one

Good-quality results, generated quickly. Perfect for testing ideas and iterating. Costs less.

sora-2-pro - The quality one

Takes longer to generate. Costs more. But the quality is higher and more polished. Use this for final production videos.

Five different endpoints:

The process:

Generating video takes time. You send your request.

The API gives you an ID. You check the status until it says "completed." Then you download it.

Or instead of checking repeatedly, you can set up webhooks. OpenAI notifies you when it's done.
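The create-then-poll flow is a standard pattern for long-running jobs. A minimal sketch, assuming the job reports one of the statuses `queued`, `in_progress`, `completed`, or `failed`. The `fetchStatus` function is injected (and hypothetical) so the loop can be shown without network calls; in real code it would wrap a request to the video status endpoint, so check OpenAI's Videos API reference for the exact paths and fields.

```typescript
type VideoStatus = "queued" | "in_progress" | "completed" | "failed";

// Resolve after ms milliseconds.
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Poll until the job finishes, doubling the wait between checks (capped) and giving up eventually.
async function waitForVideo(
  videoId: string,
  fetchStatus: (id: string) => Promise<VideoStatus>, // hypothetical: wraps the status request
  { initialDelayMs = 2000, maxDelayMs = 30000, maxAttempts = 60 } = {},
): Promise<void> {
  let delay = initialDelayMs;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const status = await fetchStatus(videoId);
    if (status === "completed") return; // now safe to download the content
    if (status === "failed") throw new Error(`Video ${videoId} failed to generate`);
    await sleep(delay);
    delay = Math.min(delay * 2, maxDelayMs); // exponential backoff, capped
  }
  throw new Error(`Video ${videoId} still not ready after ${maxAttempts} checks`);
}
```

Backing off keeps you from hammering the API during a multi-minute render. Webhooks remove the loop entirely: your server is notified once, when the job finishes.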

Every video comes with audio.

Not just any audio - synchronized audio that matches what's happening.

Dialogue, sound effects, background noise, all fitting together.

Image references - You can upload an image as the starting frame.

Sora 2 animates from there. Useful for brand consistency or specific characters.

Remix - Take an existing video and change specific things. "Make the sky purple" or "add a second character."

It modifies instead of starting over.

Parameters you control:

The API blocks certain things:

If you try to generate these, the request fails with an error message.

Mattel, the toy company, uses the Sora 2 API. Their designers upload sketches of new toy ideas.

Sora 2 generates animated videos showing how the toy would look and move.

They can show these to decision-makers before building expensive physical prototypes.

The API is in preview. It works, but expect it to improve over time. New features will be added. Performance will get better.

The new gpt-realtime-mini is a voice model that's smaller and cheaper than the existing realtime model.

It's 70% less expensive than the large realtime model, with the same voice quality and expressiveness. Just cheaper.
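To make the 70% figure concrete: if the large model costs P for some amount of usage, the mini model costs 0.3 × P for the same usage. The sketch below only does that arithmetic. The price and monthly volume are placeholders, not OpenAI's published rates, so plug in the numbers from the current pricing page.

```typescript
// Placeholder price per 1M audio tokens for the large realtime model (NOT a published rate).
const largePricePerMillion = 10;
// "70% less expensive" means the mini model costs 30% of the large model's price.
const miniPricePerMillion = largePricePerMillion * 0.3;

// Cost of a month's usage, given a price per million tokens.
function monthlyCost(pricePerMillion: number, tokensPerMonth: number): number {
  return (pricePerMillion * tokensPerMonth) / 1_000_000;
}

const tokensPerMonth = 500_000_000; // hypothetical workload
const largeCost = monthlyCost(largePricePerMillion, tokensPerMonth);
const miniCost = monthlyCost(miniPricePerMillion, tokensPerMonth);
console.log(`large: $${largeCost.toFixed(2)}, mini: $${miniCost.toFixed(2)}`);
```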

All the same capabilities:

This makes voice features affordable for:

Use Mini for: Most voice applications, especially if you're doing it at scale and cost matters.

Use the large realtime model for: When you need absolutely the best quality possible and cost isn't the main concern

Live in the API right now. Start using it today.

Look at how these pieces connect:

Apps SDK lets you reach 800 million users.

AgentKit lets you build complex agents to power those apps.

Codex helps you write the code faster.

GPT-5 Pro gives you intelligence for hard problems.

Sora 2 lets you generate video content.

Realtime Mini makes voice affordable.

Everything works together to let you build and ship sophisticated software faster than before.

You can start using today:

Expected in the remaining months of 2025:

If you're a developer:

Pick one thing from this list.

Try it this week. Build something small but real.

Learn how it actually works instead of just reading about it.

Agent Builder is probably the easiest to start with.

You can build something in an hour without writing code.

If you run a company:

Think about one specific problem your business has. Could an agent solve it?

Could an app in ChatGPT reach new customers?

Run a small pilot. Measure the results. Expand what works.

If you're just interested:

Watch for apps appearing in ChatGPT.

Try them when they match what you're doing.

See how different this feels from traditional software.

The shift is happening. The tools are real. The question is what gets built with them.