Let’s be real: ChatGPT is both amazing and frustrating.

It can explain quantum physics in plain English, draft your marketing copy in seconds, or even debug your code.

But it also confidently spits out wrong answers — known as AI hallucinations.

OpenAI recently published a research paper claiming they’ve found a mathematical way to reduce hallucinations. Sounds great, right?

Except… their solution might actually break the very thing that makes ChatGPT so useful in the first place.

Let me walk you through what’s going on — and why fixing hallucinations could end up killing ChatGPT for everyday users.


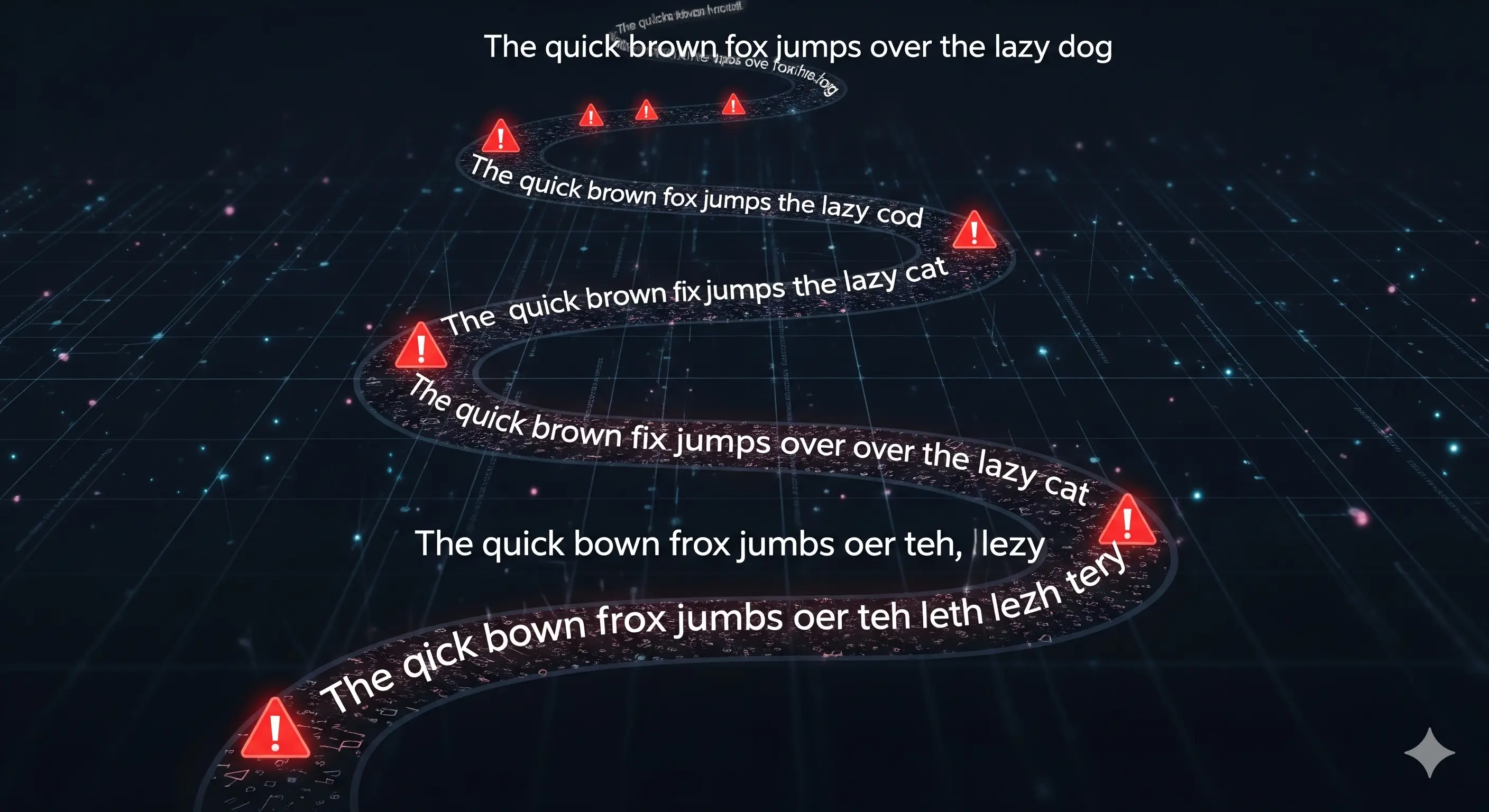

When we talk about “AI hallucinations,” we don’t mean the AI is tripping on digital mushrooms. It’s when the model confidently makes things up.

Examples you’ve probably seen:

For consumers, this can be funny.

For businesses, it’s a dealbreaker.

Imagine your AI assistant inventing a tax regulation or hallucinating a medical guideline — the costs are huge.

Why do models hallucinate in the first place? The answer comes down to how large language models work.

Even with perfect training data, hallucinations can’t be fully eliminated.

The researchers proved mathematically that hallucination rates are baked into the very way language models generate sentences.

Here’s a simple test they ran: ask a state-of-the-art model for the birthday of Adam Kalai, one of the paper’s authors.

The results?

Three different, confidently stated wrong answers:

The actual birthday is in the autumn. None of these were even close.

The takeaway: if a fact rarely appeared in the training data, the model is more likely to make something up than to admit uncertainty.

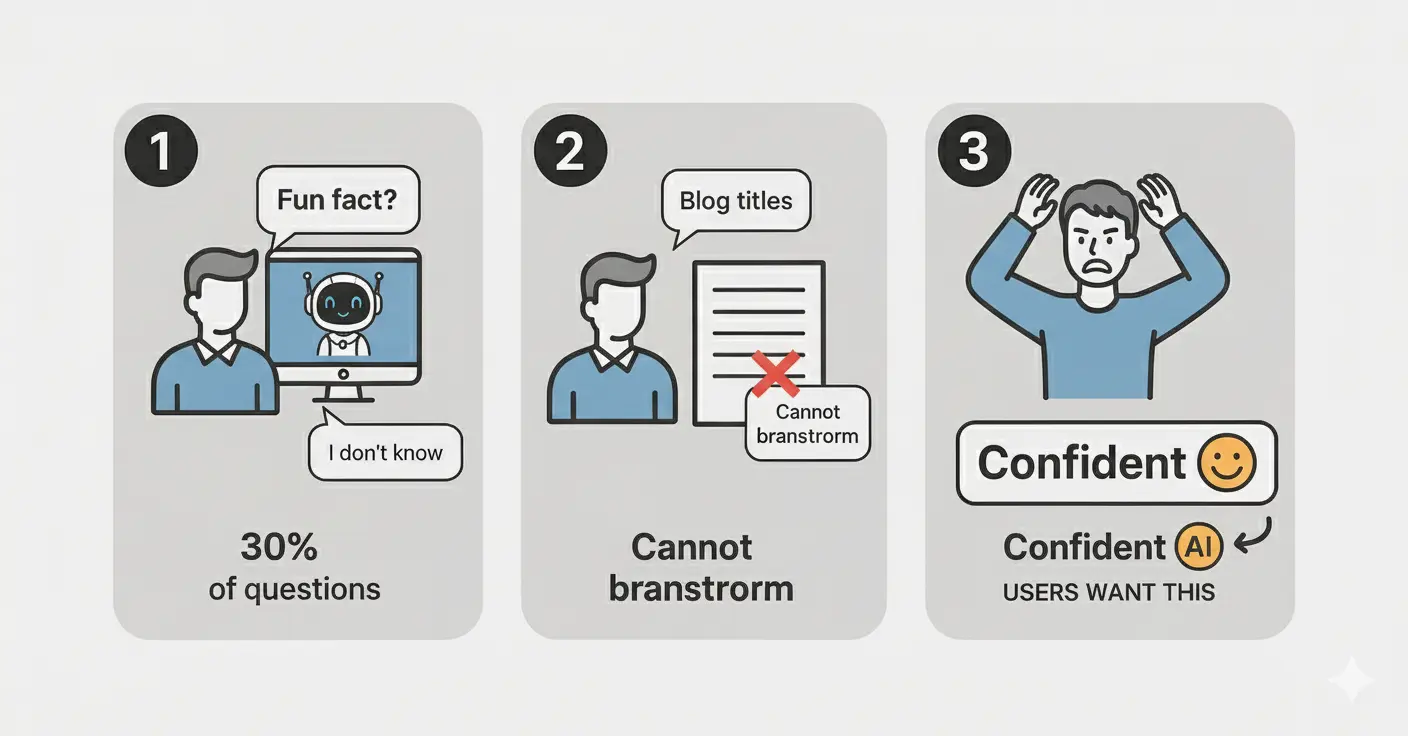

So why haven’t companies fixed this already? Turns out, it’s a problem with how we measure AI performance.

Most benchmarks use binary grading: 1 point for a correct answer, 0 points for a wrong one.

Here’s the catch: if the AI says “I don’t know”, it also gets 0 points.

Mathematically, the optimal strategy becomes clear: always guess. Even a wild guess has a chance of being right, while saying nothing guarantees a zero.

In other words, our current evaluation systems penalize honesty.
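
The arithmetic behind that claim is short. Here is a minimal sketch, assuming the binary grading described above (1 point for a correct answer, 0 for a wrong answer or an "I don't know"). The function name `expected_score` is purely illustrative and does not come from the paper.

```python
def expected_score(p_correct: float, abstain: bool) -> float:
    """Expected benchmark score under binary grading.

    Assumed rule: correct = 1 point, wrong = 0 points, "I don't know" = 0 points.
    """
    if abstain:
        return 0.0
    # A guess earns 1 point with probability p_correct and 0 otherwise.
    return p_correct * 1.0 + (1.0 - p_correct) * 0.0


if __name__ == "__main__":
    for p in (0.01, 0.25, 0.9):
        guess = expected_score(p, abstain=False)
        silent = expected_score(p, abstain=True)
        print(f"p_correct={p}: guess={guess}, abstain={silent}")
```

Even at a 1% chance of being right, guessing scores higher on average than abstaining, so a model tuned to maximize this kind of benchmark learns to guess.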

OpenAI’s solution is to make models confidence-aware.

Instead of answering everything, the AI would be trained to say:

This way, the model avoids blurting out nonsense. The math checks out: fewer hallucinations, more reliable outputs.
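
To see why a confidence threshold changes the incentives, consider a hedged sketch of one possible scoring rule: a correct answer earns 1 point, "I don't know" earns 0, and a wrong answer costs `t / (1 - t)` points for a threshold `t`. The penalty formula, the 0.75 default, and all function names here are illustrative assumptions, not details stated in this article.

```python
def penalty_for_wrong(threshold: float) -> float:
    """Assumed penalty for a wrong answer: t / (1 - t)."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be strictly between 0 and 1")
    return threshold / (1.0 - threshold)


def expected_score_if_answering(confidence: float, threshold: float) -> float:
    """Expected score of answering: +1 if right, minus the penalty if wrong."""
    return confidence - (1.0 - confidence) * penalty_for_wrong(threshold)


def should_answer(confidence: float, threshold: float = 0.75) -> bool:
    """Answer only when doing so beats the 0 points earned by abstaining."""
    return expected_score_if_answering(confidence, threshold) > 0.0


if __name__ == "__main__":
    for c in (0.5, 0.75, 0.9):
        print(c, should_answer(c))
```

Under this assumed rule, answering only pays off when the model's confidence exceeds the threshold, so "always guess" stops being the best strategy.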

Sounds like a win… until you think about what that means for ChatGPT.

Imagine firing up ChatGPT and suddenly:

That’s what would happen if ChatGPT adopted this fix.

And here’s the brutal truth: people don’t want an honest AI — they want a confident one. Even if that confidence is sometimes misplaced.

There’s another hidden issue: computation costs.

Making AI confidence-aware isn’t cheap. To decide if it’s 75% confident, the model has to:

For enterprise use cases like chip design or medical analysis, that’s worth it.

For consumer chatbots used by millions every day?

The economics don’t work.

A confidence-aware ChatGPT would be slower and more expensive to run.
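
To make the cost argument concrete, here is a rough sketch of one common way to estimate confidence: ask the same question several times and measure how often the answers agree. This is an assumption for illustration, not necessarily the method in OpenAI's paper. The `ask` callable stands in for a hypothetical model call, and the sample count and threshold are arbitrary.

```python
from collections import Counter
from typing import Callable


def answer_with_confidence(
    ask: Callable[[str], str],  # hypothetical stand-in for a model call
    question: str,
    n_samples: int = 5,
    threshold: float = 0.75,
) -> tuple[str, float, int]:
    """Return (answer, estimated confidence, number of model calls made)."""
    # Every sample is a full model call, so cost grows linearly with n_samples.
    samples = [ask(question) for _ in range(n_samples)]
    top_answer, count = Counter(samples).most_common(1)[0]
    confidence = count / n_samples
    # Abstain when the samples do not agree often enough.
    answer = top_answer if confidence >= threshold else "I don't know"
    return answer, confidence, n_samples
```

With five samples per question, each reply costs roughly five times the compute of a single answer, which is the kind of multiplier that is tolerable for a chip design review but hard to absorb across millions of casual chats.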

Think about why people love ChatGPT right now:

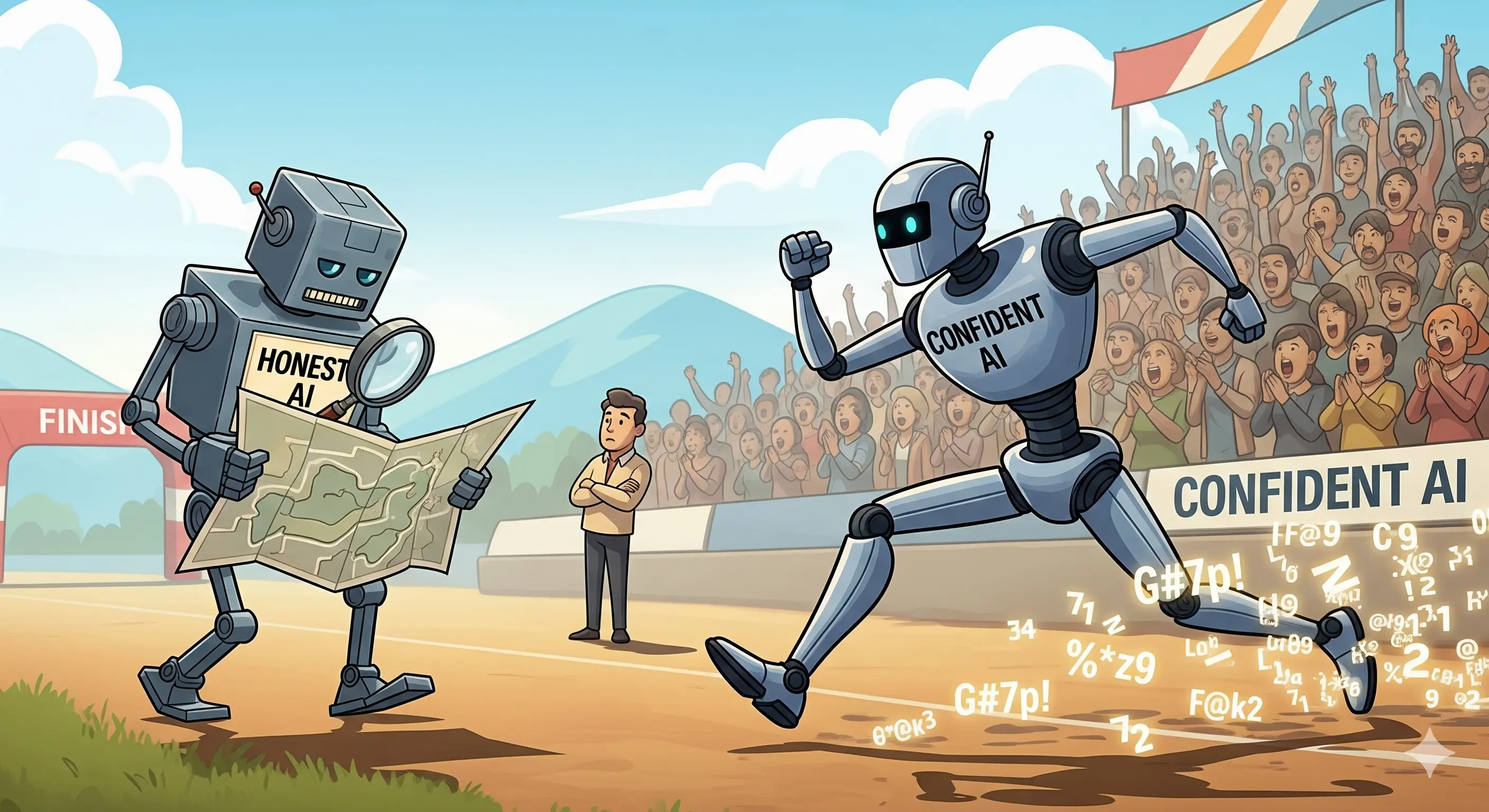

If OpenAI made it cautious, hesitant, and full of “I don’t know”, most casual users would abandon it.

Competitors who kept their models fast and confident — even with some hallucinations — would win the market.

This is the paradox: the fix for hallucinations is also a potential death sentence for ChatGPT as we know it.

Does that mean OpenAI’s fix is useless? Not at all.

For high-stakes enterprise systems, hallucinations are unacceptable. Think:

In these contexts, accuracy is worth the extra computation. Confidence-aware AI is the only viable path.

But for casual consumer apps, the cost and friction outweigh the benefits.

Here’s the core issue nobody wants to admit:

Until these incentives change, hallucinations won’t fully go away in consumer AI. The economics and user psychology both push models to be overconfident.

OpenAI’s research proves hallucinations can be reduced — but at a price.

If ChatGPT started saying “I don’t know” regularly, the very thing people love about it would disappear.

What’s likely to happen is a split:

So, is OpenAI’s plan a breakthrough or a death sentence?

The truth is, it’s both. It’s a technical win, but a user experience nightmare.

If you’re building AI into your business, you need to decide: do you want confidence at scale, or caution at a cost?

And if you want practical ways to work with both, check out my Complete AI Bundle — with 30,000+ prompts and templates designed for reliability in real-world use cases.