What Are Agentic LLMs? A Comprehensive Technical Guide

LLMs used to answer questions. Now they complete tasks.

Agentic LLMs don’t stop at one response — they plan, act, and use tools to reach goals on their own.

They’re changing how we build with AI. And if you’re working on anything serious with language models, you need to know how they work.

What Exactly Is an Agentic LLM?

An agentic LLM is a language model set up to act as an autonomous agent: given a goal, it chooses actions, sees what happens, and decides what to do next.

It doesn’t just generate text. It pursues a goal.

Instead of one-and-done outputs, it:

• Plans multi-step tasks

• Makes decisions

• Uses tools like browsers or code interpreters

• Adjusts its strategy based on results

It’s not just answering — it’s acting with intent.

Passive vs Agentic LLMs: The Core Difference

Here’s the key shift:

• Passive LLMs: You prompt, they respond. Done.

• Agentic LLMs: You give a goal — they break it down, take action, and keep going until it’s complete.

Passive is static.

Agentic is dynamic, iterative, and goal-driven.

This change turns LLMs into real problem-solvers — not just content machines.

How Agentic LLMs Work: A High-Level System Overview

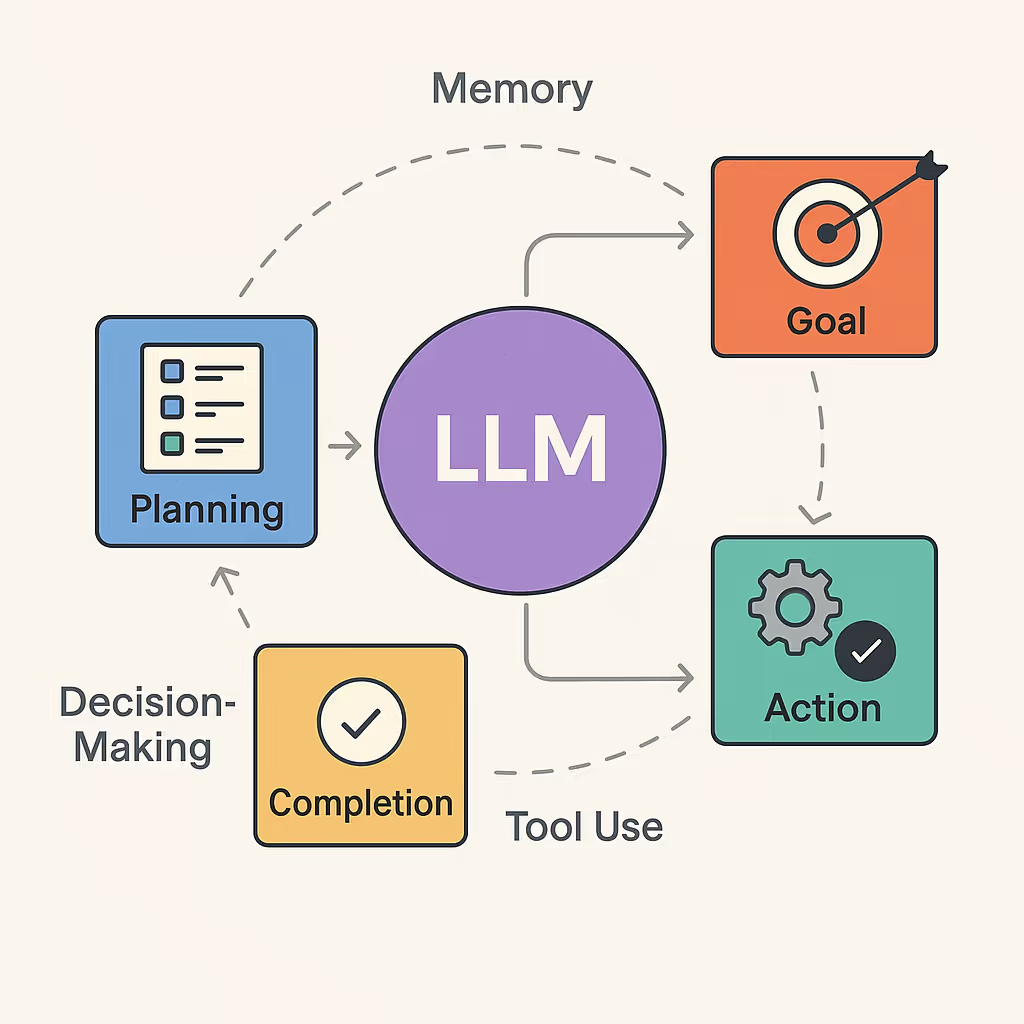

At a high level, an agentic system works like this:

1. Goal: You give the model an objective (e.g. “Research market trends”).

2. Planning: It breaks that down into steps.

3. Action: It takes those steps — using tools, making decisions.

4. Observation: It checks results and adjusts.

5. Completion: It repeats steps 2 through 4 until the task is done or a stopping condition (such as a step limit) is reached.

The core LLM stays at the center. Around it is a framework that supports memory, tool use, and decision-making.
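The five steps above can be sketched as a small loop. This is a minimal illustration, not a reference implementation: `AgentState`, `run_agent`, and the `toy_*` functions are hypothetical names, and the toy functions stand in for real model and tool calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentState:
    goal: str
    plan: list[str] = field(default_factory=list)
    observations: list[str] = field(default_factory=list)
    done: bool = False


def run_agent(
    goal: str,
    plan: Callable[[str], list[str]],
    act: Callable[[str], str],
    is_done: Callable[[AgentState], bool],
    max_steps: int = 10,
) -> AgentState:
    state = AgentState(goal=goal, plan=plan(goal))  # steps 1-2: goal and planning
    for _ in range(max_steps):                      # hard cap so the loop always ends
        if not state.plan:
            break
        step = state.plan.pop(0)
        state.observations.append(act(step))        # steps 3-4: action and observation
        if is_done(state):                          # step 5: completion check
            state.done = True
            break
    return state


# Toy stand-ins (assumptions): a real system would call an LLM and real tools here.
def toy_plan(goal: str) -> list[str]:
    return [f"search: {goal}", f"summarize: {goal}"]


def toy_act(step: str) -> str:
    return f"result of <{step}>"


def toy_is_done(state: AgentState) -> bool:
    return not state.plan  # done once every planned step has run


final = run_agent("Research market trends", toy_plan, toy_act, toy_is_done)
print(final.done, len(final.observations))
```

The sketch leaves out the "adjusts" part of step 4. A fuller version would let the model rewrite `state.plan` after each observation instead of following the original plan to the end.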

Memory in Agentic Systems: The Engine of Context Persistence

Agentic LLMs rely on memory to carry context from one step to the next.

There are usually three layers:

• Short-term memory: Keeps track of the current conversation or task.

• Working memory: Holds results, decisions, or interim actions during execution.

• Long-term memory: Stores facts, user preferences, or learnings across sessions.

Without memory, agents lose track. With it, they adapt and improve over time.
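One way to picture the three layers is as a single object with three containers. The sketch below is an assumption about how such a structure could look: `AgentMemory` and its methods are hypothetical, and plain dicts stand in for whatever store a real system would use for long-term memory.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    # Short-term: the most recent turns, capped so the oldest ones fall off.
    short_term: deque = field(default_factory=lambda: deque(maxlen=5))
    # Working: intermediate results for the task currently in progress.
    working: dict = field(default_factory=dict)
    # Long-term: facts meant to survive across sessions (a dict stands in for a real store).
    long_term: dict = field(default_factory=dict)

    def remember_turn(self, text: str) -> None:
        self.short_term.append(text)

    def end_task(self, keep=()) -> None:
        """Promote selected working results to long-term memory, then clear the scratch space."""
        for key in keep:
            if key in self.working:
                self.long_term[key] = self.working[key]
        self.working.clear()


memory = AgentMemory()
for i in range(7):
    memory.remember_turn(f"turn {i}")  # only the last 5 turns are retained

memory.working["preferred_format"] = "bullet summary"
memory.working["draft"] = "first pass at the report"
memory.end_task(keep=["preferred_format"])

print(list(memory.short_term))
print(memory.long_term)
```

The explicit `end_task` step reflects one possible design choice: nothing reaches long-term memory unless something decides it is worth keeping.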

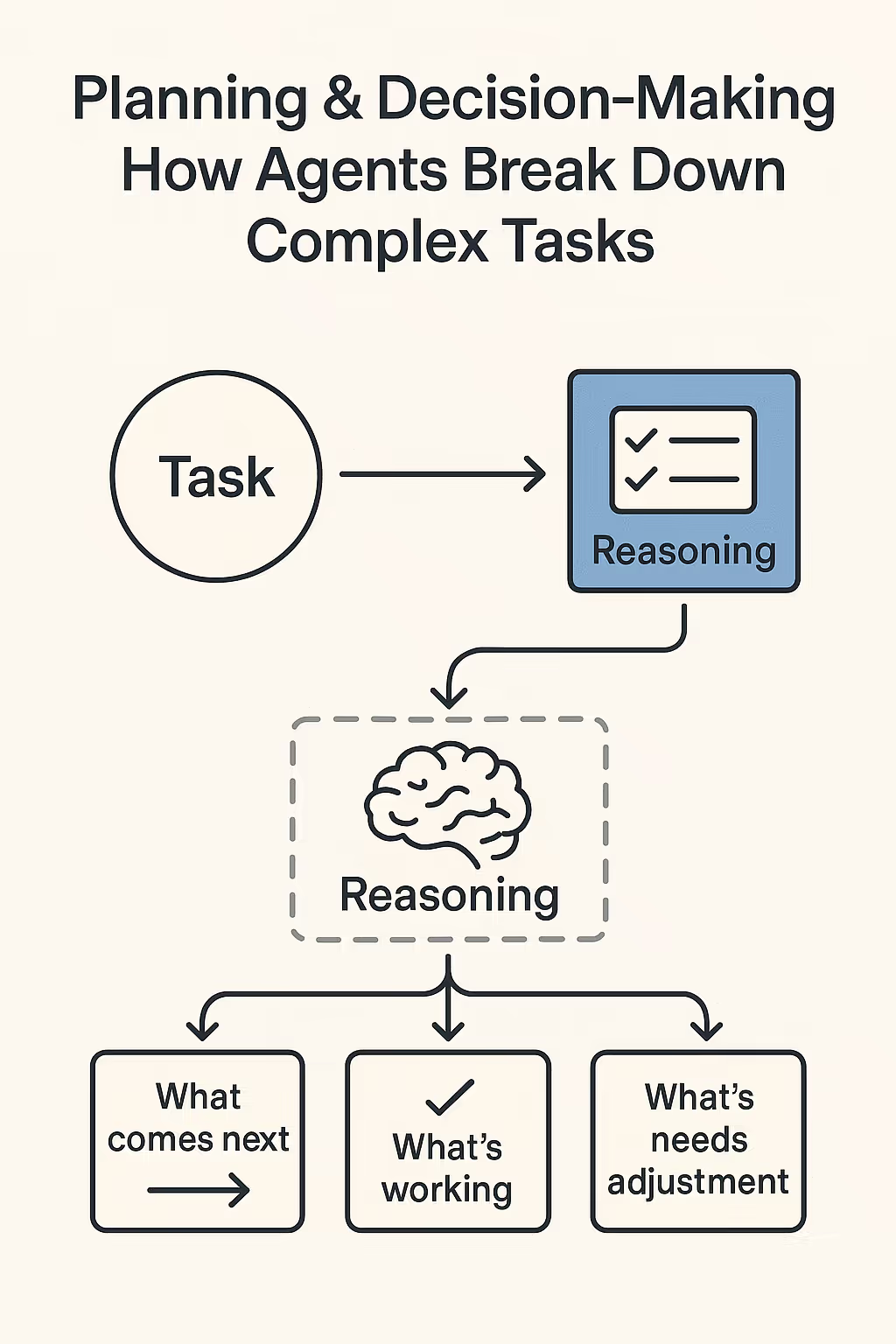

Planning & Decision-Making: How Agents Break Down Complex Tasks

Agentic systems don’t guess what to do next — they reason through it.

There are two styles of planning:

• Implicit planning: The model decides one step at a time.

• Explicit planning: It outlines all steps upfront, then executes them in order.

In both cases, the model uses techniques such as chain-of-thought reasoning or scratchpads to figure out:

• What comes next

• What’s working

• What needs adjustment

This is how agents move from “respond” to “solve.”
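The difference between the two planning styles shows up in where the loop asks for the next step. The sketch below assumes hypothetical `planner` and `next_step` callables in place of model calls, and "executing" a step is reduced to recording it.

```python
def explicit_plan(goal, planner):
    """Ask for the full plan once, then execute every step in order."""
    steps = planner(goal)
    return [f"did {step}" for step in steps]


def implicit_plan(goal, next_step, max_steps=10):
    """Ask for one step at a time, showing the model what has happened so far."""
    history = []
    for _ in range(max_steps):
        step = next_step(goal, history)
        if step is None:  # the model signals there is nothing left to do
            break
        history.append(f"did {step}")
    return history


# Toy stand-ins for model calls (assumptions for illustration only).
STEPS = ["gather sources", "compare findings", "write summary"]


def toy_planner(goal):
    return list(STEPS)


def toy_next_step(goal, history):
    return STEPS[len(history)] if len(history) < len(STEPS) else None


print(explicit_plan("Research market trends", toy_planner))
print(implicit_plan("Research market trends", toy_next_step))
```

With these toy functions both styles produce the same trace. They diverge in practice because the implicit version sees `history` before each decision and can change course, while the explicit version commits up front.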

Tool Use: How Agents Extend Beyond Language

One of the biggest upgrades in agentic LLMs is their ability to use tools.

This means the model can:

• Run code

• Search the web

• Query databases

• Use APIs (like calendars, file systems, or calculators)

Instead of trying to generate everything from training data, the model can pause, request a tool call, and continue with the real result.

Tool use turns a smart model into a capable assistant.

Without it, the agent is limited to what it absorbed in training. With it, it can operate in the real world.
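A common way to wire this up is a registry of named functions plus a dispatcher that inspects the model's output. The sketch below assumes the model requests a tool by emitting JSON of the form `{"tool": ..., "args": {...}}`. That format, the `TOOLS` registry, and `dispatch` are illustrative assumptions, not any particular vendor's API.

```python
import json


def add(a: float, b: float) -> float:
    return a + b


def word_count(text: str) -> int:
    return len(text.split())


# Registry of tools the agent may call (hypothetical examples).
TOOLS = {"add": add, "word_count": word_count}


def dispatch(model_output: str) -> str:
    """Run the requested tool if the output is a tool call; otherwise return the text unchanged."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return model_output  # plain text answer, no tool requested
    if not isinstance(call, dict) or "tool" not in call:
        return model_output
    tool = TOOLS.get(call["tool"])
    if tool is None:
        return f"error: unknown tool {call['tool']!r}"
    return str(tool(**call.get("args", {})))


print(dispatch('{"tool": "add", "args": {"a": 2, "b": 3}}'))
print(dispatch('{"tool": "word_count", "args": {"text": "agents call tools"}}'))
print(dispatch("The answer is already known."))
```

In a full agent, the string returned by `dispatch` would be fed back to the model as the observation for its next step.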

Reactive vs Deliberative Agents: Two Modes of Thinking

Agentic LLMs don’t all behave the same. There are two major types:

• Reactive agents: They respond directly to input without thinking ahead. Simple, fast, but limited.

• Deliberative agents: They think before acting. They plan, revise, and reason through each step.

Many advanced systems today are deliberative, using chain-of-thought reasoning to handle more complex workflows.

Why it matters:

Deliberative agents are slower, but far more capable. They can handle uncertainty, change direction, and make better decisions over time.
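As a rough illustration of the two modes, the sketch below contrasts a lookup-style reactive agent with a deliberative one that scores its options first. The function names, the rule table, and the hard-coded scores are all assumptions. In a real system the scoring would come from model reasoning, not a fixed table.

```python
def reactive_agent(observation, rules):
    """Map the observation straight to an action, with no lookahead."""
    return rules.get(observation, "ask_for_clarification")


def deliberative_agent(observation, candidates, estimate_value):
    """Score every candidate action before committing to one."""
    scored = [(estimate_value(observation, action), action) for action in candidates]
    _, best_action = max(scored)
    return best_action


# Toy inputs (assumptions for illustration only).
RULES = {"build failed": "retry_build"}
CANDIDATES = ["retry_build", "read_error_log", "revert_commit"]
TOY_SCORES = {"retry_build": 0.2, "read_error_log": 0.9, "revert_commit": 0.5}


def toy_estimate(observation, action):
    return TOY_SCORES[action]


print(reactive_agent("build failed", RULES))
print(deliberative_agent("build failed", CANDIDATES, toy_estimate))
```

The deliberative version does more work per decision, which is the speed cost described above, in exchange for being able to pick an action the fixed rules never anticipated.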

Training Agentic LLMs: The Multi-Stage Process Behind Autonomy

You don’t get an agentic model out of the box. It takes multiple stages of training:

1. Pretraining: Huge datasets teach the model language and reasoning.

2. Instruction tuning: Teaches the model to follow instructions and respond to task-based prompts.

3. Reinforcement learning: Often treated as the key to agency. The model gets feedback on what it did and is updated to favor behavior that leads to better outcomes, not just better-sounding answers.

This final stage is what teaches the model to act, not just reply.

Fine-Tuning for Agency: Feedback Loops That Make It Smarter

Fine-tuning doesn’t stop at launch. In agentic systems, feedback matters.

• You observe the agent’s behavior

• You label good or bad decisions

• You retrain based on performance

This continuous loop helps the agent:

• Avoid repeated mistakes

• Improve judgment

• Handle more edge cases over time

Without this feedback loop, even the best agent will keep repeating the same mistakes and fall behind as tools and tasks change.
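The observe, label, retrain cycle starts with turning reviewed agent steps into training data. The sketch below covers only that data-preparation step. `LabeledStep`, the "good"/"bad" labels, and `build_retraining_set` are hypothetical, and the actual retraining job is out of scope.

```python
from dataclasses import dataclass


@dataclass
class LabeledStep:
    prompt: str   # what the agent saw
    action: str   # what the agent did
    label: str    # "good" or "bad", assigned by a reviewer


def build_retraining_set(steps):
    """Keep only the steps a reviewer marked as good, as (prompt, action) pairs."""
    return [(s.prompt, s.action) for s in steps if s.label == "good"]


# Toy review log (made-up examples for illustration).
review_log = [
    LabeledStep("Find Q3 revenue", "query_database", "good"),
    LabeledStep("Find Q3 revenue", "guess_from_memory", "bad"),
    LabeledStep("Summarize contract", "read_document", "good"),
]

print(build_retraining_set(review_log))
```

Steps labeled "bad" are simply dropped here. Some training setups would keep them as negative examples instead. Which approach fits depends on the training method, which the text above does not specify.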

Evaluation Metrics: Measuring Performance, Safety, and Reliability

How do you know an agentic LLM is actually working?

You monitor more than just output quality.

Key metrics include:

• Task completion rate – Did it finish what it started?

• Tool success rate – Did tool calls return valid results?

• Latency and cost – Is it efficient or bloated?

• Failure handling – What happens when it gets stuck?

• User trust signals – Are people satisfied, confused, or correcting it often?

The more complex the system, the more important these metrics become.
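Several of these metrics can be computed directly from per-run logs. The sketch below assumes a hypothetical `RunLog` record with made-up field names and toy numbers. Failure handling and user trust signals are left out because they need richer data than a single record per run.

```python
from dataclasses import dataclass


@dataclass
class RunLog:
    completed: bool
    tool_calls: int
    tool_failures: int
    latency_s: float
    cost_usd: float


def summarize(runs):
    """Aggregate per-run logs into the headline metrics."""
    if not runs:
        raise ValueError("need at least one run to summarize")
    total_calls = sum(r.tool_calls for r in runs)
    total_failures = sum(r.tool_failures for r in runs)
    return {
        "task_completion_rate": sum(r.completed for r in runs) / len(runs),
        # If no tools were called at all, treat the success rate as perfect.
        "tool_success_rate": (total_calls - total_failures) / total_calls if total_calls else 1.0,
        "mean_latency_s": sum(r.latency_s for r in runs) / len(runs),
        "mean_cost_usd": sum(r.cost_usd for r in runs) / len(runs),
    }


# Toy data (made up for illustration).
runs = [
    RunLog(True, 4, 0, 10.0, 0.25),
    RunLog(True, 2, 0, 20.0, 0.25),
    RunLog(False, 6, 3, 40.0, 0.5),
    RunLog(True, 4, 1, 10.0, 0.0),
]

print(summarize(runs))
```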

Real-World Applications: What Agentic LLMs Are Already Doing Today

Agentic LLMs aren’t theory — they’re already being used to handle serious, high-effort tasks.

Here’s where they show up:

• Research agents: Automatically search, analyze, and summarize current web data with citations

• Coding copilots: Plan, write, test, and debug code across files

• Business analysts: Connect to company data, generate reports, and follow up with next steps

• Legal & compliance tools: Read long documents, extract relevant information, and take follow-up actions

The common thread? These aren’t one-off tasks. They’re workflows — and that’s where agents thrive.

Designing Agentic Systems: Key Considerations for Builders

If you’re building with agentic LLMs, you’re not just crafting prompts — you’re designing a system.

Important questions to answer:

• How will the agent access memory?

• What tools will it be allowed to use?

• How will it plan and revise its steps?

• What happens if a tool fails or gives unexpected output?

• How will you evaluate its performance?

This is where design shifts from prompt engineering to agent architecture.
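The question about failing tools is one place where an architectural answer can be made concrete. The sketch below shows one possible policy: retry a bounded number of times, validate the output, and return a structured failure instead of raising. `call_with_retry`, the flaky lookup tool, and the "ACME" ticker are all hypothetical.

```python
def call_with_retry(tool, args, validate, max_attempts=3):
    """Call a tool, retrying on exceptions or invalid output; report failure instead of raising."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            result = tool(**args)
        except Exception as exc:  # broad on purpose: any tool error becomes a retry
            last_error = f"attempt {attempt}: {exc}"
            continue
        if validate(result):
            return {"ok": True, "result": result, "attempts": attempt}
        last_error = f"attempt {attempt}: unexpected output {result!r}"
    return {"ok": False, "error": last_error, "attempts": max_attempts}


def make_flaky_lookup():
    """Build a toy tool that times out on its first call and succeeds afterwards."""
    calls = {"n": 0}

    def lookup(ticker):
        calls["n"] += 1
        if calls["n"] == 1:
            raise TimeoutError("upstream timed out")
        return {"ticker": ticker, "price": 101.5}

    return lookup


outcome = call_with_retry(
    make_flaky_lookup(),
    {"ticker": "ACME"},
    validate=lambda r: "price" in r,
)
print(outcome)
```

Returning a dictionary with an `ok` flag means the agent loop can show the failure to the model as an observation and let it choose a different approach.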

Limitations and Open Challenges in Agentic AI

Agentic LLMs are powerful — but far from perfect. Key challenges include:

• Hallucinations: Even agents with tools can get facts wrong.

• Long-term memory reliability: Stored memories can go stale, contradict each other, or be poorly organized for retrieval.

• Error recovery: When something fails, agents don’t always know how to course-correct.

• Tool misuse: Without strong constraints, agents might overuse or misuse APIs.

These problems are why monitoring, feedback, and fail-safes matter more in agentic systems.
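For tool misuse in particular, a simple fail-safe is to put every tool call behind an allowlist and a call budget. The sketch below is one assumed design: `GuardedTools`, `ToolBudgetExceeded`, and the two toy tools are hypothetical names.

```python
class ToolBudgetExceeded(Exception):
    """Raised when the agent has used up its allotted tool calls."""


class GuardedTools:
    def __init__(self, tools, allowed, max_calls):
        self._tools = tools
        self._allowed = set(allowed)
        self._remaining = max_calls

    def call(self, name, **kwargs):
        # Check permission first so a forbidden call never consumes budget.
        if name not in self._allowed:
            raise PermissionError(f"tool {name!r} is not on the allowlist")
        if self._remaining <= 0:
            raise ToolBudgetExceeded("call budget exhausted")
        self._remaining -= 1
        return self._tools[name](**kwargs)


# Toy tools (assumptions): one harmless, one that should stay off limits.
RAW_TOOLS = {
    "search": lambda query: f"results for {query}",
    "delete_file": lambda path: None,
}

guarded = GuardedTools(RAW_TOOLS, allowed=["search"], max_calls=2)
print(guarded.call("search", query="llm agents"))
```

Both checks live outside the model, so they hold even when the model's own judgment about which tool to use is wrong.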

The Path Forward: Why Agentic LLMs Will Reshape AI Interfaces

We’re moving from “chat with an AI” to “assign tasks to an AI.”

Agentic LLMs are the foundation of this shift.

They bring structure, memory, and decision-making to AI systems — and open the door to real autonomy.

Whether you’re building internal tools, public-facing agents, or future copilots, understanding how agentic models work is no longer optional.

It’s the next evolution of AI — and it’s already here.