What is Decomposed Prompting and Why it Matters

Most prompts try to do everything in one go — and that’s where they fail.

When tasks get too complex, language models start to guess, fumble, or break down.

The result? Incomplete answers, messy logic, or just plain wrong outputs.

Decomposed prompting fixes that by breaking big problems into smaller steps.

It gives the model clear instructions for each part — and it works better because of it.

In this post, we’ll look at what decomposed prompting is, how it works, and when to use it for more reliable results.

What Is Decomposed Prompting? (In Simple Terms)

Decomposed prompting is a modular approach that breaks a big task into smaller, focused steps.

Instead of one long prompt trying to do everything, you split the work:

• Each part handles one job

• The system follows a step-by-step path

• You get more reliable, accurate results

This method turns complex tasks into structured flows — just like breaking down a project into smaller tasks your team can handle better.

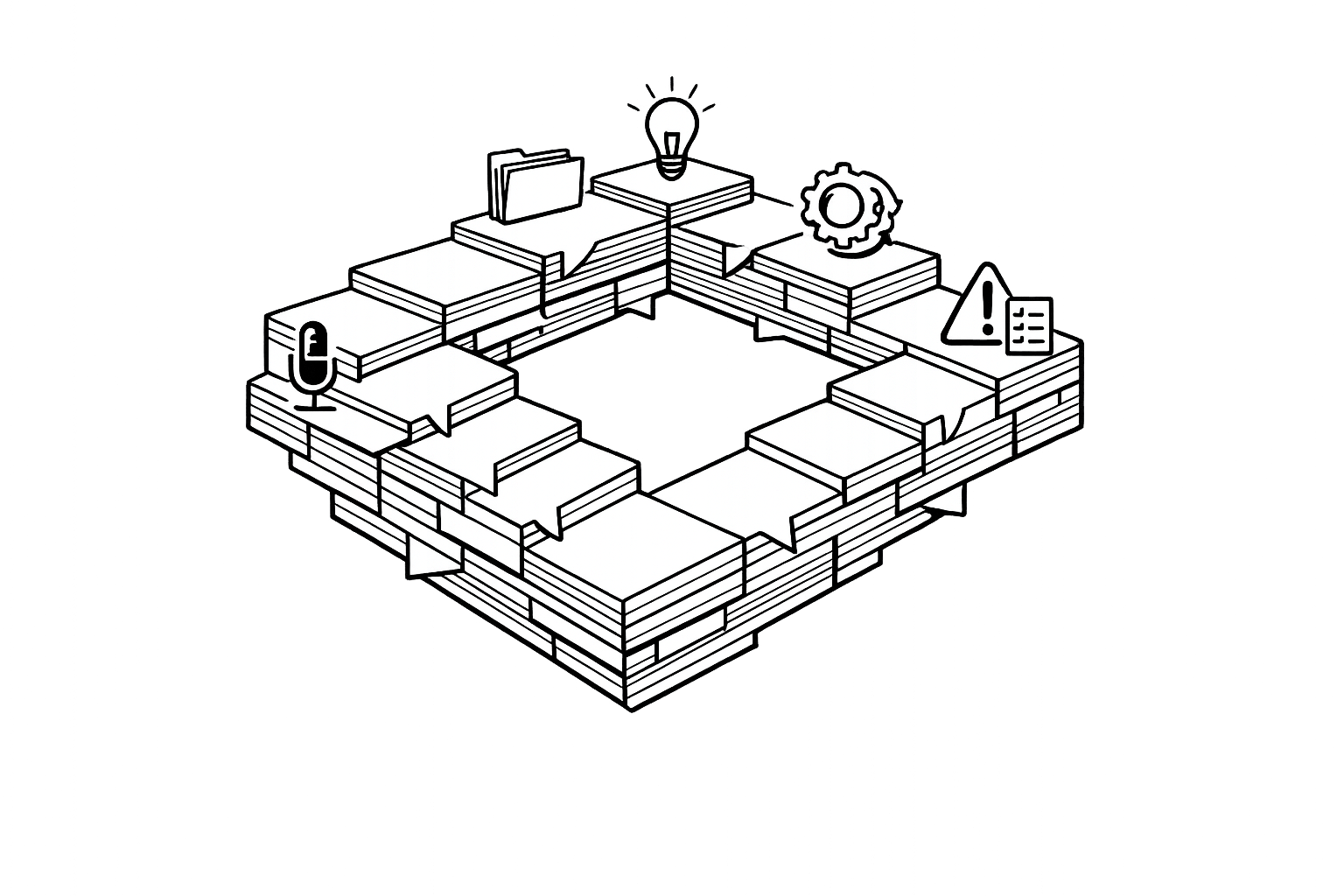

How It Works: Breaking a Task into Sub-Tasks

Here’s what actually happens behind the scenes:

• A decomposer prompt takes the goal and maps out the steps

• Each step is handled by a sub-task handler — a mini prompt trained or written to do one specific thing

• An execution controller moves the data between steps and tracks the full process

The model doesn’t try to guess everything at once. It follows a system. That’s why it works.

Core Components of a Decomposed Prompting System

There are three key components that make decomposed prompting possible:

• Decomposer prompt: Sets the flow, breaks the task down

• Sub-task handlers: Handle each part of the work — like extracting data, calculating values, or formatting text

• Execution controller: Manages the sequence and keeps everything running smoothly

This setup keeps things clean and modular. If one step breaks, you only fix that part — not the whole workflow.
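To make the three roles concrete, here is a minimal Python sketch. It is an illustration under assumptions, not a reference implementation: the names `decompose`, `HANDLERS`, and `run` are hypothetical, the plan is hard-coded, and plain functions stand in for the model calls a real system would make.

```python
import re
from typing import Any, Callable

# Sub-task handlers: each does exactly one job. In a real system each would
# wrap its own focused prompt; plain functions stand in for model calls here.
def extract_amounts(text: str) -> list[float]:
    """Extract: pull every number out of free text."""
    return [float(n) for n in re.findall(r"\d+(?:\.\d+)?", text)]

def add_up(amounts: list[float]) -> float:
    """Calculate: sum the extracted values."""
    return sum(amounts)

def format_total(total: float) -> str:
    """Format: render the result for the reader."""
    return f"Total: {total:.2f}"

HANDLERS: dict[str, Callable[[Any], Any]] = {
    "extract": extract_amounts,
    "calculate": add_up,
    "format": format_total,
}

def decompose(goal: str) -> list[str]:
    """Decomposer: return the ordered plan of handler names for a goal.

    A real decomposer would be a prompt that produces this plan; it is
    hard-coded here so the sketch runs without a model.
    """
    return ["extract", "calculate", "format"]

def run(goal: str, data: Any) -> Any:
    """Execution controller: pass each step's output to the next step."""
    for step in decompose(goal):
        data = HANDLERS[step](data)
    return data

print(run("total the invoice", "Hosting 12.50, domains 7.25"))  # Total: 19.75
```

Because the controller only looks handlers up by name, swapping one handler for a better version leaves the rest of the flow untouched.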

Decomposed Prompting vs Standard Prompting vs Chain-of-Thought

Here’s how they differ:

• Standard prompting gives one prompt, gets one response — it’s fast, but weak on complex tasks

• Chain-of-thought improves reasoning by guiding the model to think in steps — but it still happens in a single prompt

• Decomposed prompting splits the logic across separate prompts, one per step, so each part can be reused, checked, and improved on its own, which tends to help accuracy on complex tasks

If you’ve hit a wall with complex prompts, this is how you break through it.
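One way to see the difference is to count model calls. The sketch below is a rough illustration only: `EchoModel` is a hypothetical stand-in for an LLM client that echoes its prompt, and the prompt wording is made up for the example.

```python
class EchoModel:
    """Hypothetical stand-in for an LLM client: counts calls, echoes the prompt."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, prompt: str) -> str:
        self.calls += 1
        return f"<answer to: {prompt}>"

def standard(model: EchoModel, task: str) -> str:
    """Standard prompting: one prompt, one response."""
    return model(task)

def chain_of_thought(model: EchoModel, task: str) -> str:
    """Chain-of-thought: still one prompt, with a request to reason in steps."""
    return model(f"{task}\nThink step by step before answering.")

def decomposed(model: EchoModel, task: str, steps: list[str]) -> str:
    """Decomposed prompting: one call per step, output fed into the next call."""
    result = task
    for step in steps:
        result = model(f"{step}\nInput: {result}")
    return result

for strategy in ("standard", "chain_of_thought", "decomposed"):
    model = EchoModel()
    if strategy == "standard":
        standard(model, "Summarize the ticket thread")
    elif strategy == "chain_of_thought":
        chain_of_thought(model, "Summarize the ticket thread")
    else:
        decomposed(model, "ticket thread text", ["Extract issues", "Group issues", "Summarize"])
    print(strategy, model.calls)
```

The extra calls are the cost of decomposition. What you get in return is a visible intermediate result after every step.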

Why It Works: The Case for Task Specialization

Decomposed prompting gives each part of the task its own clear focus.

That means:

• The model doesn’t get overwhelmed

• Each handler is easier to design, test, and reuse

• You can plug in tools, logic, or calculations when needed

• Mistakes are easier to catch and fix in isolation

It’s structured prompting — not guesswork. And it’s exactly what complex AI tasks need.
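Catching mistakes in isolation usually means checking each step's output before it moves on. The following is a small sketch of that idea, assuming hypothetical names (`Step`, `StepError`, `run_steps`) and using plain functions in place of model-backed handlers.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass
class Step:
    name: str
    run: Callable[[Any], Any]     # does the work (a model call in practice)
    check: Callable[[Any], bool]  # validates this step's output only

class StepError(Exception):
    """Raised when a step's output fails its own check."""

def run_steps(steps: list[Step], data: Any) -> Any:
    """Run steps in order and stop at the first one whose output is invalid."""
    for step in steps:
        data = step.run(data)
        if not step.check(data):
            raise StepError(f"step '{step.name}' produced invalid output: {data!r}")
    return data

steps = [
    Step("split", str.split, lambda out: len(out) > 0),
    Step(
        "first_letters",
        lambda words: [w[0] for w in words],
        lambda out: all(len(c) == 1 for c in out),
    ),
]

print(run_steps(steps, "Jack Ryan"))  # ['J', 'R']

try:
    run_steps(steps, "")  # empty input: the split step yields nothing
except StepError as exc:
    print(exc)  # the message names the failing step
```

The error points at one named step, so the fix is scoped to that handler rather than to the whole prompt.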

Examples: What a Decomposed Prompt Actually Looks Like

Here’s how decomposed prompting looks in practice, using a structured system/user/assistant prompt as the format:

<system>

You are a text processing assistant.

Goal: Take a name string and return the first letter of each word, separated by spaces.

</system>

<user>

Input: "Jack Ryan"

Tasks:

1. Split the string into individual words.

2. Extract the first letter from each word.

3. Join the letters with a space between them.

</user>

<assistant>

Step 1: ["Jack", "Ryan"]

Step 2: ["J", "R"]

Step 3: "J R"

</assistant>

In a decomposed setup, each numbered task is handled by its own dedicated sub-task handler. This makes it easy to debug, reuse, or update just one part without breaking the full workflow.
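The three tasks in the transcript can be mirrored as three separate handlers. This is only a sketch: the function names are hypothetical, and ordinary Python functions stand in for what would be individual prompts.

```python
def split_words(name: str) -> list[str]:
    """Task 1: split the string into individual words."""
    return name.split()

def first_letters(words: list[str]) -> list[str]:
    """Task 2: extract the first letter from each word."""
    return [word[0] for word in words]

def join_letters(letters: list[str]) -> str:
    """Task 3: join the letters with a space between them."""
    return " ".join(letters)

step1 = split_words("Jack Ryan")
step2 = first_letters(step1)
step3 = join_letters(step2)

print(step1)  # ['Jack', 'Ryan']
print(step2)  # ['J', 'R']
print(step3)  # J R
```

Each intermediate value matches one "Step" line in the assistant output, so a wrong result can be traced to the handler that produced it.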

Use Cases: When You Should Use Decomposed Prompting

Decomposed prompting is ideal for:

• Structured planning tools

• Agents that perform multiple steps (like research → analyze → summarize)

• Code generation with logic layers (e.g., write → test → explain)

• Workflow assistants, especially in ops, product, or content

• Any task that needs tool use or memory between steps

It brings reliability and clarity to tasks that a single prompt can’t handle well.

When to Avoid It: Keep It Simple When You Can

This method isn’t for everything.

Avoid decomposed prompting when:

• The task is straightforward and short

• You only need one action or one output

• You want fast results without setup

• Maintaining full prompt context is essential (since decomposition can split it)

Use it when structure adds value. Skip it when speed matters more than complexity handling.

Modularity = Reusability

Sub-task handlers are like components. Build once, use many times.

For example:

• A text splitter handler can be reused in chat cleanup, data extraction, and pre-processing

• A label ranker handler can be used for sentiment analysis, ticket triage, or roadmap planning

• A merge output handler can be reused in summarization, reporting, or packaging responses

Each handler stays focused — and you stay in control.
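As a rough sketch of "build once, use many times", the snippet below reuses one text splitter in two different flows. All names here (`pipeline`, `split_sentences`, `drop_greetings`, `keep_questions`) are hypothetical, and the handlers are simple rule-based stand-ins for prompts.

```python
import re
from functools import reduce
from typing import Any, Callable

Handler = Callable[[Any], Any]

def pipeline(*steps: Handler) -> Handler:
    """Compose handlers left to right into a single callable."""
    return lambda data: reduce(lambda acc, step: step(acc), steps, data)

def split_sentences(text: str) -> list[str]:
    """Shared text splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def drop_greetings(sentences: list[str]) -> list[str]:
    """Chat cleanup step: remove one-word pleasantries."""
    greetings = {"hi", "hello", "thanks"}
    return [s for s in sentences if s.lower().rstrip("!.") not in greetings]

def keep_questions(sentences: list[str]) -> list[str]:
    """Data extraction step: keep only the questions."""
    return [s for s in sentences if s.endswith("?")]

# Two flows, one shared splitter.
chat_cleanup = pipeline(split_sentences, drop_greetings)
question_extraction = pipeline(split_sentences, keep_questions)

message = "Hi! The export fails on mobile. Can you fix it?"
print(chat_cleanup(message))         # ['The export fails on mobile.', 'Can you fix it?']
print(question_extraction(message))  # ['Can you fix it?']
```

Improving `split_sentences` once improves both flows, which is the practical payoff of keeping handlers small.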

Scalability: Why This Method Handles Bigger Workloads Better

As tasks grow — longer inputs, more steps, more tools — standard prompting starts to crack.

Decomposed prompting holds up because:

• It breaks things down

• Each part can run independently or recursively

• It adapts to tools, APIs, or longer sequences

• You can trace, fix, or improve any single point

That’s what makes it a solid choice for complex AI-powered systems and agent workflows.
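The "independently or recursively" point can be sketched as follows: when an input is too large for one handler, split it, solve each half the same way, and merge the results. This is an assumption-laden toy: `handle_chunk` stands in for a model call with a limited input size, and `limit` is a made-up parameter for that size.

```python
def handle_chunk(words: list[str]) -> int:
    """Leaf handler: stands in for a model call that accepts a small input.

    Here it simply counts how often the word 'error' appears.
    """
    return sum(1 for w in words if w.lower() == "error")

def solve(words: list[str], limit: int = 4) -> int:
    """Recursive controller: split until a chunk fits, then merge by adding."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    if len(words) <= limit:
        return handle_chunk(words)
    mid = len(words) // 2
    # Each half is an independent sub-problem and could run in parallel.
    return solve(words[:mid], limit) + solve(words[mid:], limit)

log = "error ok ok error ok error ok ok ok error".split()
print(solve(log))  # 4
```

The same shape applies to other mergeable tasks, such as summarizing sections and then summarizing the summaries, although the merge step is then a prompt of its own.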

Tool Integration: Let Sub-Task Handlers Call APIs and Code

Decomposed prompting doesn’t stop at text.

You can route sub-tasks to:

• Code interpreters

• API endpoints

• Database queries

• File retrieval systems

For example, take a request like this:

<user>

Task: Extract the top 3 user questions from this support ticket thread.

Then use a Python script to calculate how often each issue appears.

</user>

The decomposer breaks this into three sub-tasks:

1. Text extraction

2. Keyword grouping

3. Code execution for frequency count

This makes your LLM setup more like a workflow engine than just a chat model.
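Here is one way the three sub-tasks could look when the last one is routed to ordinary code. Treat it as a sketch: the keyword table, the sample thread, and the function names are invented for illustration, and the first two handlers use simple rules where a real system would likely call a model.

```python
from collections import Counter

# Hypothetical keyword-to-issue table used by the grouping step.
ISSUE_KEYWORDS = {
    "login": "login",
    "password": "login",
    "invoice": "billing",
    "refund": "billing",
    "export": "export",
}

def extract_questions(thread: list[str]) -> list[str]:
    """1. Text extraction: keep the messages that are questions."""
    return [msg for msg in thread if msg.strip().endswith("?")]

def group_by_issue(questions: list[str]) -> list[str]:
    """2. Keyword grouping: map each question to an issue label."""
    labels = []
    for question in questions:
        lowered = question.lower()
        label = next(
            (issue for keyword, issue in ISSUE_KEYWORDS.items() if keyword in lowered),
            "other",
        )
        labels.append(label)
    return labels

def count_issues(labels: list[str]) -> list[tuple[str, int]]:
    """3. Code execution: exact frequency count for the top 3 issues."""
    return Counter(labels).most_common(3)

thread = [
    "How do I reset my password?",
    "Thanks for the quick reply.",
    "Why was my invoice charged twice?",
    "I still cannot login, is the site down?",
    "Can I export reports to CSV?",
]

print(count_issues(group_by_issue(extract_questions(thread))))
# [('login', 2), ('billing', 1), ('export', 1)]
```

Counting is handed to code because it is deterministic there, while the fuzzier extraction and grouping steps are the ones a model handler would own.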

Training Decomposed Prompts: Why Specialization Wins

Each sub-task handler can be fine-tuned or adapted on its own.

Instead of reworking one massive prompt, you only adjust the part that needs help.

You can:

• Add few-shot examples for a single task

• Reinforce structure with consistent formatting

• Update logic in isolation without touching the whole pipeline

This keeps your system lean, flexible, and fast to improve over time.
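A small sketch of per-handler few-shot examples follows. The `HandlerPrompt` class and its `Input:`/`Output:` layout are assumptions made for this example, not a standard format.

```python
from dataclasses import dataclass, field

@dataclass
class HandlerPrompt:
    """Prompt template for one sub-task handler, with its own few-shot examples."""
    instruction: str
    examples: list[tuple[str, str]] = field(default_factory=list)

    def render(self, user_input: str) -> str:
        """Build the prompt text with a consistent Input/Output layout."""
        lines = [self.instruction]
        for example_input, example_output in self.examples:
            lines += [f"Input: {example_input}", f"Output: {example_output}"]
        lines += [f"Input: {user_input}", "Output:"]
        return "\n".join(lines)

splitter = HandlerPrompt("Split the name into words.")
ranker = HandlerPrompt("Rank the label from 1 (low) to 5 (high).")

ranker_before = ranker.render("slow export")

# Improve only the splitter by giving it one worked example.
splitter.examples.append(("Jack Ryan", '["Jack", "Ryan"]'))

print(splitter.render("Ada Lovelace"))
print(ranker.render("slow export") == ranker_before)  # True: ranker is untouched
```

Each handler carries its own examples, so adding or changing them for one task cannot shift the behavior of another.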

Prompt Templates: Real-World Modular Prompt in Action

Let’s look at a full decomposed prompt designed for product teams.

<system>

You are a PM assistant.

Goal: Turn raw user feedback into a prioritized feature list using RICE (Reach, Impact, Confidence, Effort).

</system>

<user>

Project: Team Dashboard Redesign

Capacity (story-points/sprint): 45

Feedback:

<feedback>

– "We need a better way to track task updates"

– "It's hard to filter project milestones"

– "The dashboard is too cluttered on mobile"

</feedback>

Tasks:

1. Group feedback into 3–5 themes

2. Score each using RICE

3. Output top 3 themes as a roadmap

</user>

<assistant>

Theme: Mobile Usability | Mentions: 1

RICE: R=8, I=7, C=6, E=4 → Score=84

Theme: Task Tracking | Mentions: 1

RICE: R=9, I=6, C=5, E=3 → Score=90

Theme: Filter Navigation | Mentions: 1

RICE: R=7, I=5, C=6, E=4 → Score=52.5

Roadmap (Next 6 Months):

• Q3 – Improve mobile dashboard layout – 20% bounce rate drop

• Q3 – Simplify task tracking view – 2x faster updates

• Q4 – Add filter logic to milestones – Increase milestone completion

</assistant>

Each part is clean, isolated, and ready to scale.
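The scoring step is a good candidate for plain code, since RICE is simple arithmetic: Reach × Impact × Confidence ÷ Effort. The sketch below assumes that standard formula and reuses the R, I, C, E values from the transcript; the `Theme` class and `top_themes` helper are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class Theme:
    name: str
    reach: float
    impact: float
    confidence: float
    effort: float

    @property
    def rice(self) -> float:
        """RICE score: (Reach * Impact * Confidence) / Effort."""
        return self.reach * self.impact * self.confidence / self.effort

def top_themes(themes: list[Theme], n: int = 3) -> list[Theme]:
    """Rank themes by RICE score, highest first, and keep the top n."""
    return sorted(themes, key=lambda theme: theme.rice, reverse=True)[:n]

themes = [
    Theme("Mobile Usability", reach=8, impact=7, confidence=6, effort=4),
    Theme("Task Tracking", reach=9, impact=6, confidence=5, effort=3),
    Theme("Filter Navigation", reach=7, impact=5, confidence=6, effort=4),
]

for theme in top_themes(themes):
    print(f"{theme.name}: {theme.rice}")
# Task Tracking: 90.0
# Mobile Usability: 84.0
# Filter Navigation: 52.5
```

Letting a handler propose the R, I, C, E inputs while code computes and ranks the scores keeps the arithmetic out of the model's hands.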

Choosing the Right LLM for Decomposed Prompting in 2025

Not every model handles decomposed workflows equally. Here’s how they compare:

• GPT-4o / GPT-4.5: Excellent at precision and logic execution

• Claude Opus 4: Great for long-form reasoning, task splitting, and massive input context

• LLaMA 4 (Open-source): Fast, flexible, and getting better at structured workflows

• Gemini 1.5: Performs well in hybrid tool + text environments

Your choice depends on the task.

For deep decomposed reasoning, Claude or GPT-4o leads. For flexible local setups, LLaMA 4 Scout is strong.