What is LangChain? Is LangChain worth it?

If you’ve been building with AI tools lately, you’ve probably heard the name LangChain.

Everyone’s throwing it around like it’s the secret sauce behind smart agents, chatbots, and all those AI workflows.

But let’s get real for a second — what is LangChain, really?

And should you even bother using it?


What Is LangChain?

LangChain is a developer framework for building apps powered by LLMs (like GPT-4 or Claude).

It gives you tools to:

• Connect AI models to your own data

• Chain multiple steps together (like: search → summarize → respond)

• Add memory so your app can “remember” past chats

• Plug in APIs, tools, and even search engines

Think of it like LEGO for AI workflows.
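To make the "chain" idea concrete, here's a tiny sketch of the search → summarize → respond flow in plain Python. Heads up: this is not LangChain's API. The names `fake_search`, `fake_summarize`, `fake_respond`, and `run_chain` are made up for illustration, and the canned strings stand in for where a real app would call a search tool and an LLM.

```python
from functools import reduce
from typing import Callable

# A step takes text in and hands text to the next step.
Step = Callable[[str], str]

def fake_search(query: str) -> str:
    # Hypothetical stand-in for a search tool; returns canned text.
    return f"Results for '{query}': LangChain is a framework for LLM apps."

def fake_summarize(text: str) -> str:
    # Hypothetical stand-in for an LLM call; keeps what follows the first ": ".
    return text.split(": ", 1)[-1]

def fake_respond(summary: str) -> str:
    # Hypothetical stand-in for the final answer-writing step.
    return f"Answer: {summary}"

def run_chain(steps: list[Step], user_input: str) -> str:
    # Feed each step's output into the next one, left to right.
    return reduce(lambda value, step: step(value), steps, user_input)

answer = run_chain([fake_search, fake_summarize, fake_respond], "What is LangChain?")
print(answer)
```

The point is the shape: each piece does one job, and the chain just passes results along. A framework adds the real model calls, retries, and logging around that same idea.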

What Can You Actually Build With It?

Here’s what people are using LangChain for:

• Smart chatbots that talk like humans

• Custom search tools over PDFs or websites

• AI agents that browse the web, answer questions, and take action

• Workflow automation with logic and memory

If you’ve seen a bot that sounds like it “gets it,” a framework like LangChain may well be behind the scenes.

Key Features You Should Know

• Chains: Connect multiple AI calls together like puzzle pieces.

• Memory: Keep conversation history across turns so your chatbot doesn’t forget what was said earlier.

• Agents: Let the AI decide what tools to use and when.

• Retrieval (RAG): Pull facts from your own documents or databases.

• Tool Use: Connect to calculators, APIs, or even other AI models.
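Retrieval is the feature people ask about most, so here's a rough sketch of what "pull facts from your own documents" means. This is plain Python, not LangChain's API: `DOCS`, `tokenize`, `retrieve`, and `build_prompt` are hypothetical names, the documents are invented sample text, and the word-overlap scoring is a simple stand-in for the embedding search a real setup would likely use.

```python
import re

# Invented sample documents standing in for your own data.
DOCS = [
    "Refunds are processed within 14 days of the return request.",
    "Our office is closed on public holidays.",
    "Shipping is free for orders over 50 euros.",
]

def tokenize(text: str) -> set[str]:
    # Lowercase the text and split it into a set of words and numbers.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    # Rank documents by how many words they share with the question.
    question_words = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(question_words & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, docs: list[str]) -> str:
    # Put the best-matching text in front of the question before calling a model.
    context = "\n".join(retrieve(question, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

prompt = build_prompt("How long do refunds take?", DOCS)
print(prompt)
```

The model never sees all your documents. It only sees the few snippets that matched, which is why retrieval quality matters so much in RAG apps.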

Why Developers Love LangChain

• It saves time. You don’t have to build everything from scratch.

• It supports many models (OpenAI, Anthropic, Cohere, and more).

• There’s a huge community and tons of examples.

• It works with Python and JavaScript.

It’s kind of like a “batteries-included” toolkit for AI builders.

Where LangChain Struggles

LangChain isn’t perfect. Some real talk:

• Can feel heavy or bloated for small projects

• Sometimes too many layers of abstraction

• Debugging long chains can be tricky

• It still takes dev experience to get the best results

If you just need to ask GPT a question and get an answer — LangChain is probably overkill.

LangChain vs Alternatives (Like LlamaIndex or Haystack)

• LangChain = full-featured, modular, flexible

• LlamaIndex = great for retrieval-based apps, simpler to start

• Haystack = focused on search + RAG, fast API support

LangChain is the most flexible of the three — but also the most complex. Choose based on your use case.

Is LangChain Free?

Yes. The framework is open-source.

But the tools you plug into it (like GPT-4, Claude, or Pinecone) can cost money.

You don’t pay for LangChain itself — just for the services it connects with.
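Since many model APIs bill per token, a quick back-of-the-envelope calculation helps here. The sketch below assumes per-token pricing, and the prices and token counts are placeholder numbers, not real rates for any provider. `estimate_cost` is a hypothetical helper, so plug in the current numbers from your provider's pricing page.

```python
def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    # Cost of one request, given prices quoted per one million tokens.
    total = input_tokens * input_price_per_million + output_tokens * output_price_per_million
    return total / 1_000_000

# Placeholder values only: 2,000 tokens in, 500 tokens out, made-up prices.
per_request = estimate_cost(
    2_000, 500, input_price_per_million=1.0, output_price_per_million=2.0
)
per_thousand_requests = per_request * 1_000
print(f"{per_request:.4f} per request, {per_thousand_requests:.2f} per 1,000 requests")
```

Chains and agents often make several model calls per user request, so multiply accordingly when you estimate a real workload.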

Is LangChain Worth Learning in 2025?

Short answer: Yes — if you’re building AI products.

LangChain is still leading the way in giving devs control over LLMs.

It’s not the easiest tool, but it’s one of the most powerful if you want to build something real.

If you’re serious about custom AI workflows — it’s worth your time.

Who Should Use LangChain (and Who Shouldn’t)?

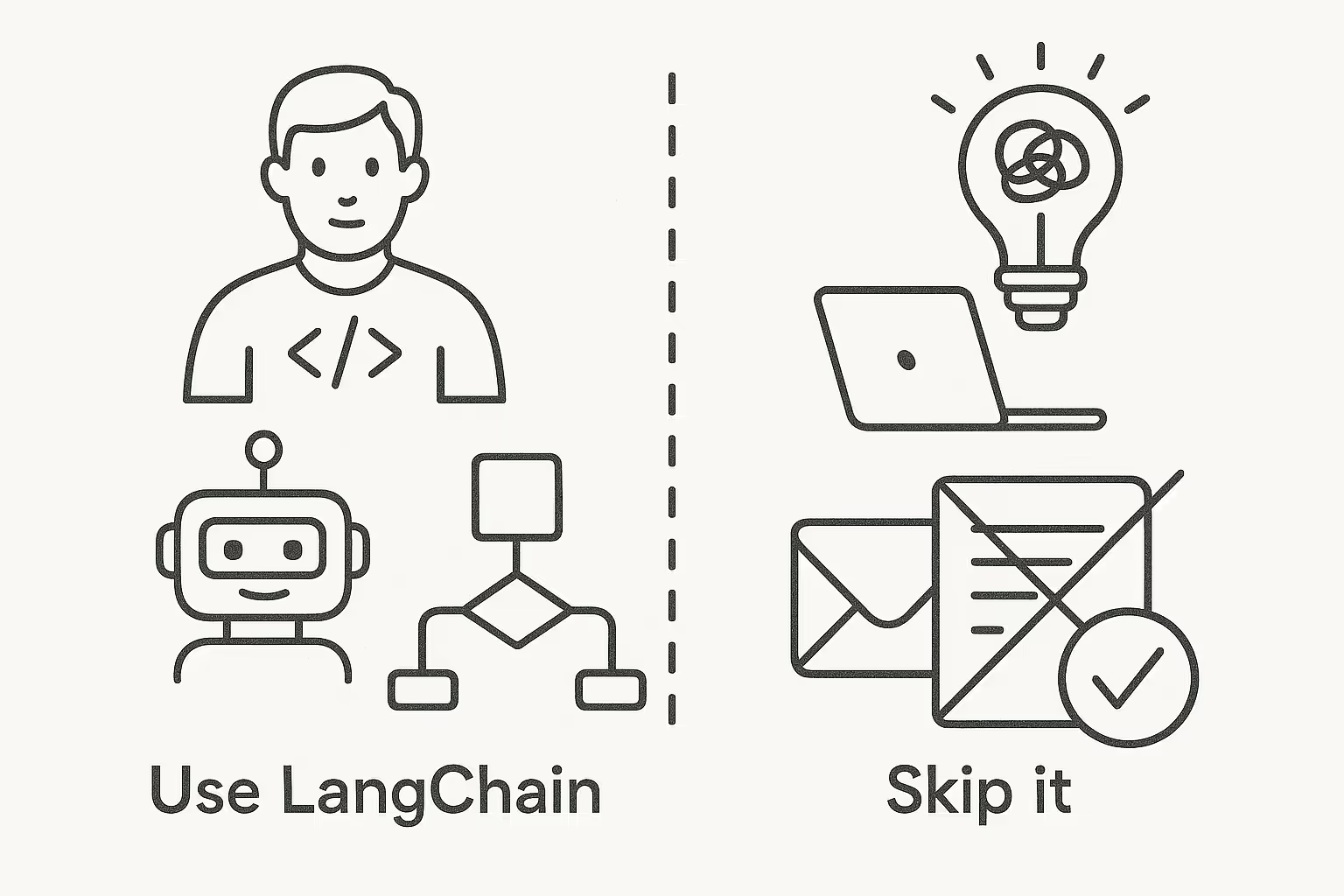

Use it if:

• You’re a developer building LLM apps

• You want control over how your AI behaves

• You’re building complex flows with multiple tools or steps

Skip it if:

• You’re just experimenting with prompts

• You don’t want to code

• You only need a single model response

Final Thoughts: My Take on LangChain

LangChain has its quirks, but in my view it’s the most complete framework for building smart AI workflows today.

It keeps evolving, it’s backed by a big community, and it plays well with most models.

It’s not for everyone. But if you’re building something serious — it’s probably what you’re looking for.