Multimodal AI: Text-to-Everything Tools Explained

Multimodal AI processes multiple types of data - like text, images, audio, and video - simultaneously, enabling human-like communication. Unlike traditional AI, which focuses on a single data type, multimodal AI combines diverse inputs for more nuanced understanding and outputs.

Why It Matters:

- Market Growth: The multimodal AI market was valued at $1.34 billion in 2023 and is projected to reach $10.9 billion by 2030.

- Adoption: Only 1% of companies used it in 2023, but Gartner predicts this will grow to 40% by 2027.

Key Benefits:

- Faster decision-making and improved productivity.

- Enhanced customer experiences across industries like finance, retail, and manufacturing.

- Tools like MidJourney, DALL·E, and Runway make content creation (images, videos, audio, and even 3D models) accessible and efficient.

Quick Comparison of Top Tools:

| Tool | Best For | Ease of Use | Pricing | Platforms |
|---|---|---|---|---|
| MidJourney | Artistic images | Requires expertise | $10/month | Discord, Web |
| DALL·E | Photorealistic images | User-friendly | Free/$20 per month | Web, ChatGPT |
| Runway | Text-to-video | Flexible plans | Free to $76/month | Web, API |

How to Choose:

- Define your goals (e.g., content creation, marketing, product development).

- Look for tools with speed, integration capabilities, scalability, and accuracy.

- Test free trials to find the best fit for your workflow.

Multimodal AI is transforming how businesses create and communicate, offering faster, more versatile solutions for content creation and customer engagement.


Text-to-Image Tools: Creating Pictures from Words

Text-to-image AI tools are reshaping how businesses create visual content by turning text prompts into striking imagery. These tools rely on neural networks trained on millions of text-image pairs and use diffusion modeling to transform random noise into visuals that align with the input. This technology is just one example of how multimodal AI is pushing the boundaries of converting text into various media formats.
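To make the "random noise into visuals" idea more concrete, here is a toy sketch of an iterative denoising loop. It is not a real diffusion model: the `target` list is a hypothetical stand-in for the image a prompt describes, and the "denoiser" simply knows the answer, whereas a real model has to learn that prediction from text-image pairs.

```python
import random

def toy_denoise(target, steps=50, seed=0):
    """Start from pure noise and step toward `target`, shrinking the noise each step."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # pure Gaussian noise
    for t in range(steps, 0, -1):
        noise_scale = (t - 1) / steps  # decays to 0 on the final step
        blend = 1.0 / t                # move a growing fraction of the way each step
        # Assumption: a stand-in for the learned, text-conditioned denoiser.
        predicted_clean = target
        x = [
            xi + blend * (pi - xi) + 0.1 * noise_scale * rng.gauss(0.0, 1.0)
            for xi, pi in zip(x, predicted_clean)
        ]
    return x

# Three "pixel" values standing in for an image.
result = toy_denoise([0.2, 0.8, 0.5])
print([round(v, 3) for v in result])
```

The point of the sketch is the shape of the loop: many small steps, each removing a little noise, until the output settles on something that matches the conditioning.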

Between 2022 and 2023, more than 15 billion AI-generated images were created, and 63% of marketing leaders plan to invest in generative AI tools soon. Additionally, 85% of shoppers say product photos are a key factor in their purchasing decisions.

These tools offer a budget-friendly alternative to hiring graphic designers. They help businesses maintain consistent branding, enable visual A/B testing, and support more personalized marketing campaigns.

"These text-to-image tools allow users to edit or produce imagery by using textual prompts; they are easy to use and empower marketers to create rich imagery from scratch and to make complex edits quicker."

– Praveen Krishnamurthy, Product Marketing Manager at Adobe

Let’s take a closer look at two leading tools in this space: MidJourney and DALL·E.

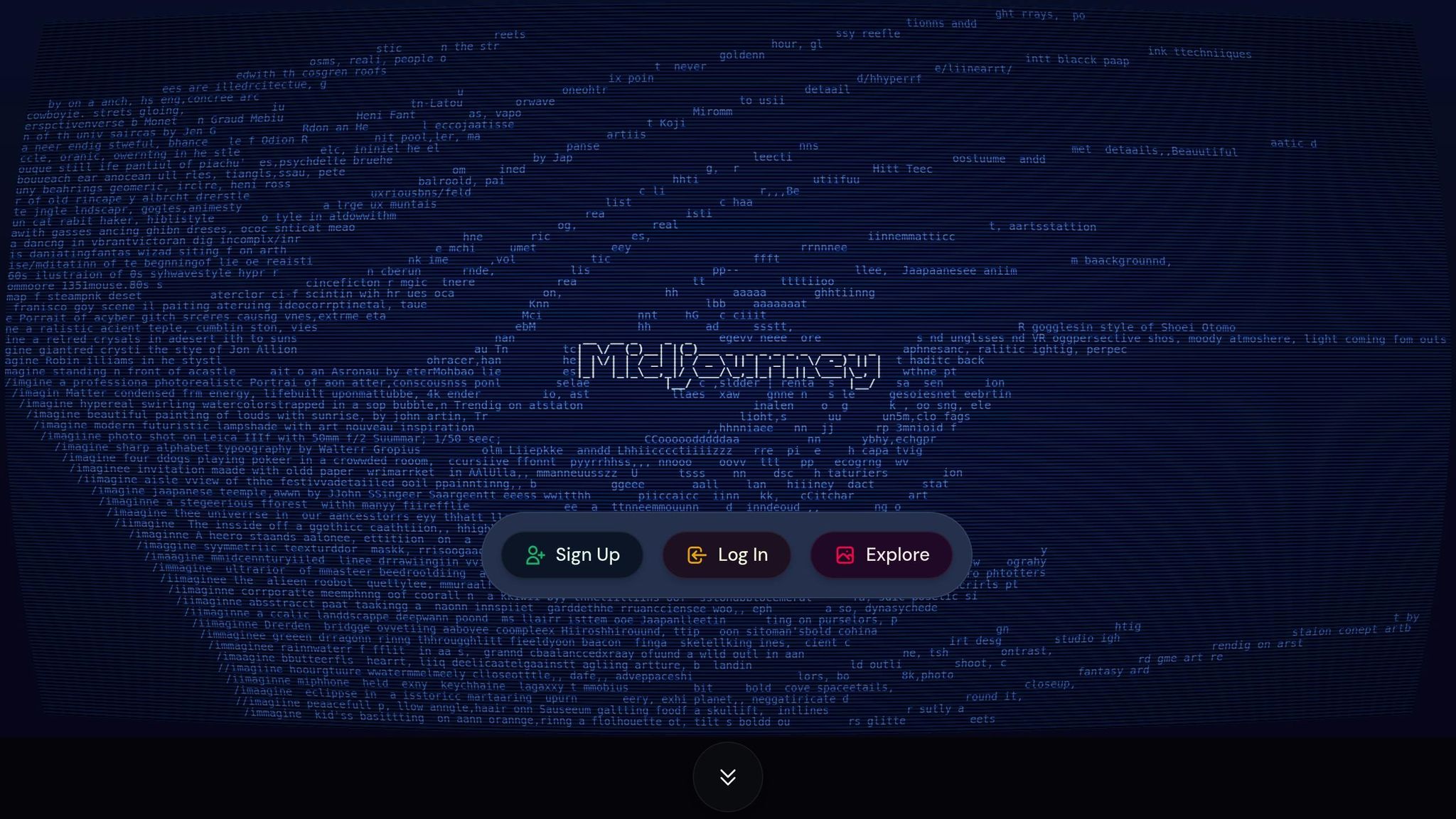

MidJourney: Making Artistic Images

MidJourney stands out for its ability to create high-quality, artistic visuals. Using diffusion technology, it generates stylized artwork that’s perfect for branding and creative marketing. Users can fine-tune results by embedding parameters into prompts, although the tool tends to work best with concise, keyword-focused inputs.

A notable example of its professional use comes from Zaha Hadid Architects, which employs MidJourney to conceptualize design ideas and refine them for 3D modeling.

"For me it's always been very similar to verbal-prompting teams, referencing prior projects and ideas and gesticulating with my hands. That's the way of generating ideas and I can do that now directly with MidJourney or [DALL·E], or the team can do it as well on our behalf, and so I think that's quite potent."

– Patrik Schumacher, Zaha Hadid Architects Principal

MidJourney is available via a subscription starting at $10 per month, accessible through Discord or a web interface. While it excels at speed and creativity, it struggles with accurately rendering text within images.

DALL·E: Generating Realistic Images

DALL·E - named after Salvador Dalí and Pixar’s WALL-E - is renowned for its photorealistic image generation and strong natural language understanding. The latest version, DALL·E 3, is built into ChatGPT, making it especially user-friendly. Users can provide detailed prompts, and the tool responds with visuals that not only match the description but also render text legibly within the image - ideal for marketing materials.

For instance, Copy.ai uses DALL·E to create visuals for blog posts, social media, and web design, significantly speeding up their creative process.

"We use DALL·E to generate visual content for our blog posts, social media, and website design. DALL·E has significantly impacted our workflow by speeding up the content creation process and allowing us to experiment with different visual styles effortlessly."

– Chris Lu, Co-founder of Copy.ai

Kam Talebi, CEO of Butcher’s Tale, found DALL·E invaluable for restaurant decor, using it to create unique, budget-friendly art pieces for large-format prints.

"We used DALL·E to create a couple of pieces of art and had them printed in a large format to decorate one of our restaurants. We wanted something unique and affordable."

– Kam Talebi, CEO of Butcher's Tale

DALL·E offers a free tier via ChatGPT, with premium plans starting at $20 per month as part of ChatGPT Plus. It also provides indemnification protection for enterprise users. Marketing consultant Frank Strong highlights its appeal:

"I used to use free stock photos, and these images by DALL·E are just 1000% more visually appealing."

– Frank Strong

DALL·E excels at creating realistic, tailored visuals and educational illustrations, making it ideal for businesses that need polished, professional-quality images.

| Feature | MidJourney | DALL·E |
|---|---|---|
| Best For | Artistic, stylized images | Photorealistic, precise images |
| Ease of Use | Requires prompt expertise | User-friendly and conversational |
| Text Integration | May struggle with text | Integrates text seamlessly |
| Pricing | $10/month subscription | Free tier; $20/month premium |
| Platforms | Discord and web interface | Web, mobile, and API (via ChatGPT) |
| Customization | Extensive style options | Interactive editing tools |

Both MidJourney and DALL·E have proven their value in real-world applications. For example, DALL·E played a key role in a Heinz advertising campaign that generated 850 million earned impressions globally. Whether your goal is artistic expression or precise, photorealistic visuals, these tools can elevate your content strategy and streamline creative workflows. Their capabilities also pave the way for advancements in generating audio, video, and 3D models, which we’ll explore next.

Text-to-Audio and Text-to-Video: Adding Sound and Movement

AI isn't just about turning words into pictures anymore - it’s now giving text a voice and even bringing it to life through video. With multimodal AI, text can be transformed into audio and video using neural networks that create sounds, voices, and visuals. For example, text-to-video models use video diffusion technology to generate videos that match natural language inputs.

This shift is redefining how content is made. On average, people spend about 17 hours a week watching videos, pushing businesses to move from slower, traditional production methods to faster, AI-powered solutions. Similarly, creating audio content has become much simpler. These advancements are paving the way for even more exciting uses of multimodal AI.

Text-to-Audio: Turning Words into Sound

Modern text-to-speech systems rely on deep neural networks to process text. They analyze everything from words and punctuation to accents, pitch, tone, and rhythm. Once the text is analyzed, these systems create audio features that a vocoder then converts into lifelike speech.
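The three stages described above (text analysis, audio features, vocoder) can be sketched as a small pipeline. This is only an illustration under heavy assumptions: the function names are hypothetical, the "prosody" rules are made up, and sine tones stand in for speech where a real system would use trained neural networks at every stage.

```python
import math
import re

PAUSE_MARKS = {".", ",", "!", "?"}

def analyze_text(text):
    """Stage 1: split text into word and punctuation tokens."""
    return re.findall(r"[A-Za-z']+|[.,!?]", text.lower())

def acoustic_features(tokens, base_pitch_hz=180.0):
    """Stage 2: map each token to a (pitch_hz, duration_s) pair."""
    features = []
    for tok in tokens:
        if tok in PAUSE_MARKS:
            features.append((0.0, 0.25))  # pitch 0 marks a silent pause
        else:
            # Placeholder prosody: longer words last longer and sit slightly higher.
            features.append((base_pitch_hz + 5.0 * len(tok), 0.08 * len(tok)))
    return features

def vocoder(features, sample_rate=16000):
    """Stage 3: render features into waveform samples."""
    samples = []
    for pitch, duration in features:
        for i in range(round(duration * sample_rate)):
            if pitch == 0.0:
                samples.append(0.0)
            else:
                samples.append(math.sin(2 * math.pi * pitch * i / sample_rate))
    return samples

tokens = analyze_text("Hello, world.")
wave = vocoder(acoustic_features(tokens))
print(tokens, len(wave))
```

Keeping the stages separate mirrors how punctuation and rhythm are decided before any audio exists: the vocoder only ever sees the features, never the raw text.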

The uses for text-to-speech technology are vast. It’s a game-changer for podcasting, audiobooks, educational materials, and accessibility tools that help reduce screen time and alleviate visual strain. Platforms like Fliki are leading the charge, serving over 50,000 businesses and helping users achieve up to a fivefold increase in productivity when creating content. These tools even allow for voice personalization, ensuring brand consistency across audio content.

The visual side of this technology is equally impressive, with tools like Runway pushing the boundaries of what’s possible.

Runway: From Text to Video

Runway’s Gen-3 Alpha model allows users to transform text into video quickly, offering high-quality results with plenty of customization options. But it doesn’t stop there. The platform includes tools for editing, removing backgrounds, replacing objects, and even creating 3D captures from just three uploaded videos.

Organizations are using Runway to bring ideas to life, spark creativity, and significantly cut production costs.

"Runway makes the impossible possible when it comes to content creation. It's an invaluable tool." – R/GA

Runway’s pricing is flexible, catering to different needs. Users can choose from a free Basic plan with 125 credits, a Standard plan at $12 per month (billed annually) with 625 credits, a Pro plan at $28 per month (billed annually) with 2,250 credits, or an Unlimited plan at $76 per month (billed annually) that offers unlimited video creation.
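One quick way to compare the paid tiers is cost per credit. The sketch below uses only the prices and credit counts quoted above and assumes, as a simplification, that each credit allotment corresponds to one month of the listed price. The Basic and Unlimited plans are left out because a per-credit figure is not meaningful for them.

```python
# (monthly price in USD when billed annually, credits included)
PLANS = {
    "Standard": (12.0, 625),
    "Pro": (28.0, 2250),
}

def cost_per_credit(price_usd, credits):
    """Return the price of a single credit in USD."""
    return price_usd / credits

for name, (price, credits) in PLANS.items():
    print(f"{name}: ${cost_per_credit(price, credits):.4f} per credit")
# Standard: $0.0192 per credit
# Pro: $0.0124 per credit
```

On these numbers the Pro plan costs roughly a third less per credit than Standard, which matters mainly if you expect to use most of the allotment.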

For marketers and content creators, Runway is a powerful ally. It’s perfect for creating eye-catching ad campaigns, product demos, and engaging social media content. To maximize impact, keep videos short (under two minutes), use headlines that grab attention, ensure visuals match your brand’s tone, and add captions for sound-off viewing.

As Piyush Rawat notes:

"Text to video is not just a trend - it's a transformational shift in how marketers create and scale content. By lowering the barriers to entry, these tools empower marketers to produce engaging, high-quality videos at speed and scale."

These advancements in text-to-audio and text-to-video are just the beginning. Multimodal AI is evolving rapidly, with the potential to deliver even more complex outputs, including fully realized 3D models.

Text-to-3D Models: Building 3D Content

The world of multimodal AI is taking a bold step forward, moving beyond flat images and videos into the dynamic realm of three-dimensional space. Text-to-3D tools are changing the game, allowing users to turn simple text descriptions into detailed 3D models - no specialized training required.

This technology is advancing at a breakneck pace. HP predicts that AI-driven functional parts will see massive growth within the next two years, signaling a transformation in how industries approach 3D modeling. Tasks that once demanded deep technical expertise can now be handled with straightforward text prompts, opening up 3D modeling to businesses of all sizes. Let’s dive into how these tools work and the impact they’re already making.

How Text-to-3D Tools Work

The process of converting text into 3D models typically unfolds in four steps. First, the system processes and interprets the text, extracting details like shape, size, texture, and style. Next, it generates 2D images from various angles, creating a visual foundation. These images are then transformed into 3D models using advanced spatial reasoning. Finally, the system outputs files that are ready for 3D printing or digital use.

This transformation is powered by two core technologies: Neural Radiance Fields (NeRF) and diffusion models. NeRF excels at creating high-quality 3D models with realistic lighting and textures, while diffusion models refine the details and remove noise for polished results. Most platforms can generate a complete 3D model in just 15 to 25 seconds. What’s more, users can customize these models by supplying further text prompts, tailoring the output to meet specific needs. This streamlined process is proving to be a game-changer across various industries.
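The four steps described above can be sketched as a chain of functions. Everything here is a placeholder: the function names, the naive prompt parsing, and the empty mesh are assumptions for illustration, and a real platform would run a diffusion model in step 2 and a NeRF-style reconstruction in step 3.

```python
from dataclasses import dataclass, field

@dataclass
class ObjectSpec:
    shape: str
    attributes: list = field(default_factory=list)

def parse_prompt(prompt):
    """Step 1: interpret the text (naively: last word is the shape, the rest describe it)."""
    words = prompt.lower().split()
    if not words:
        raise ValueError("prompt must not be empty")
    return ObjectSpec(shape=words[-1], attributes=words[:-1])

def render_views(spec, angles=(0, 90, 180, 270)):
    """Step 2: produce one placeholder 2D 'image' per camera angle."""
    return [f"{spec.shape}@{angle}deg" for angle in angles]

def reconstruct_mesh(views):
    """Step 3: placeholder for lifting the 2D views into 3D geometry."""
    return {"source_views": len(views), "vertices": [], "faces": []}

def export_model(mesh, spec, fmt="stl"):
    """Step 4: name the output file for printing or digital use."""
    return f"{spec.shape}.{fmt}"

def text_to_3d(prompt):
    spec = parse_prompt(prompt)
    views = render_views(spec)
    mesh = reconstruct_mesh(views)
    return export_model(mesh, spec)

print(text_to_3d("small ceramic vase"))  # vase.stl
```

Refining a model with a follow-up prompt would, in this picture, mean re-running the chain with an updated `ObjectSpec` rather than editing the mesh by hand.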

Business Uses for 3D Models

Industries are already embracing the efficiency and creativity of text-to-3D tools. While these tools are best suited for creating individual objects rather than complex scenes or lifelike characters, they’re finding a strong foothold among game developers, architects, and artists.

In gaming and entertainment, developers are using text-to-3D tools to quickly generate assets like props, weapons, and environmental elements for video games and metaverse experiences. What used to take weeks can now be done in minutes, freeing up time to focus on gameplay and user experience.

Product development teams are also reaping the benefits. HP’s AI Text to 3D solution showcases the versatility of this technology. Teams can design everything from custom keycaps featuring unique designs like dragons or pets to intricate jewelry, personalized eyewear, and home decor items like vases or lamps. They can even create collectibles such as action figures and model kits - all tailored to specific preferences.

"HP AI Text to 3D transforms your ideas into reality." - HP

In architecture and construction, these tools speed up prototyping by turning sketches or written descriptions into 3D models. This allows architects to visualize ideas quickly and share concepts with clients before diving into detailed technical plans.

E-commerce and digital marketing teams are also finding text-to-3D invaluable. Instead of relying on costly product photography, retailers can generate 3D models that customers can examine from every angle, enhancing the online shopping experience.

Beyond practical applications, text-to-3D tools are sparking creativity in product design, helping teams imagine new structures and explore unconventional ideas.

Real-world examples highlight the power of this technology. Alpha3D has helped Viva Technology create 3D models effortlessly, even without traditional 3D modeling skills. The company has also partnered with Threedium to rapidly produce 3D assets for enterprise clients, and it supports WANNA in generating high-quality models quickly with a user-friendly interface. These success stories show that text-to-3D tools are not just a novelty - they’re becoming essential for businesses looking to innovate and cut production costs while maintaining top-tier quality.


How to Choose the Right Multimodal AI Tool

With one forecast putting the multimodal AI market at $4.5 billion by 2028, growing at an annual rate of 35%, selecting the right tool is more important than ever. The stakes are high - 53% of companies report major revenue losses due to faulty AI outputs, and systems left unmonitored for six months see a 35% increase in errors. So, how do you make the right choice?

Start by identifying your goals and challenges. Whether you want to speed up content creation, improve marketing materials, or streamline product development, having clear, measurable objectives will guide your decision and help you avoid costly missteps. Below, we’ll break down the key features to look for and compare some of the top tools to simplify your decision-making process.

Key Features to Consider

When evaluating multimodal AI tools, keep these features in mind:

- Processing Speed and Performance: Check the platform’s benchmarks for speed and responsiveness.

- Integration Capabilities: Opt for tools with robust APIs and seamless compatibility with your existing software. Avoid those requiring manual data transfers.

- Accuracy Across Modalities: Ensure the tool can handle different input types - text, images, video - while maintaining low error rates, even with complex data.

- Scalability: Choose a platform that can grow with your business and handle increased usage as your needs expand.

- Data Handling and Governance: Look for strong data governance features that give you control over how your data is stored and used.

- Computational Requirements: Understand the hardware and processing resources the tool demands to ensure it fits your budget and infrastructure.
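One way to turn this checklist into a decision is a simple weighted scoring matrix. The sketch below is illustrative only: the weights, the 1-to-5 ratings, and the tool names "Tool A" and "Tool B" are made-up assumptions, and you would replace them with your own priorities and trial results.

```python
# Hypothetical weights for the six criteria above; they sum to 1.0.
WEIGHTS = {
    "speed": 0.20,
    "integration": 0.20,
    "accuracy": 0.25,
    "scalability": 0.15,
    "governance": 0.10,
    "compute_fit": 0.10,
}

def weighted_score(ratings, weights=WEIGHTS):
    """Combine 1-5 ratings into a single score using the given weights."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[name] * ratings[name] for name in weights)

# Made-up ratings from a hypothetical trial period.
candidates = {
    "Tool A": {"speed": 4, "integration": 3, "accuracy": 5,
               "scalability": 3, "governance": 4, "compute_fit": 2},
    "Tool B": {"speed": 5, "integration": 4, "accuracy": 3,
               "scalability": 4, "governance": 3, "compute_fit": 4},
}

ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

Writing the weights down before testing any tool helps keep the comparison honest, since it is tempting to adjust priorities after the fact to favor a tool you already like.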

Comparing Popular Tools

Different tools excel in different areas, so matching your needs to the right platform is crucial. Here’s a snapshot of some leading options:

- Google Gemini: Known for its extensive training dataset and ability to handle a wide range of tasks. However, it may be less effective in open-ended conversations and can occasionally produce errors in image generation.

- ChatGPT (GPT-4V): Offers excellent public availability and excels in open-ended conversations and text generation. Its smaller training dataset and focus on text may limit its versatility.

- Meta ImageBind: A flexible tool ideal for multimodal content searches and diverse tasks, but its steep learning curve and high computing requirements can be challenging for smaller teams.

- Runway Gen-2: Excels at video rendering with fast turnaround times, making it perfect for video-heavy projects. However, experimental videos may need further refinement.

- LangChain and Microsoft AutoGen: Best for teams with advanced technical expertise.

- Bizway: Designed for non-technical users, offering ease of use.

- Phidata and LangGraph: Particularly strong at managing complex multimodal datasets.

Cost structures vary widely across these platforms. Some use subscription models, others charge one-time fees, and some operate on usage-based pricing. Be sure to consider both upfront costs and ongoing expenses like training, maintenance, and infrastructure upgrades.

"We're trying to epitomize all that we are familiar [with]: the client, the client's needs, our answers, and the opposition, and then present to the client what they need when they need it... On the off chance that we had a sales rep who could do that for everybody, that would be perfect, yet we don't." - Seth Earley, Author of The AI-Powered Enterprise and CEO of Earley Information Science

Multimodal AI investments deliver an average ROI of 3.5×, improving operational efficiency, employee productivity, and customer satisfaction. When evaluating tools, think beyond the initial price tag. Consider the long-term value, including cost savings, revenue growth, better customer experiences, and enhanced team productivity.

Before committing to a platform, always start with a free trial or a limited version. Testing how the tool fits into your workflow will help you spot potential compatibility or performance issues early. The right multimodal AI tool should simplify and enhance your processes - not make them more complicated.

Conclusion: Using Multimodal AI for Better Content

Multimodal AI is reshaping how businesses approach content creation by seamlessly integrating text, images, audio, and video into unified workflows. What once seemed experimental is quickly becoming a cornerstone of modern strategies.

Industry data highlights this shift. Gartner predicts that by 2027, 40% of companies will adopt multimodal AI, a dramatic rise from just 1% in 2023. Similarly, 30% of outbound marketing messages from large organizations are expected to be AI-generated by 2025, compared to less than 2% in 2022. This evolution isn't just about saving time - it’s about staying ahead in a competitive, fast-changing environment.

Businesses are already seeing measurable results. Multimodal AI tools are being used to automate content creation across formats, from writing product descriptions to crafting social media captions. These tools also produce visually engaging graphics and videos, raising the overall quality of content. For instance, one in three businesses plans to use AI for website content creation, while 44% are focusing on generating multilingual content.

One key strength of multimodal AI is its ability to bridge the gap between different types of content. By using text prompts to refine and adjust outputs, creators can experiment with their ideas without starting over. This capability makes professional-quality content creation accessible to anyone, even those without technical expertise, simply by describing their vision in plain language.

Tools like MidJourney, DALL·E, and Runway are already showcasing what’s possible. These platforms demonstrate how businesses can align AI capabilities with their goals, build internal expertise, and implement governance for responsible use. Whether it’s MidJourney’s artistic designs or Runway’s video generation, these tools show how text can become a universal interface for creativity.

The era of multimodal AI in content creation isn’t a distant future - it’s already here, ready for businesses to embrace today.

FAQs

How can businesses choose the right multimodal AI tool for their needs?

To choose the right multimodal AI tool, start by clearly outlining your business objectives and pinpointing the challenges you aim to solve. Think about the specific types of outputs you’ll need - whether it’s images, audio, video, or even 3D models - and consider how the tool will fit into your current workflows.

When evaluating tools, focus on a few key areas: performance, cost, user-friendliness, and how well the tool aligns with the needs of your industry. Don’t forget to examine deployment options, such as whether the tool operates in the cloud or on-premise, and make sure it adheres to your security and data privacy standards. By matching the tool’s capabilities with your business goals, you’ll set the stage for meaningful results.

What challenges might arise when using text-to-everything AI tools for content creation?


Text-to-everything AI tools are undeniably powerful, but they’re not without their hurdles. A common pitfall is that AI-generated content can sometimes feel flat, lacking the spark of originality or emotional resonance that connects with people. This often happens because these tools rely heavily on existing data, which can make it tough for them to generate fresh ideas or pick up on subtle nuances like humor or cultural references.

Another big challenge is accuracy. AI systems can inadvertently carry over mistakes or biases from their training data, which can compromise the reliability of the content they produce. On top of that, when these tools are used to create different formats - like images, audio, or video - technical issues can crop up. Ensuring everything works smoothly across multiple mediums can be tricky and time-consuming.

Understanding these challenges is key to making the most of these tools, whether you’re using them for creative projects or professional tasks. By being aware of their limitations, you can better manage expectations and tailor their use to fit your needs.

How are text-to-3D model tools transforming industries like gaming and architecture?

Text-to-3D model tools are shaking up industries like gaming and architecture by making workflows smoother and sparking new levels of creativity. In gaming, developers can now create detailed, game-ready assets using just a simple text prompt. This not only speeds up production but also trims costs. The result? Faster iterations, richer environments, and the freedom to incorporate intricate elements without the need for time-consuming manual modeling.

For architects, these tools are a game-changer when it comes to transforming ideas into reality. By converting written descriptions into detailed 3D models, architects can communicate their visions more effectively with clients, experiment with rapid prototypes, and explore bold design ideas. This technology is redefining how professionals in these fields tackle challenges and push creative boundaries.