Top Advanced Prompt Resources for Technical Users

Prompt engineering is reshaping how technical professionals interact with AI models. It’s no longer about simple instructions; it’s about creating precise, structured prompts to improve workflows like coding, data analysis, and debugging. Advanced techniques such as Chain-of-Thought reasoning, Retrieval-Augmented Generation (RAG), and multimodal prompting are transforming the field.

Key highlights from the article:

- Prompt Libraries: Prebuilt templates for tasks like AutoML, regex generation, and debugging. Enterprise frameworks offer more structure and consistency.

- Prompt Tools: Platforms like LangChain and PromptLayer help with testing, orchestration, and version control.

- Integration Frameworks: Systems like RAG link AI models with external data for better context and outputs.

- Evaluation Platforms: Tools like OpenAI Evals help verify that prompts meet performance benchmarks.

For professionals, adopting these resources can optimize productivity while reducing costs. By leveraging prompt libraries, tools, and frameworks, you can enhance precision and streamline integration into production systems.

Advanced Prompt Engineering Resources Framework for Technical Users

1. Prompt Libraries

Curated Technical Prompt Collections

Specialized prompt libraries offer ready-to-use templates that simplify complex workflows. For example, Travis Tang's "ChatGPT Data Science Prompts" repository includes 60 tailored prompts for tasks like AutoML, hyperparameter tuning, and regex generation. It has gained significant traction on GitHub, with over 1,600 stars and 275 forks, which suggests it is genuinely useful to technical practitioners.

When exploring these libraries, look for features such as persona-based prompts (e.g., "act as a senior data scientist") and placeholders like {{variable_name}} for dynamic variable injection. These features allow you to customize prompts for different datasets and scenarios.
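The following minimal sketch shows one way to fill {{variable_name}} placeholders in a persona-based library prompt. It assumes the double-brace convention described above. The function name `render_prompt` and the template text are illustrative and do not come from any particular library.

```python
import re

# Matches {{variable_name}} placeholders (letters, digits, underscores; spaces inside braces allowed).
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, variables: dict) -> str:
    """Fill every {{name}} placeholder, failing loudly if a variable is missing."""
    needed = {m.group(1) for m in PLACEHOLDER.finditer(template)}
    missing = needed - set(variables)
    if missing:
        raise KeyError(f"missing prompt variables: {sorted(missing)}")
    return PLACEHOLDER.sub(lambda m: str(variables[m.group(1)]), template)


# Hypothetical persona-based template in the style of a data science prompt library.
TEMPLATE = (
    "Act as a senior data scientist. "
    "Suggest three features to engineer from {{dataset_name}} "
    "to predict {{target_column}}."
)

prompt = render_prompt(TEMPLATE, {"dataset_name": "sales.csv", "target_column": "churn"})
print(prompt)
```

Raising an error on missing variables, instead of leaving `{{...}}` in the text, stops a half-filled prompt from reaching the model without anyone noticing.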

Additionally, enterprise-focused frameworks expand on these collections by introducing structure and consistency, which are crucial for integrating prompts into production systems.

Enterprise-Grade Prompt Frameworks

Enterprise-level libraries bring a higher degree of organization and precision to prompt engineering. They ensure outputs follow specific formats - whether it's Python code, SQL queries, or structured JSON - making it easier to integrate them into production workflows. Many of these libraries use Markdown headers or XML tags to clearly separate instructions, examples, and contextual data, helping models better interpret the structure.
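The sketch below illustrates the XML-tag convention by giving instructions, examples, and context their own tags and fixing the output format in the instructions. The tag names (`<instructions>`, `<examples>`, `<context>`) are an assumed convention. Models read them as plain text, not as validated XML.

```python
def build_structured_prompt(instructions: str, examples: list, context: str) -> str:
    """Wrap each part of the prompt in its own XML-style tag so the model can tell them apart."""
    example_blocks = "\n".join(
        f"<example>\n<input>{inp}</input>\n<output>{out}</output>\n</example>"
        for inp, out in examples
    )
    return (
        f"<instructions>\n{instructions}\n</instructions>\n"
        f"<examples>\n{example_blocks}\n</examples>\n"
        f"<context>\n{context}\n</context>"
    )


prompt = build_structured_prompt(
    "Write a PostgreSQL query that answers the question. Return only SQL.",
    [("Count all orders.", "SELECT COUNT(*) FROM orders;")],
    "Table orders(id INT, customer_id INT, total NUMERIC, created_at DATE)",
)
print(prompt)
```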

It's often more effective to use libraries that include few-shot learning examples (input-output pairs) rather than relying entirely on zero-shot instructions. Few-shot examples can significantly improve a model's ability to handle complex tasks. For processes requiring detailed reasoning, such as debugging or system design, Chain-of-Thought prompts are especially useful. These prompts guide the model through step-by-step logic, and many enterprise libraries incorporate this approach for better problem-solving.
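Here is a minimal sketch of a few-shot prompt with an optional Chain-of-Thought cue. It is written as a list of chat messages using the common `role`/`content` format. The example pairs and the exact wording of the reasoning cue are illustrative assumptions.

```python
def few_shot_messages(system: str, shots: list, query: str, chain_of_thought: bool = True) -> list:
    """Build chat messages: system prompt, then input/output example pairs, then the real query."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in shots:
        # Each example becomes a user turn followed by the ideal assistant answer.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    if chain_of_thought:
        # Ask for step-by-step reasoning before the answer (useful for debugging tasks).
        query += "\n\nThink through the problem step by step before giving the final answer."
    messages.append({"role": "user", "content": query})
    return messages


msgs = few_shot_messages(
    "You are a Python debugging assistant.",
    [
        ("TypeError: unsupported operand type(s) for +: 'int' and 'str'",
         "Cause: adding a number to a string. Fix: convert one side with str() or int()."),
        ("KeyError: 'id'", "Cause: the dict has no 'id' key. Fix: check the key or use dict.get('id')."),
    ],
    "IndexError: list index out of range",
)
```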

Long-context LLMs now accept context windows ranging from roughly 100,000 tokens up to 1 million tokens (GPT-4.1 supports up to 1 million). This lets you embed comprehensive reference material directly into a single prompt.

When deploying these libraries in professional environments, it’s wise to lock applications to specific prompt versions or model snapshots to maintain consistent performance as models evolve. Placing static content, like library instructions, at the start of prompts can also reduce costs by taking advantage of API-level caching. These strategies ensure seamless integration into production pipelines, aligning with best practices for prompt engineering.
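One lightweight way to "lock" a deployment is to record the pinned model snapshot together with a hash of the exact prompt text, then check both at startup. This minimal sketch assumes that approach. The `LOCK` record and `verify_lock` helper are hypothetical and not part of any provider's API.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Short, stable hash identifying one exact version of a prompt."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]


# Hypothetical lock record, e.g. committed alongside the application code.
SYSTEM_PROMPT = "You are a code reviewer. Report bugs as a JSON list."
LOCK = {"model": "gpt-4.1-2025-04-14", "prompt_sha": fingerprint(SYSTEM_PROMPT)}


def verify_lock(model: str, system_prompt: str, lock: dict) -> list:
    """Return a list of drift problems; an empty list means the deployment matches the lock."""
    problems = []
    if model != lock["model"]:
        problems.append(f"model {model!r} != pinned {lock['model']!r}")
    if fingerprint(system_prompt) != lock["prompt_sha"]:
        problems.append("system prompt changed since it was locked")
    return problems


print(verify_lock("gpt-4.1", SYSTEM_PROMPT, LOCK))  # flags the unpinned model alias
```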



2. Prompt Engineering Tools

When working with prompt libraries, having the right tools for prompt maintenance and testing can make a huge difference. These tools not only improve efficiency but also ensure your prompts perform reliably.

Dashboard-Based Prompt Management Systems

Modern platforms make prompts easier to manage by separating them from application code. Instead of embedding prompts directly in your application, you create reusable templates with variables like {{customer_name}} or {{dataset_path}}. The templates live in a web dashboard, and your code references each one by a unique ID, so you can update a prompt quickly and safely without touching the code. This approach simplifies updates and keeps prompts consistent across your system.
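To illustrate ID-based prompt management, this minimal sketch replaces the web dashboard with an in-memory registry. It stores versioned templates under a prompt ID and renders them with variables. The `PromptRegistry` class and the `pmpt_report_ready` ID are hypothetical stand-ins, not any vendor's API.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptRegistry:
    """In-memory stand-in for a dashboard-managed prompt store (hypothetical)."""
    versions: dict = field(default_factory=dict)  # prompt_id -> list of templates

    def publish(self, prompt_id: str, template: str) -> int:
        """Store a new template version and return its 1-based version number."""
        self.versions.setdefault(prompt_id, []).append(template)
        return len(self.versions[prompt_id])

    def render(self, prompt_id: str, variables: dict, version: Optional[int] = None) -> str:
        """Render the latest version, or a pinned one, filling {{name}} placeholders."""
        history = self.versions[prompt_id]
        template = history[-1] if version is None else history[version - 1]
        return re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: str(variables[m.group(1)]), template)


registry = PromptRegistry()
registry.publish("pmpt_report_ready", "Hello {{customer_name}}, your report on {{dataset_path}} is ready.")
registry.publish("pmpt_report_ready", "Hi {{customer_name}}! Results for {{dataset_path}} are attached.")
values = {"customer_name": "Ada", "dataset_path": "data/q3.csv"}
print(registry.render("pmpt_report_ready", values))             # latest version
print(registry.render("pmpt_report_ready", values, version=1))  # pinned older version
```

The application code only knows `"pmpt_report_ready"`. Editing the wording means publishing a new version, and callers that need stability can pin a version number.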

Another helpful feature is prompt caching. Providers cache identical prompt prefixes, so placing static instructions at the start of API requests lets repeated calls reuse that prefix. This reduces latency and the cost of repeated input tokens.
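Prefix-based caching can only reuse text that is identical from the first character onward. This minimal sketch makes that visible by measuring the shared prefix of two requests under two orderings. The instructions and questions are made up, and real providers also apply their own minimum prompt lengths before caching takes effect.

```python
import os

STATIC = "You are a SQL assistant. Always qualify column names and use ISO dates."
questions = ["Top 5 customers by revenue?", "Monthly active users in 2024?"]


def common_prefix_len(a: str, b: str) -> int:
    """Number of leading characters two prompts share (what a prefix cache could reuse)."""
    return len(os.path.commonprefix([a, b]))


static_first = [STATIC + "\nQuestion: " + q for q in questions]
dynamic_first = ["Question: " + q + "\n" + STATIC for q in questions]

print(common_prefix_len(*static_first))   # the whole static block is shared
print(common_prefix_len(*dynamic_first))  # only "Question: " is shared
```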

"Prompt engineering is the new coding. In a world increasingly driven by machine learning, the ability to communicate with AI-generated systems by using natural language is essential." - Vrunda Gadesha, AI Advocate, IBM

While managing prompts effectively is crucial, testing them systematically is just as important to maintain reliability.

Orchestration and Testing Frameworks

To take prompt reliability a step further, orchestration frameworks like LangChain and Mirascope, along with prompt-management platforms like PromptLayer, come into play. These tools bring software engineering principles into prompt design, allowing you to create modular and testable prompt chains. These chains can be integrated into MLOps pipelines for tasks like version control, A/B testing, and thorough evaluations.
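A/B testing prompts requires a stable assignment, so each user sees the same variant across requests. This minimal sketch assumes hash-based bucketing with a 50/50 split. It is a generic technique, not the API of LangChain, Mirascope, or PromptLayer, and the variant texts and experiment name are hypothetical.

```python
import hashlib

# Two hypothetical prompt variants under test.
VARIANTS = {
    "A": "Summarize the bug report in one sentence.",
    "B": "Summarize the bug report in one sentence, naming the failing component first.",
}


def assign_variant(user_id: str, experiment: str = "summary-prompt-v1") -> str:
    """Deterministically bucket a user into variant A or B (roughly 50/50)."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def prompt_for(user_id: str) -> str:
    """Look up the prompt text for the user's assigned variant."""
    return VARIANTS[assign_variant(user_id)]


print(assign_variant("user-42"), prompt_for("user-42"))
```

Including the experiment name in the hash means a new experiment reshuffles users independently of earlier ones.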

For added stability in production environments, it’s a good idea to pin your applications to specific model snapshots (e.g., gpt-4.1-2025-04-14). This prevents disruptions caused by updates to the underlying models. Additionally, using XML tags such as <user_query> or Markdown headers can help separate instructions from context, making it easier for models to interpret your prompts accurately. Breaking workflows into smaller, manageable steps - like data cleaning, feature engineering, and reporting - ensures each component is testable and can be reused in other projects.
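The sketch below splits the workflow into data cleaning, feature engineering, and reporting steps. Each step is a plain function from state to state, so it can be unit-tested on its own. The step logic, the threshold of 100, and the state layout are illustrative assumptions, and the final model call is omitted.

```python
PINNED_MODEL = "gpt-4.1-2025-04-14"  # snapshot the (omitted) reporting call would use


def clean_data(state: dict) -> dict:
    """Step 1: drop rows with a missing amount."""
    rows = [r for r in state["rows"] if r.get("amount") is not None]
    return {**state, "rows": rows}


def engineer_features(state: dict) -> dict:
    """Step 2: flag large transactions (the threshold of 100 is an arbitrary example)."""
    rows = [{**r, "is_large": r["amount"] > 100} for r in state["rows"]]
    return {**state, "rows": rows}


def build_report_prompt(state: dict) -> dict:
    """Step 3: turn the features into a tagged prompt for the reporting model."""
    n_large = sum(r["is_large"] for r in state["rows"])
    prompt = (
        f"<data_summary>{len(state['rows'])} rows, {n_large} large transactions</data_summary>\n"
        "Write a two-sentence report for a finance audience."
    )
    return {**state, "prompt": prompt, "model": PINNED_MODEL}


PIPELINE = [clean_data, engineer_features, build_report_prompt]


def run_pipeline(steps: list, state: dict) -> dict:
    """Apply each step in order, passing the updated state along."""
    for step in steps:
        state = step(state)
    return state


result = run_pipeline(PIPELINE, {"rows": [{"amount": 50}, {"amount": None}, {"amount": 250}]})
print(result["prompt"])
```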

3. Integration Frameworks

Once testing is complete, the next step is to connect your prompts with your existing systems. Integration frameworks act as a bridge, linking prompt engineering to practical applications. This makes it possible to deploy AI capabilities smoothly across your technology stack.

API-Based Prompt Management

OpenAI Reusable Prompts offers an efficient way to separate prompt logic from your application code. Instead of hardcoding instructions, you can manage prompts through a centralized dashboard using unique IDs. This setup allows you to use dynamic variables to adjust prompts without altering the code. Need to refine instructions, resolve issues, or tweak outputs? You can do all of that instantly without redeploying your application. For tech teams handling multiple applications, this approach saves time and minimizes the risk of introducing errors.
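Based on OpenAI's published example for reusable prompts, a request to the Responses API refers to a dashboard prompt through a `prompt` object containing an `id`, a `version`, and `variables`. This sketch only builds that request. The live call is commented out, and `pmpt_abc123` is a placeholder ID. Check the current API reference before relying on the exact field names.

```python
def reusable_prompt_request(prompt_id: str, version: str, variables: dict,
                            model: str = "gpt-4.1-2025-04-14") -> dict:
    """Build keyword arguments for a Responses API call that uses a stored prompt."""
    return {
        "model": model,
        "prompt": {"id": prompt_id, "version": version, "variables": variables},
    }


request = reusable_prompt_request("pmpt_abc123", "2", {"customer_name": "Jane Doe"})

# Live call (requires `pip install openai` and an API key):
# from openai import OpenAI
# client = OpenAI()
# response = client.responses.create(**request)
# print(response.output_text)
```

Because the application sends only an ID, a version, and variable values, changing the prompt wording in the dashboard does not require a redeploy.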

From here, think about how integrating external data can further enhance what your prompts can do.

Data-Augmented Prompt Architectures

Retrieval-Augmented Generation (RAG) frameworks take prompts to the next level by incorporating external or proprietary data during runtime. By linking prompts to tools like vector databases or file search systems, AI models can access up-to-date information that goes beyond their training data. This allows you to inject relevant context into prompts, enabling your applications to provide precise and reliable responses without having to retrain the models. Additionally, newer models now support context windows of up to one million tokens, giving you the flexibility to include extensive amounts of data.
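The retrieve-then-inject loop can be shown in a minimal sketch. Production RAG systems rank documents with embedding models and a vector database. Here, term-frequency cosine similarity stands in for embeddings so the example runs with the standard library alone. The documents are invented.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents standing in for a vector store.
DOCS = {
    "runbook-12": "Restart the ingestion worker if the Kafka consumer lag exceeds 10,000 messages.",
    "faq-3": "Expense reports are due on the fifth business day of each month.",
    "adr-7": "We store embeddings in a vector database and refresh them nightly.",
}


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing terms
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list:
    """Return the IDs of the k most similar documents."""
    q = vectorize(query)
    return sorted(DOCS, key=lambda d: cosine(q, vectorize(DOCS[d])), reverse=True)[:k]


def augment(query: str, k: int = 1) -> str:
    """Inject the retrieved text into the prompt at runtime; no retraining involved."""
    context = "\n".join(DOCS[d] for d in retrieve(query, k))
    return f"<context>\n{context}\n</context>\n<user_query>{query}</user_query>"


query = "What should I do when consumer lag is high on the ingestion worker?"
print(augment(query))
```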

4. Evaluation and Testing Platforms

After integrating prompts into your workflow, the next step is to verify their performance. Systematic evaluation plays a key role in ensuring reliability, especially in advanced prompt engineering. It's essential to confirm that the integrated prompts meet the performance benchmarks required for technical tasks.

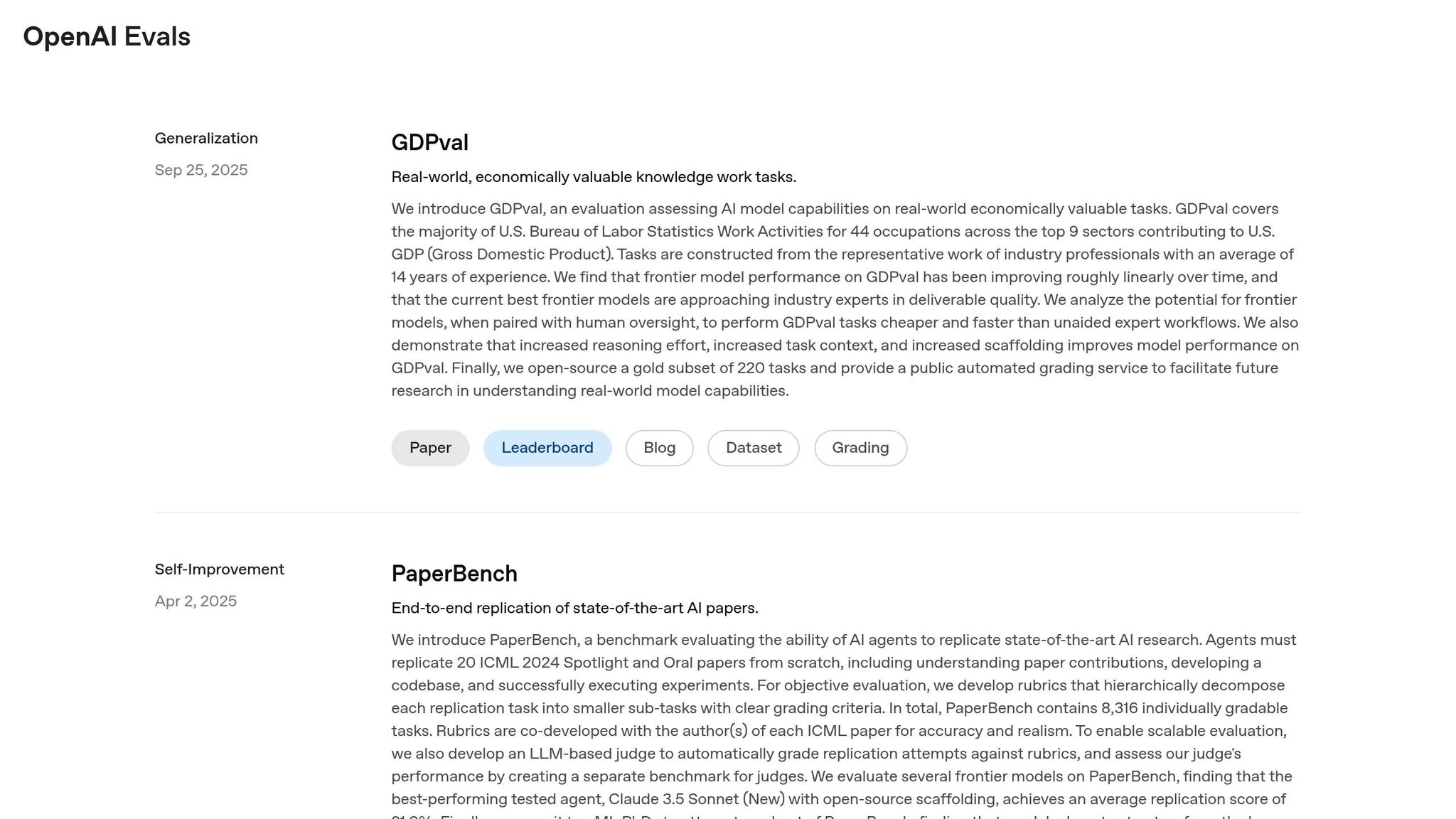

OpenAI Evals: A Framework for Benchmark Testing

OpenAI Evals provides an open-source framework and benchmark registry for testing how well models perform in areas like reasoning and coding accuracy. It also lets you build private, custom evals from your own data. One standout feature is its "model-graded" evaluations, where one large language model (LLM) assesses another's outputs against predefined criteria written in YAML files. This approach reduces the amount of code needed to evaluate qualitative aspects of a model's performance.
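The following generic sketch shows the idea behind model-graded evaluation: a grader model receives the question, a reference answer, and the submission, and returns a verdict. This is not the OpenAI Evals API. The `complete` and `grade` callables are hypothetical, and the stubs below replace real model calls so the example runs offline.

```python
GRADER_TEMPLATE = (
    "You are grading an answer.\n"
    "Question: {question}\n"
    "Reference: {ideal}\n"
    "Submission: {completion}\n"
    "Reply with exactly one word: CORRECT or INCORRECT."
)


def model_graded_accuracy(samples: list, complete, grade) -> float:
    """complete: model under test; grade: grader model. Both map a prompt string to a reply string."""
    passed = 0
    for s in samples:
        completion = complete(s["question"])
        verdict = grade(GRADER_TEMPLATE.format(question=s["question"], ideal=s["ideal"], completion=completion))
        passed += verdict.strip().upper() == "CORRECT"
    return passed / len(samples)


# Offline stubs standing in for real LLM calls.
def fake_model(question: str) -> str:
    return "Paris" if "France" in question else "Berlin"


def fake_grader(prompt: str) -> str:
    ideal = prompt.split("Reference: ")[1].split("\n")[0]
    submission = prompt.split("Submission: ")[1].split("\n")[0]
    return "CORRECT" if ideal.lower() in submission.lower() else "INCORRECT"


samples = [
    {"question": "Capital of France?", "ideal": "Paris"},
    {"question": "Capital of Italy?", "ideal": "Rome"},
]
print(model_graded_accuracy(samples, fake_model, fake_grader))
```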

"If you are building with LLMs, creating high quality evals is one of the most impactful things you can do." - OpenAI

Careful evaluation is also what makes advances in prompting measurable. In November 2023, Microsoft researchers introduced Medprompt, a prompting strategy for GPT-4 that combines dynamic few-shot selection, self-generated chain-of-thought reasoning, and choice-shuffle (majority-vote) ensembling. On medical benchmarks such as MedQA, it let GPT-4, a general-purpose model, outperform models fine-tuned specifically for medical knowledge. In December 2023, an extended version of the method (Medprompt+) reached 90.10% on the MMLU benchmark. These results show how advanced prompting, measured with rigorous benchmarks, can narrow the gap between generalist and specialist AI systems.
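The ensembling part of Medprompt can be sketched as follows: shuffle the answer options on each run, map the model's pick back to the original option, and take a majority vote. Repeating the question with different option orders reduces position bias. This simplified sketch omits dynamic few-shot selection and self-generated chain-of-thought. The `ask` callable is a hypothetical stand-in for a model call, stubbed here so the code runs offline.

```python
import random
from collections import Counter


def choice_shuffle_vote(question: str, options: list, ask, runs: int = 5, seed: int = 0) -> int:
    """Return the original index of the option chosen most often across shuffled runs.

    ask(question, shuffled_options) must return the index the model picked in the shuffled list.
    """
    rng = random.Random(seed)
    votes = Counter()
    for _ in range(runs):
        order = list(range(len(options)))
        rng.shuffle(order)                     # order[i] = original index shown at position i
        shuffled = [options[i] for i in order]
        picked = ask(question, shuffled)
        votes[order[picked]] += 1              # map the shuffled position back to the original option
    return votes.most_common(1)[0][0]


options = ["Insulin", "Metformin", "Aspirin", "Warfarin"]
# Stub "model" that recognizes the answer regardless of where it appears.
stub_ask = lambda q, opts: opts.index("Metformin")

winner = choice_shuffle_vote("First-line oral drug for type 2 diabetes?", options, stub_ask)
print(options[winner])
```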

The framework also supports advanced architectures, such as prompt chains and tool-using agents, and can log evaluation results directly to external databases. This makes it easier to track and compare model versions and prompt iterations over time, which supports the ongoing refinement that technical workflows require.
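To show why logging results to a database helps, this minimal sketch uses SQLite from the standard library as a stand-in for an external database. It stores one row per eval run and then queries for the prompt version with the best mean score for a pinned model. The table layout is an assumption, and the scores are placeholder numbers, not real results.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE eval_runs (eval_name TEXT, model TEXT, prompt_version TEXT, score REAL)")

# Placeholder scores for illustration only.
conn.executemany(
    "INSERT INTO eval_runs VALUES (?, ?, ?, ?)",
    [
        ("sql-gen", "gpt-4.1-2025-04-14", "v1", 0.72),
        ("sql-gen", "gpt-4.1-2025-04-14", "v2", 0.81),
        ("sql-gen", "gpt-4.1-2025-04-14", "v2", 0.79),
    ],
)


def best_version(conn: sqlite3.Connection, eval_name: str, model: str):
    """Return (prompt_version, mean_score) with the highest average score for one eval and model."""
    return conn.execute(
        "SELECT prompt_version, AVG(score) AS mean_score FROM eval_runs "
        "WHERE eval_name = ? AND model = ? "
        "GROUP BY prompt_version ORDER BY mean_score DESC LIMIT 1",
        (eval_name, model),
    ).fetchone()


print(best_version(conn, "sql-gen", "gpt-4.1-2025-04-14"))
```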

Conclusion

In the world of AI systems, advanced prompt resources have become a must-have for technical professionals. The gap between basic prompting and well-crafted prompt engineering can decide whether a general-purpose model delivers average results or performs like a specialist. For instance, an extended version of Microsoft's Medprompt (Medprompt+) lifted GPT-4 to a 90.10% score on the MMLU benchmark.

The current technical landscape emphasizes reproducibility and scalability. Some commercial platforms now advertise libraries of over 30,000 structured prompts with lifetime updates, alongside tools for versioning prompts independently of application code. This allows teams to tweak prompt logic without redeploying entire systems. Some industry reports claim that professional prompt tools can cut content creation time by up to 80%.

As AI technologies grow more sophisticated, refining prompt engineering strategies is critical. Using prompt libraries, specialized tools, and integration frameworks helps technical teams stay ahead. Different AI models require tailored approaches: reasoning models work best with high-level goals, while GPT models benefit from clear, step-by-step instructions. Professionals should also pin specific model snapshots and build evaluation frameworks to track performance over time. Techniques like prompt caching, structuring prompts with XML tags, and dynamic few-shot selection are among the best practices for cutting costs while boosting accuracy.

Mastering prompt engineering is an ongoing process. It’s often described as a "mix of art and science", where even minor adjustments in phrasing or structure can lead to significant improvements. As the field advances rapidly, systematic experimentation and refinement are key. Those who dedicate time to exploring prompt libraries, testing evaluation methods, and honing their strategies will remain at the forefront as AI capabilities continue to expand.

FAQs

How can prompt libraries improve technical workflows?

Prompt libraries are a game-changer for technical users, offering ready-made, reusable prompts that cut down the time and hassle of creating and troubleshooting prompts from scratch. For developers and data scientists, these libraries make tasks like data cleaning, exploratory analysis, and feature engineering more efficient, freeing up time to tackle more complex challenges.

With their structured and consistent prompts, these libraries help ensure reliable results while minimizing errors, such as irrelevant or incorrect outputs. They also reduce the rework involved in switching between AI models like GPT-4 and Claude, though prompts often still need some per-model tuning.

In short, prompt libraries boost productivity, encourage effective prompt engineering practices, and enable technical teams to focus their energy on solving tough problems more effectively.

How do integration frameworks improve the performance of AI models?

Integration frameworks take large language models (LLMs) to the next level by connecting them to external tools, data sources, and systems. This transforms LLMs from simple text generators into versatile tools capable of solving complex problems. With features like real-time data access, function execution, and automated workflows, these frameworks help minimize errors, boost relevance, and improve the accuracy of results for tasks such as data analysis or code generation.
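Function execution usually works like this: the model returns a tool name and a JSON string of arguments, and your code runs the matching function and sends the result back. This minimal sketch shows only the dispatch step. The `get_row_count` tool and its counts are hypothetical, and the model round-trip is omitted.

```python
import json


def get_row_count(table: str) -> int:
    """Hypothetical tool; a real one would query the database."""
    fake_counts = {"orders": 1200, "customers": 310}
    return fake_counts.get(table, 0)


# Only functions registered here can be invoked by the model.
TOOLS = {"get_row_count": get_row_count}


def execute_tool_call(name: str, arguments_json: str) -> str:
    """Run a model-requested tool and return a JSON string to send back to the model."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool {name}"})
    args = json.loads(arguments_json)
    return json.dumps({"result": TOOLS[name](**args)})


print(execute_tool_call("get_row_count", '{"table": "orders"}'))
```

An explicit allow-list such as `TOOLS` keeps the model from calling arbitrary code. Unknown tool names are reported back as errors rather than raised.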

The integration framework from God of Prompt streamlines this process with reusable modules that handle essential tasks like authentication, rate-limiting, and response formatting. This setup allows developers to focus on creating solutions - like AI-driven assistants or automated workflows - while the framework ensures access to current data and consistent performance. By automating repetitive tasks and offering a dependable structure, these frameworks enable technical users to deliver faster, scalable, and production-ready solutions.

What is Retrieval-Augmented Generation (RAG) and how does it enhance AI responses?

Retrieval-Augmented Generation (RAG) is a cutting-edge AI technique that pairs a large language model (LLM) with a retrieval system. This setup allows the AI to pull in external knowledge - like documents, code snippets, or the latest data - before crafting a response. By tapping into this external information, RAG helps the AI deliver more precise and contextually relevant answers without requiring the model itself to be retrained.

Here’s the basic idea: A retrieval system first scours a curated database to find the most relevant information based on the user’s query. This data is then combined with the original prompt and fed into the LLM. The model uses this enriched input to generate its response. What’s great about this approach is that it not only boosts accuracy but also enables the AI to cite its sources. This transparency makes it easier for users to double-check the information. RAG is especially useful for tackling complex technical issues and ensuring responses remain up-to-date and dependable.
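The "combine with the prompt and cite sources" step can be sketched as follows: number the retrieved passages, ask the model to cite them as [1], [2], and map the citations in its answer back to source IDs. The passages and the answer string are invented, and the retrieval and model calls are omitted.

```python
import re


def build_cited_prompt(query: str, passages: list) -> str:
    """passages: list of (source_id, text) pairs already returned by a retriever."""
    numbered = "\n".join(f"[{i}] ({src}) {text}" for i, (src, text) in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them by number, e.g. [1].\n"
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{numbered}\n\nQuestion: {query}"
    )


def cited_sources(answer: str, passages: list) -> list:
    """Map [n] citations in the model's answer back to source IDs so users can verify them."""
    ids = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    return [passages[i - 1][0] for i in sorted(ids) if 1 <= i <= len(passages)]


passages = [
    ("deploy-guide.md", "Deploys run through the CI pipeline on merge to main."),
    ("security-policy.md", "All external endpoints must use TLS 1.3."),
]
prompt = build_cited_prompt("Which TLS version must external endpoints use?", passages)
print(cited_sources("External endpoints must use TLS 1.3 [2].", passages))
```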