AI Speech Translation Tools for Businesses

AI speech translation tools are transforming how businesses communicate across languages. These tools transcribe, translate, and deliver speech in near real-time, enabling multilingual conversations during video calls, customer support, and meetings. By reducing reliance on interpreters, businesses save costs - up to $172 per language per meeting - and expand access to global talent and markets. The fastest systems report delays as low as 200–300 ms, while full speech-to-speech translation typically lands at 1–2 seconds, which is short enough for conversations to feel natural and uninterrupted.

Key takeaways:

- Real-time translation: Supports over 120 languages with 1–2 second delays.

- Cost savings: Eliminates recurring interpreter fees.

- Global reach: Simplifies hiring and customer service across borders.

- Technology options: Choose between cascaded (ASR-NMT-TTS) and end-to-end models for speed and accuracy.

For businesses, selecting the right tool depends on factors like latency, language support, and integration capabilities. Tools like Microsoft Azure Speech, Google Cloud Translation, and DeepL cater to various needs, from live meetings to marketing localization. Advanced customization options, including glossaries and domain-specific tuning, ensure consistent results across industries.

How AI Speech Translation Works

AI speech translation relies on three key technical components that work together to transform spoken words from one language into another. These systems can operate as separate modules in a cascaded pipeline or as a unified end-to-end model. The choice between these architectures impacts speed, accuracy, and cost, making it a crucial consideration for businesses.

The backbone of AI speech translation includes Automatic Speech Recognition (ASR), Neural Machine Translation (NMT), and Text-to-Speech (TTS). These components are interconnected, and their performance directly affects the system's overall output.

Cascaded vs. End-to-End Models

The traditional cascaded approach links ASR, NMT, and TTS in sequence. ASR converts speech into text by recognizing phonemes and predicting word sequences. NMT then translates the text into the desired language, and TTS transforms the translated text back into audio. While this method is straightforward and allows flexibility in swapping components, it often suffers from error accumulation at each stage and has a typical delay of 4–5 seconds.
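
To make the cascaded design concrete, here is a minimal sketch of three stages chained in sequence. The stage functions are hypothetical stubs (plain strings stand in for audio and text), and the per-stage delays are illustrative assumptions rather than measurements from any vendor. The point is the shape of the pipeline and how delays add up from stage to stage.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    run: Callable[[str], str]  # hypothetical stand-in for a real ASR/NMT/TTS call
    latency_s: float           # assumed per-stage delay, for illustration only


def run_cascade(stages: List[Stage], payload: str) -> Tuple[str, float]:
    """Pass the payload through each stage in order and sum the delays."""
    total_latency = 0.0
    for stage in stages:
        payload = stage.run(payload)
        total_latency += stage.latency_s
    return payload, total_latency


# Toy stages: each one only rewrites a string prefix or a single word.
pipeline = [
    Stage("asr", lambda audio: audio.replace("audio:", "text:"), 1.5),
    Stage("nmt", lambda text: text.replace("hello", "hola"), 1.0),
    Stage("tts", lambda text: text.replace("text:", "audio:"), 1.5),
]

output, delay = run_cascade(pipeline, "audio:hello")
print(output, delay)
```

Because each stage consumes the previous stage's output, a mistake made by the first stage is passed along unchanged, which is the error accumulation described above. Swapping one component means replacing a single `Stage` entry.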

Modern end-to-end systems, like Meta's SeamlessM4T, bypass intermediate text steps entirely. These systems use a single encoder-decoder architecture to translate speech directly into speech. This reduces latency to about 2 seconds, minimizes errors, and performs better in noisy environments or with varying speaker accents.

Core Components of AI Speech Translation

ASR Technology: ASR has advanced to end-to-end neural networks that map audio waveforms directly to text. These systems now achieve Word Error Rates under 5%, nearing human-level accuracy. For instance, Deepgram's Nova-3 model reduced word error rates for streaming audio by 54.2% compared to older systems.
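
Word Error Rate is usually computed as the word-level edit distance between a reference transcript and the ASR output, divided by the number of reference words. The sketch below assumes simple lowercase, whitespace-based tokenization; production scoring tools normally apply more careful text normalization first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the quick brown fox", "the quick fox"))
```

One dropped word out of four reference words gives a WER of 0.25, or 25%.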

The ASR process involves four steps:

- Audio preprocessing: Cleans and normalizes the input.

- Neural network processing: Analyzes audio patterns.

- Language modeling: Ensures grammatical and linguistic coherence.

- Post-processing: Adds punctuation and formats the text.

Handling challenges like poor microphone quality, overlapping conversations, and dialects is critical for business settings.

"ASR converts spoken audio into written text, while NLP analyzes that text to understand meaning, intent, and sentiment. Think of ASR as the ears and NLP as the brain." - Kelsey Foster, Growth, AssemblyAI

NMT Systems: Neural Machine Translation works by using language-agnostic encoders like SONAR to create a universal mathematical representation of meaning. This enables the system to handle multiple languages effectively. To meet real-time demands, modern NMT employs "prefix-to-prefix" translation, starting the translation process before the speaker finishes their sentence. Unified speech-to-speech systems outperform traditional cascaded setups, achieving up to 23% higher BLEU scores.
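
One well-known prefix-to-prefix scheduling policy is "wait-k": the translator reads k source words, emits one target word, and then alternates between reading and writing. The sketch below only generates that read/write schedule and does not translate anything. It is an illustration of the general idea, not a description of how any product named in this article schedules its output.

```python
from typing import List, Tuple


def wait_k_schedule(source_len: int, target_len: int, k: int) -> List[Tuple[str, int]]:
    """Return the READ/WRITE action sequence for a wait-k policy.

    Each entry is ("READ", i) for consuming source word i, or
    ("WRITE", j) for emitting target word j (both 1-based).
    """
    if k < 1:
        raise ValueError("k must be at least 1")

    actions: List[Tuple[str, int]] = []
    read = written = 0
    while written < target_len:
        # Before emitting the next target word, the policy needs k more source
        # words than it has written so far, capped at the source length.
        needed = min(k + written, source_len)
        while read < needed:
            read += 1
            actions.append(("READ", read))
        written += 1
        actions.append(("WRITE", written))
    return actions


for action in wait_k_schedule(source_len=3, target_len=3, k=2):
    print(action)
```

A smaller k starts output sooner at the cost of less context per decision, which is the same quality-versus-delay trade-off that governs real-time translation in general.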

TTS Components: Text-to-Speech systems have evolved to produce natural-sounding voices that retain the speaker's tone, pitch, and emotion. These systems use residual vector quantization (RVQ) audio tokens to prioritize frequencies that humans perceive most clearly. For example, Deepgram's Aura-2 achieves a latency of under 200 milliseconds for its initial response.

In 2025, Google introduced an end-to-end speech-to-speech model for Google Meet. This system uses a streaming architecture and the SpectroStream codec, offering real-time translation with a 2-second delay for five Latin-based language pairs while maintaining the original speaker's voice.

| Component | Technical Function | Key Technologies Used |
|---|---|---|
| ASR | Converts audio waveforms to text | Conformer-Transducer, CTC Alignment, Whisper |
| NMT | Translates source text to target text | Transformers, NLLB, M2M, LLMs |
| TTS | Synthesizes text into spoken audio | Azure Neural TTS, ElevenLabs, SpectroStream |
| Quality Layer | Ensures domain-specific accuracy | RAG, LoRA Adapters, Constrained Decoding |

Customizing AI Models for Business Applications

Generic AI models often struggle with specialized terminology in fields like law, medicine, or technology. Businesses can improve translation accuracy using three main techniques: Retrieval-Augmented Generation (RAG) lexicons, Low-Rank Adaptation (LoRA), and Mixture of Experts (MoE).

- RAG glossaries: Maintain a database of industry-specific terms, ensuring accurate translations for rare or technical words.

- LoRA adapters: Fine-tune models for regional dialects or accents without requiring a full retraining.

- MoE architectures: Activate specialized sub-models for specific language pairs.
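
As a rough illustration of the glossary idea, the sketch below looks up which glossary terms appear in a source sentence so that their approved translations can be handed to the translation step. The function name, the glossary entries, and the sample sentences are all hypothetical, and a production RAG setup would typically use a proper retrieval index rather than a regex scan.

```python
import re
from typing import Dict, List, Tuple


def retrieve_glossary_terms(source_text: str, glossary: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (source term, approved translation) pairs found in the text.

    Matching is case-insensitive and respects word boundaries, so "lien"
    does not match inside "client". Longer terms are listed first.
    """
    hits = []
    for term, approved in glossary.items():
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, source_text, flags=re.IGNORECASE):
            hits.append((term, approved))
    return sorted(hits, key=lambda pair: (-len(pair[0]), pair[0]))


# Hypothetical English-to-Spanish legal glossary, for illustration only.
legal_glossary = {
    "force majeure": "fuerza mayor",
    "lien": "gravamen",
}

print(retrieve_glossary_terms("The lien survives a force majeure event.", legal_glossary))
print(retrieve_glossary_terms("The client signed yesterday.", legal_glossary))
```

The retrieved pairs can then be passed to the translation model as required terminology, which is how a glossary keeps rare or technical words consistent.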

An example of tailored deployment is Plavno's Project Khutba, launched in 2025. This real-time prayer translation system for mosques uses a modular ASR-NMT-TTS pipeline, supporting over 1,000 listeners with a latency under 550 milliseconds. It also incorporates RAG lexicons to handle religious terminology accurately.

For multi-speaker environments like boardrooms, features such as Voice Activity Detection (VAD) and speaker diarization are essential. VAD identifies when speech occurs, while diarization assigns speech to individual speakers, preventing confusion in context or attribution.

To optimize performance for live events, businesses should carefully configure the model's "lookahead" parameter. A longer lookahead improves translation quality but increases delay. For minimal latency, tools using WebRTC or WebSocket streaming can maintain a Real-Time Factor (RTF) below 1, ensuring translations stay in sync with the speaker.
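
Real-Time Factor is simply processing time divided by the duration of the audio processed. A minimal sketch, with hypothetical function names, shows how to check whether a pipeline keeps pace with the speaker:

```python
def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """RTF = time spent processing / duration of the audio processed."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds


def keeps_up_with_speaker(processing_seconds: float, audio_seconds: float) -> bool:
    """An RTF below 1 means audio is processed faster than it is spoken."""
    return real_time_factor(processing_seconds, audio_seconds) < 1.0


print(real_time_factor(1.5, 3.0))       # 3 s of audio handled in 1.5 s
print(keeps_up_with_speaker(4.0, 3.0))  # processing slower than speech
```

If the RTF stays at or above 1, the backlog grows for as long as the speaker keeps talking, so translations drift further behind over time.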

Business Applications of AI Speech Translation

AI speech translation is transforming how businesses handle live multilingual meetings, adapt marketing strategies, and deliver employee training on a global scale.

Real-Time Multilingual Communication

Businesses are using advanced AI technologies like ASR (Automatic Speech Recognition), NMT (Neural Machine Translation), and TTS (Text-to-Speech) for real-time multilingual interactions. These tools support in-person meetings, video calls, and broadcasts such as webinars, offering simultaneous captions and even AI-generated voice synthesis in over 120 languages and dialects with minimal latency (1–2 seconds).

What makes these systems so effective is their ability to translate mid-sentence, keeping conversations natural and uninterrupted - crucial for negotiations or team discussions. Custom glossaries ensure accurate translations of technical terms, industry jargon, and brand names.

| Interaction Mode | Primary Business Use Case | Key Delivery Method |
|---|---|---|
| In-Person | Consultations, help desks, press events | Shared device translation, live captions |
| Video Call | Team meetings, remote consultations | Integrated language options for participants |
| Broadcast | Webinars, public announcements | Streaming with multilingual support |

To optimize results, businesses should use high-quality microphones for clear input and pre-configure language options before sessions begin. Participants can independently control captions and audio output, tailoring the experience to their needs.

But AI translation isn't just about live interactions - it’s also revolutionizing marketing strategies.

Marketing Campaign Localization

AI tools are enabling businesses to translate marketing copy into more than 140 languages, opening doors to new regional markets. By converting spoken content into text, businesses improve search engine visibility, making it easier for global audiences to discover their audio or video materials. For example, modern systems like SeamlessM4T outperform traditional methods, achieving up to 23% better BLEU scores in speech-to-speech translation.

These tools also offer localized audio with minimal delay (as little as 2 seconds) and even avatar-based content delivery in multiple languages. Voice preservation technology ensures that the speaker’s unique tone and personality remain intact, making translated messages feel genuine and relatable.

Custom glossaries safeguard brand-specific language, and for critical campaigns, pairing AI translations with human review ensures that cultural and contextual nuances are captured accurately.

AI translation is also making waves in the realm of employee training and education.

Training and Educational Content

AI speech translation is transforming how global companies deliver training materials. During webinars, voice-to-voice translation allows participants to speak naturally while others hear the content in their preferred language. AI-powered video generators can create multilingual training videos using avatars, cutting the need for costly production teams.

A growing number of Learning and Development professionals - 71%, to be exact - are already incorporating AI into their work. These tools drastically reduce the time required to develop training courses, with some estimates suggesting an 80-hour course can now be created in a fraction of that time. Voice cloning even makes it possible for training materials to feature the voices of key company figures, like a CEO or HR leader, across multiple languages.

"AI-powered video transcription is changing how we convert speech to text. It boosts accessibility, helps with video localisation, and expands global reach." - Nick Warner, Author, HeyGen

Automated transcription and live captions also improve accessibility for hearing-impaired learners or those who prefer reading over listening. With 60% of the global workforce expected to need reskilling by 2030, AI tools allow businesses to scale their training efforts efficiently without significantly increasing costs or complexity.

For the best outcomes, companies should use custom glossaries, high-quality recording equipment, and pre-select language pairs before broadcasts.

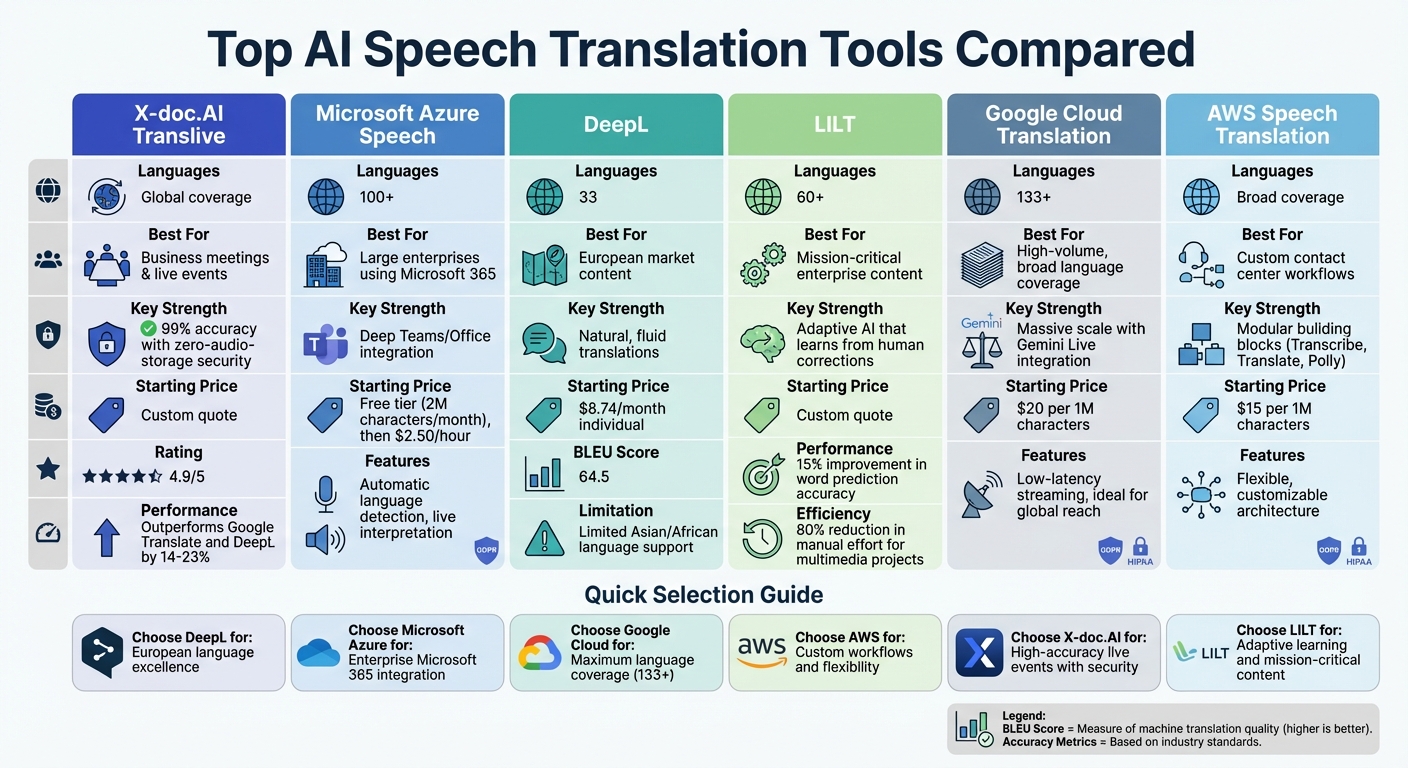

Top AI Speech Translation Tools Compared

AI Speech Translation Tools Comparison: Features, Languages, and Pricing

There are plenty of AI speech translation tools out there, but not all of them meet the unique demands of businesses. Whether you need real-time translations for meetings, accurate document translations, or customizable workflows via APIs, finding the right tool depends on your priorities.

Feature Comparison Table

Here’s a breakdown of some leading AI speech translation tools, highlighting their strengths and ideal use cases:

| Tool | Languages | Best For | Key Strength | Starting Price |
|---|---|---|---|---|
| X-doc.AI Translive | Global | Business meetings & live events | 99% accuracy with zero-audio-storage security | Custom quote |
| Microsoft Azure Speech | 100+ | Large enterprises using Microsoft 365 | Deep Teams/Office integration | Free tier (2M characters/month), then $2.50/hour |
| DeepL | 33 | European market content | Natural, fluid translations (BLEU score: 64.5) | $8.74/month individual |
| LILT | 60+ | Mission-critical enterprise content | Adaptive AI that learns from human corrections | Custom quote |
| Google Cloud Translation | 133+ | High-volume, broad language coverage | Massive scale with Gemini Live integration | $20 per 1M characters |
| AWS Speech Translation | Broad | Custom contact center workflows | Modular building blocks (Transcribe, Translate, Polly) | $15 per 1M characters |

X-doc.AI Translive stands out by claiming its optimized voice models outperform both Google Translate and DeepL by 14–23%. Additionally, X-doc.AI's zero-audio-storage promise and LILT's enterprise-grade features make them strong options for businesses handling sensitive data under GDPR compliance.

Below is a closer look at each tool’s strengths and limitations to help you decide which one aligns best with your business needs.

Pros and Cons of Each Tool

DeepL is a standout for European languages, delivering smooth, natural translations that preserve document formatting. However, its language support - 33 languages - falls short for businesses needing coverage in Asian or African languages. This makes it an excellent choice for companies focused on European markets but less ideal for global operations.

"AI translation tools bridge those gaps. These advanced systems don't just translate word for word - they interpret context, capture nuance, and account for industry-specific terminology."

Microsoft Azure Speech is a go-to for enterprises already using Teams and Office 365. It offers automatic language detection and live interpretation, maintaining tone and context. The free tier is generous, covering up to 2 million characters monthly, but costs can escalate quickly for larger-scale operations. This tool is perfect for real-time multilingual meetings without requiring extra software.

LILT takes a unique approach with its "Contextual AI Engine", which adapts to human corrections, improving word prediction accuracy by 15%. Its human-in-the-loop model reduces the need for manual effort by up to 80% in multimedia projects. However, the tool demands a more complex setup and higher upfront costs, making it best suited for enterprises with high-stakes content needs.

Google Cloud Translation leads the pack in language coverage, supporting 133+ languages with low-latency streaming. Its pricing - $20 per million characters - is competitive for businesses managing large-scale translations. This tool is ideal for developers and companies looking to reach global markets efficiently.

X-doc.AI Translive shines in real-time meeting interpretation and file-based translations, boasting 99% accuracy and a "long-term memory" feature for custom terminology. Despite its high performance (rated 4.9/5), it’s a newer player with fewer user reviews. This tool is a reliable choice for businesses that need consistent accuracy in live events or meetings.

AWS Speech Translation offers unmatched flexibility with its modular design, letting businesses create custom workflows for contact centers or other specialized needs. At $15 per million characters, it’s a cost-effective option for organizations requiring tailored solutions. This modular approach is ideal for businesses with unique operational demands.

Choosing the Right Tool for Your Business

Selecting an AI speech translation tool that aligns with your business needs is critical. A solution tailored for a global contact center might be excessive for a small marketing team, while a budget-friendly tool could fall short for enterprises managing sensitive financial data. Your choice should reflect your operational priorities and future plans. Here's how to ensure your selection meets your needs.

Key Selection Criteria

Accuracy and contextual understanding are top priorities. These factors help ensure the tool captures tone, nuance, and meaning effectively. For instance, KUDO AI achieved a 4.25 out of 5 accuracy score in blind linguist tests.

"Instead of providing just one number that can vary greatly depending on the language combinations, conditions, etc, we recommend trying out the system. By testing it with your content in realistic conditions, you can see exactly how well it works for you." - Alexander Davydov, Head of AI Delivery at Interprefy

Latency also plays a key role. Aim for end-to-end latency under 500 milliseconds - ideally below 300 milliseconds - for natural conversations. For text-to-speech synthesis, a time-to-first-byte under 200 milliseconds is ideal.

While broad language coverage is appealing, the quality of translations in your target languages should take precedence. Confirm service-level agreements (SLAs) for each language to avoid hidden performance issues.

Compliance is another critical factor. If your business operates under regulations like HIPAA or GDPR, ensure the tool supports secure deployments such as on-premises, virtual private cloud (VPC), or air-gapped environments. Providers like Microsoft Azure Speech and AWS offer these options, while some newer tools are limited to cloud-only setups. X-doc.AI, for example, guarantees zero-audio-storage to address compliance concerns.

Integration capabilities are essential for seamless operation. Look for tools that support custom glossaries and offer robust SDKs, REST APIs, and WebSocket support for real-time audio processing. Unified speech-to-speech models simplify integration compared to systems requiring multiple service endpoints.

Pricing transparency is equally important. Avoid hidden costs across automatic speech recognition (ASR), machine translation (MT), and text-to-speech (TTS) services. Usage-based models, like Deepgram's per-token pricing, are predictable during traffic spikes, while subscription bundles may offer discounts for consistent use. Estimate your monthly usage to evaluate which pricing model works best for your needs.
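
For per-character pricing, a quick estimate is just volume times rate. The sketch below uses the two per-million-character rates quoted in the comparison table; the monthly volume of 5 million characters is an assumed figure for illustration, and real bills may add separate ASR and TTS charges.

```python
def monthly_translation_cost(characters_per_month: int, price_per_million_chars: float) -> float:
    """Estimate the monthly spend for a per-character pricing model."""
    if characters_per_month < 0:
        raise ValueError("characters_per_month cannot be negative")
    return characters_per_month / 1_000_000 * price_per_million_chars


assumed_volume = 5_000_000  # hypothetical monthly character count

print(monthly_translation_cost(assumed_volume, 20.0))  # at $20 per 1M characters
print(monthly_translation_cost(assumed_volume, 15.0))  # at $15 per 1M characters
```

Running the same estimate with your own expected volume makes it easier to compare usage-based pricing against a flat subscription.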

Scalability and Future Growth

Your chosen tool should not only meet your current requirements but also scale with your business. Test scalability by running pilots with at least 100 concurrent calls to assess quality under load. Real-world testing is crucial - background noise, such as a 0 dB signal-to-noise ratio, can increase Word Error Rate by 57% to 149% compared to clean lab conditions. Use realistic audio samples, including phone-quality recordings and regional accents, to evaluate performance.

As your business evolves, domain adaptation becomes vital. Tools that can quickly train custom models on industry-specific terminology provide greater flexibility. For example, LILT's "Contextual AI Engine" improves word prediction accuracy by 15% through learning from human corrections.

Global operations may require code-switching capabilities. Unified multilingual models handle intra-sentential switching more effectively than systems that route to language-specific models, often achieving sub-300 millisecond latency. Additionally, check whether the tool supports scenarios where multiple languages are spoken in the same session, known as "multilingual floor" scenarios.

Lastly, consider the provider's track record. For example, Interprefy AI won "Best Use of AI Technology" at The Event Technology Awards 2023, and KUDO AI demonstrated an 84% accuracy improvement in a single engine upgrade. Opt for providers committed to continuous innovation rather than static solutions.

Implementation Best Practices

Getting AI speech translation tools up and running successfully requires building autonomous AI workflows, thoughtful setup, rigorous quality checks, and ongoing fine-tuning.

System Integration Methods

Most AI speech translation platforms connect to existing systems using REST APIs, SDKs, or WebSocket streams. For real-time use cases like customer support or live meetings, bidirectional streaming is essential. This setup allows the system to process audio as the speaker talks, minimizing the lag that can make conversations feel unnatural. Persistent socket connections are key to achieving this seamless interaction.

The quality of your audio input plays a huge role in translation accuracy. High-quality microphones are a must. Research shows that phone-quality audio at 8 kHz can reduce accuracy by 15-30% compared to high-fidelity recordings. Add background noise to the mix, and things get even trickier - at a 0 dB signal-to-noise ratio, the Japanese Word Error Rate can spike from 4.8% to 11.9%.
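
To put the Japanese figures in relative terms, the change from 4.8% to 11.9% can be expressed as a percentage increase over the clean-audio baseline. A small sketch of that arithmetic:

```python
def relative_increase_percent(baseline: float, degraded: float) -> float:
    """Percentage increase of the degraded value over the baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (degraded - baseline) / baseline * 100


increase = relative_increase_percent(4.8, 11.9)
print(round(increase, 1))
```

The result is roughly 148%, which sits at the top of the 57% to 149% range cited earlier for noisy conditions.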

"The real challenge in production voice AI is handling conditions where theory breaks down. Users typically do not speak in quiet rooms with neutral accents." - Bridget McGillivray, Deepgram

For streaming applications, adjust your audio buffer sizes to fit the scenario. Smaller chunks, around 160 milliseconds, are ideal for real-time conversations, while larger chunks, about 800 milliseconds, work better for batch processing where speed isn't as critical. Hosting your system on-premises or in a Virtual Private Cloud (VPC) ensures compliance with strict industry regulations.
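
Chunk durations translate into byte counts once the audio format is fixed. The sketch below assumes 16 kHz, 16-bit, mono PCM, a common format for speech APIs, but check what your provider expects. The function names are hypothetical.

```python
from typing import Iterator


def chunk_size_bytes(chunk_ms: int, sample_rate_hz: int = 16_000,
                     bytes_per_sample: int = 2, channels: int = 1) -> int:
    """Number of bytes in one chunk of raw PCM audio."""
    if chunk_ms <= 0:
        raise ValueError("chunk_ms must be positive")
    samples_per_chunk = sample_rate_hz * chunk_ms // 1000
    return samples_per_chunk * bytes_per_sample * channels


def iter_chunks(pcm: bytes, chunk_ms: int) -> Iterator[bytes]:
    """Yield consecutive fixed-duration chunks; the last one may be shorter."""
    size = chunk_size_bytes(chunk_ms)
    for start in range(0, len(pcm), size):
        yield pcm[start:start + size]


one_second_of_silence = bytes(32_000)  # 16,000 samples x 2 bytes each
chunks = list(iter_chunks(one_second_of_silence, chunk_ms=160))
print(chunk_size_bytes(160), chunk_size_bytes(800), len(chunks))
```

Under these assumptions a 160 ms chunk is 5,120 bytes and an 800 ms chunk is 25,600 bytes, so the smaller setting sends five times as many messages for the same audio.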

Once integration is complete, consider blending AI capabilities with human expertise for optimal results.

Combining AI with Human Review

Pairing AI with human oversight is especially useful for high-stakes content like media releases or clinical documentation. This approach combines the efficiency of AI with the nuanced understanding of human reviewers. AI can handle repetitive tasks and maintain consistency, while humans refine cultural nuances and ensure accuracy.

"The best model is not full automation but a sophisticated collaboration between human linguists and AI." - Bianca Soellner, Marketing Manager, Translated

A Human-in-the-Loop (HITL) system can help maintain quality by having professional linguists review a representative sample of AI translations. Use metrics like Time to Edit (TTE) to measure how long it takes editors to refine AI-generated drafts. This data helps track performance improvements over time. Additionally, establish clear escalation paths so users can flag critical errors for immediate review by human experts.

Not all content requires the same level of scrutiny. For example, customer-facing materials demand more human review compared to internal documents. Companies using this hybrid method have reported cost savings of up to 40% while maintaining quality.

Beyond integration and oversight, training your AI with specialized data can further enhance its performance.

Training AI Models with Business Data

Generic AI models often struggle with specialized terminology, brand names, or industry-specific jargon. Tailoring models to your business can improve accuracy significantly - by 15-20% in healthcare and up to 23% in financial services.

Incorporate human edits into the training process to refine your AI over time. Tools like Translated's Lara use this iterative approach to reduce future editing workloads. Start with Translation Memories, glossaries, and style guides as foundational data to jumpstart accuracy from day one.

Set different Service Level Agreements (SLAs) depending on the language. High-resource languages like English and Spanish typically achieve Word Error Rates of 5-10%, while low-resource languages may range from 16-50%. To avoid errors, configure language detection thresholds - if confidence drops below 0.6, prompt the user for confirmation rather than risking an incorrect translation.
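
The confidence threshold can be expressed as a small routing rule. In this sketch the `Detection` type and the action names are hypothetical; the 0.6 default comes from the guidance above, and the right value for a given deployment should be tuned on real traffic.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    language: str      # detected language code, e.g. "es"
    confidence: float  # detector confidence between 0.0 and 1.0


def route_detection(detection: Detection, threshold: float = 0.6) -> str:
    """Return "confirm" when confidence is below the threshold, else "translate"."""
    if detection.confidence < threshold:
        return "confirm"   # ask the user which language they are speaking
    return "translate"     # confident enough to proceed automatically


print(route_detection(Detection("es", 0.92)))
print(route_detection(Detection("pt", 0.41)))
```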

Automate quality checks by cross-referencing AI output with centralized terminology databases to catch brand inconsistencies before human review. And don’t forget to test your system with real-world audio, covering a range of accents and phone-quality recordings, to ensure it performs well under realistic conditions.
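
A simple automated terminology check might look like the sketch below: for every termbase entry that appears in the source, the approved target term must appear in the translation. The function, the termbase, and the "Acme Cloud" brand are hypothetical, and this exact-match approach would need stemming or inflection handling for many languages.

```python
import re
from typing import Dict, List, Tuple


def _contains_term(text: str, term: str) -> bool:
    """Case-insensitive whole-word (or whole-phrase) match."""
    return re.search(r"\b" + re.escape(term) + r"\b", text, flags=re.IGNORECASE) is not None


def find_terminology_violations(source: str, translation: str,
                                termbase: Dict[str, str]) -> List[Tuple[str, str]]:
    """List (source term, expected target term) pairs missing from the translation."""
    violations = []
    for source_term, target_term in termbase.items():
        if _contains_term(source, source_term) and not _contains_term(translation, target_term):
            violations.append((source_term, target_term))
    return violations


# Hypothetical termbase: the brand name must stay untranslated.
termbase = {"Acme Cloud": "Acme Cloud", "invoice": "factura"}

print(find_terminology_violations(
    "Your Acme Cloud invoice is ready.",
    "Su factura de Acme Nube está lista.",
    termbase,
))
```

Flagged segments can be routed to a human reviewer first, so linguists spend their time on the outputs most likely to contain brand errors.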

Using God of Prompt for AI Speech Translation

When it comes to fine-tuning AI speech translation workflows, God of Prompt provides tools that make the process more efficient and accurate. After implementing your AI speech translation system, maintaining consistent accuracy and aligning translations with your brand's voice becomes essential. This is where God of Prompt's extensive library of over 30,000 AI prompts comes into play. Designed for models like ChatGPT, Claude, and Gemini AI, these pre-built templates and frameworks help streamline translation tasks.

Creating Custom Prompts for Translation Tools

The success of AI speech translation hinges on how you instruct the system. God of Prompt's "Glossary and Prompt Control" tools ensure consistent terminology is applied across real-time workflows. Instead of relying on vague instructions, you can provide precise directives, such as asking the AI to "act as a specialized medical interpreter" rather than simply saying "translate this."

"Glossary and Prompt Control... are designed for teams who need more than just accuracy, but also consistency, clarity, and alignment with your domain-specific terminology." - VideoTranslatorAI Documentation

God of Prompt's framework for prompt engineering breaks effective instructions into four parts: defining the AI's role, specifying the task, setting constraints (like tone or word count), and outlining the desired output format. For instance, in medical translations, you might instruct the AI to "retain all medical terms in English while translating the rest." This avoids errors with technical jargon and ensures accuracy. Additionally, custom glossaries uploaded via these prompts can lock in the correct usage of brand names, industry acronyms, and product-specific terms throughout all translations.
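
The four-part structure (role, task, constraints, output format) is easy to capture in a small template. The class below is a hypothetical sketch for assembling such a prompt as plain text. It is not God of Prompt's own tooling or any vendor's API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TranslationPrompt:
    role: str
    task: str
    constraints: List[str] = field(default_factory=list)
    output_format: str = "Plain text translation only."

    def render(self) -> str:
        """Assemble the four parts into a single instruction block."""
        lines = [f"Role: {self.role}", f"Task: {self.task}"]
        if self.constraints:
            lines.append("Constraints:")
            lines.extend(f"- {constraint}" for constraint in self.constraints)
        lines.append(f"Output format: {self.output_format}")
        return "\n".join(lines)


prompt = TranslationPrompt(
    role="You are a specialized medical interpreter.",
    task="Translate the following consultation from English to Spanish.",
    constraints=[
        "Retain all medical terms in English while translating the rest.",
        "Keep a calm, professional tone.",
    ],
)
print(prompt.render())
```

Keeping the parts as separate fields makes it simple to reuse the same role and constraints across many tasks while changing only the task line.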

These resources are organized into bundles for tasks like SEO, marketing, and business operations, making it easy to find the right templates for any multilingual project. The platform also integrates with Notion, giving teams a centralized, searchable workspace to manage and customize their prompts. With an impressive 4.8/5 rating from 743 reviews, users report saving an average of 20 hours per week by using these pre-designed prompts.

By enabling precise, consistent translations, God of Prompt not only improves accuracy but also enhances productivity, as explored in the next section.

Improving Workflow Efficiency with AI Prompts

God of Prompt goes beyond accuracy by offering tools to streamline the entire translation process. The "Mega-Prompt Generator" is a standout feature, allowing users to create complex, multi-step instructions in one go. This is especially helpful for tasks like adapting marketing campaigns while preserving the brand's voice. For situations involving emotionally charged or unprofessional text, the "Angry Email Translator" GPT transforms inappropriate messages into polite, professional versions without losing the original meaning.

"Prompt Control allows you to influence how speech translations are phrased in real time... prompts help set the desired tone and register." - VideoTranslatorAI Documentation

Other tools, like "Human Writer GPT" and "Article Rewriter GPT", refine translated text to sound more natural and even bypass AI detection, ensuring localized content connects with the intended audience. Combining "Prompt Control" with terminology databases allows users to enforce style and consistency simultaneously - an essential feature for high-stakes scenarios like live broadcasts.

These tools complement earlier integration strategies by ensuring that translations maintain both technical accuracy and brand cohesion. Trusted by over 25,000 business owners and 70,000 entrepreneurs, God of Prompt offers a practical solution for optimizing AI speech translation workflows. The platform also includes a 7-day free trial for its Premium plan, which provides unlimited access to its prompt generator, no-code automation tools via n8n, and weekly updates.

Conclusion

AI speech translation tools have become a critical resource for businesses navigating global markets. Modern systems now support over 120 languages, with accuracy rates typically ranging from 90% to 95%. These high-performance models can process large volumes of speech in just seconds, while native Speech-to-Speech architectures ensure minimal delays in real-time applications.

The financial advantages are hard to ignore. Companies can choose cost-efficient architectures tailored to their specific needs, balancing affordability and quality to enhance both user experience and operational efficiency.

"Speech Translation is the core feature that powers real-time, multilingual conversations across in-person, video call, and broadcast modes." - VideoTranslatorAI

Beyond financial and operational perks, these tools also contribute to accessibility. They enable real-time captioning for individuals who are deaf, hard of hearing, or non-native speakers. Additionally, they enhance SEO visibility by making video content searchable across different languages and markets. Customization options allow businesses to maintain industry-specific terminology and consistent branding across translations.

God of Prompt takes this a step further by offering over 30,000 AI prompts for platforms like ChatGPT, Claude, and Gemini AI. These prompts help businesses fine-tune translations by defining tone, preserving emotional nuances, and ensuring consistent terminology. Their Premium plan includes a 7-day free trial, granting unlimited access to prompt generators and industry-specific automation tools.

As demonstrated throughout this guide, combining advanced AI translation with strategic prompt engineering is a powerful approach for businesses aiming to expand globally. With AI speech translation continuing to advance, this partnership will play a key role in determining which companies successfully scale their international operations.

FAQs

How can I test translation accuracy for real calls and meetings?

To assess how well translations work in actual calls and meetings, start by analyzing transcription quality. Use ground-truth files and metrics like Word Error Rate (WER) to measure accuracy. Pay attention to critical aspects such as intent accuracy, how well the system handles code-switching, and latency during live interactions. These strategies can pinpoint errors and maintain consistent performance across different languages, boosting the system's reliability in practical use.

What security and compliance options are available for sensitive audio data?

To safeguard sensitive audio data, businesses can rely on tools equipped with robust security features such as encryption, HIPAA compliance, and privacy-by-design architectures. For an added layer of protection, offline solutions - like local processing tools - ensure that data remains confined to the device and never gets transmitted elsewhere. These options are particularly suited for industries like healthcare and legal, where stringent data control is non-negotiable. Whether opting for cloud-based compliance or fully offline secure processing, these tools provide the flexibility to meet diverse privacy needs.

How can I keep brand terms and jargon consistent across languages?

Consistency in brand terminology is crucial, especially when your content spans multiple languages. Tools like Glossary and Prompt Control can help streamline this process.

By creating custom glossaries, you can define specific terms and branding elements, ensuring they are translated consistently across all languages. Pairing these glossaries with well-crafted prompts allows AI to follow your brand's tone and style more effectively.

The result? A more cohesive, multilingual experience that aligns with your brand's identity.