Domain-specific AI models are designed for specialized fields like healthcare, legal services, and finance, where general-purpose systems often fail. Evaluating these models requires more than standard metrics like accuracy or BLEU scores, which can miss critical issues. For instance:

This article provides a step-by-step checklist to evaluate AI models effectively in niche applications, ensuring they meet specific industry needs and avoid costly mistakes. Key steps include:

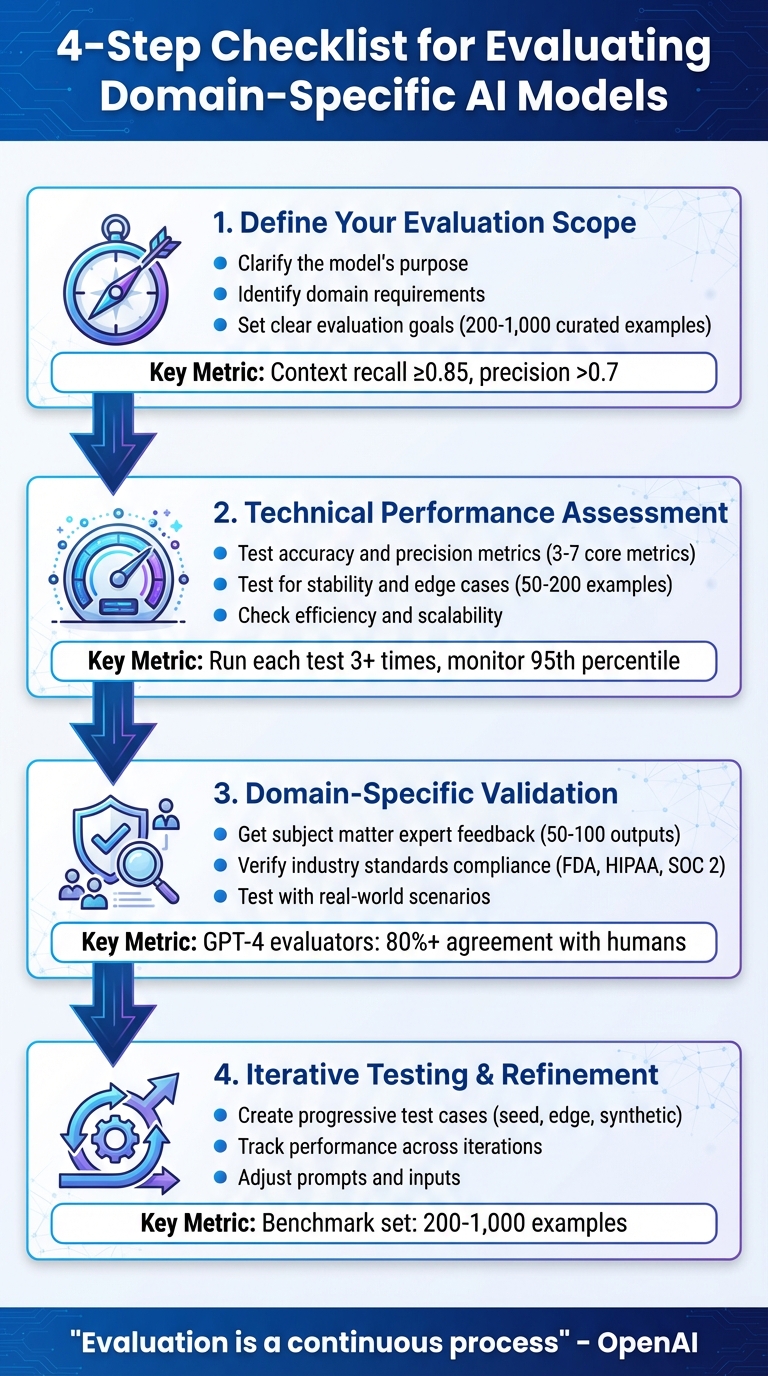

4-Step Checklist for Evaluating Domain-Specific AI Models

Before diving into testing, take the time to define your evaluation scope. This means being clear about what you're testing and why. The evaluation should be tightly connected to the real-world challenges your model is designed to address. As OpenAI aptly states:

"If you cannot define what 'great' means for your use case, you're unlikely to achieve it".

This clarity lays the groundwork for precise and meaningful assessments during both technical and domain-specific evaluations.

Start by pinpointing the specific task your model is expected to perform. Is it routing support tickets? Extracting medication names from patient records? Crafting product descriptions? Each of these tasks demands its own set of evaluation criteria. For example, Sage AI's accounting LLM struggled with terms like "balanced scorecard" because the classification task wasn't clearly defined.

The model's architecture also plays a role. For instance, single-turn models are designed to follow instructions in isolation, while multi-agent systems require evaluation of how well they handle transitions between agents. A model tasked with converting inbound sales emails into scheduled demos will have entirely different success criteria than one summarizing medical transcripts.

Once the model's function is clear, ensure its tasks are aligned with the specific demands of the industry it operates in.

Every industry has its own set of standards and requirements, which must guide your evaluation process. In healthcare, for instance, this includes HIPAA compliance, SOC 2 Type II certification, and audits for potential biases across demographics such as race, ethnicity, age, sex, and insurance type. The table below highlights some key metrics for different domains.

It's important not to rely solely on vendor claims. A case in point: in June 2021, Michigan Medicine tested the Epic Sepsis Model on 27,697 patients. While the vendor claimed an AUC of 0.76–0.83, the actual AUC was only 0.63. The model missed 67% of sepsis cases and generated 88% false positives. This underscores the need to validate performance using your own institution's data and disease prevalence.

Establishing clear, measurable goals is essential. Whether you're comparing models, identifying weaknesses pre-deployment, or validating compliance with regulations, these goals will shape your evaluation strategy.

Begin by compiling a reference set of 200–1,000 human-curated examples that reflect expert judgment in your domain. This serves as your gold standard. Next, define acceptance thresholds - objective criteria that determine pass or fail. For example, a general Q&A system might require a context recall of at least 0.85 and a context precision greater than 0.7.

Involve technical and domain experts early to review outputs and build a taxonomy of errors - such as hallucinations, omissions, or formatting issues - that you'll monitor across iterations. These measurable goals ensure your evaluation addresses the real-world requirements of your domain, bridging the gap between impressive benchmark scores and practical performance.

| Domain/Task | Business Objective | Key Evaluation Metric |

|---|---|---|

| E-commerce | Generate accurate product descriptions | Fact accuracy (≥95%), zero hallucinations |

| Customer Support | Classify and route tickets | Top-1 accuracy (≥90%), false routing (<5%) |

| Healthcare | Extract medication names from records | NER Precision, Recall, and F1 score |

| Sales | Convert inbound emails to demos | Conversion rate, brand alignment |

| General Q&A | Provide precise answers from docs | Context recall (≥0.85), context precision (>0.7) |

Once you've outlined your evaluation scope, it's time to measure the model's technical performance. This involves more than just trusting vendor claims - you need to run your own tests using data from your specific domain. As AWS Prescriptive Guidance highlights:

"Evaluation is the most critical and challenging part of the generative AI development loop".

Your technical tests should align with the goals of your domain-specific evaluation. The checklist below provides a guide to determine if your model is technically ready for deployment.

Choose 3–7 core metrics that match your task. For example, use Accuracy, Precision, Recall, and F1 for classification tasks or named entity recognition (NER). For question-and-answer models, focus on Context Recall and Precision. When working with code generation, Pass@k metrics are critical to ensure the generated code runs and passes unit tests.

Although ROUGE and BLEU are useful for measuring term overlap, prioritize semantic similarity metrics like BERTScore or Cosine Similarity to better capture context and intent. For tasks requiring factual accuracy, such as e-commerce product descriptions, aim for at least 95% accuracy on sampled attributes. In high-stakes scenarios, consider adding Faithfulness or Groundedness scores to identify hallucinations by verifying outputs against the source context.

Automated evaluations should align closely with expert reviews. Instead of subjective 1–5 scoring systems, use binary pass/fail rubrics to ensure results are clear and actionable.

Real-world data is messy and unpredictable. Your model must handle typos, synonyms, random noise, and demographic variations without faltering. Perturbation testing is a great way to systematically alter inputs and assess robustness. For example, you can modify names or genders in prompts to uncover hidden biases or introduce spelling errors to see if the model maintains accuracy.

Run each test case at least three times to average out non-deterministic variability. During these tests, fix random seeds and set the temperature hyperparameter to 0 to minimize variance. For edge-case testing, use a dataset of 50–200 targeted examples, particularly for adversarial or safety-sensitive scenarios.

Additionally, test the model's ability to resist prompt injections and jailbreak attempts using diverse adversarial examples. Document these risks, ranking them by both likelihood and severity. Afterward, shift your focus to evaluating the model's efficiency and scalability under real-world conditions.

Technical performance isn't just about accuracy - it also includes the model's ability to perform efficiently at scale. Monitor factors like latency, throughput, and processing speed while keeping an eye on token usage. Watch for HTTP 429 errors (too many requests) and use the 95th percentile as a benchmark for performance. Compare new model versions in shadow mode to identify performance drift and inefficiencies.

Incorporate these benchmarks into your CI/CD pipelines to run nightly tests. This helps catch performance issues early. As Microsoft's Experimentation Platform team emphasizes:

"Measuring LLM performance on user traffic in real product scenarios is essential to evaluate these human-like abilities and guarantee a safe and valuable experience to the end user".

Without thorough benchmarking, issues like cost overruns, latency problems, and compliance risks may only surface after deployment.

When evaluating AI models, technical metrics like algorithmic performance are just one piece of the puzzle. To ensure your model is genuinely suited for its intended industry, domain-specific validation is essential. This step involves human expertise to verify that outputs align with industry knowledge, standards, and practical applications. It helps catch errors that automated metrics often miss - like incorrect terminology, regulatory violations, or outputs that pass tests but fail in real-world scenarios. Here’s how to ensure your AI model meets the expectations of your specific domain.

Involving domain experts is critical to fine-tuning your model. Start by assembling a team of experts to review outputs and build a gold-standard reference set. This reference set acts as a guiding benchmark, mapping inputs to ideal, expert-level outputs.

Ask these experts to review an initial batch of 50–100 outputs and develop an error taxonomy. This categorizes issues like hallucinations, terminology mistakes, or irrelevant responses [12, 17]. For tasks where there’s no single "correct" answer - such as generative AI tasks - experts should define key facts, entities, or concepts that must be included in valid responses. For example, in medical NLP tasks, you might need a dataset of 20 to over 100 records resembling real clinical data to test pretrained models effectively.

Use detailed rubrics with specific examples to assess quality, avoiding vague 1–5 scoring systems. Advanced language models like GPT-4, when used as evaluators, can achieve over 80% agreement with human preferences, matching human evaluator consistency. Even if automated graders are used, domain experts should regularly audit their accuracy by reviewing system logs. Document all scenarios, edge cases, and feedback to refine your model over time.

In sensitive fields like healthcare, subgroup analyses are crucial. Validate performance across demographics - such as race, age, and sex - to ensure fairness and avoid biased outputs.

Meeting industry standards isn't optional - it’s a necessity. Don’t rely solely on vendor promises; independently confirm that your model adheres to all legal, ethical, and security requirements. In healthcare, this means checking FDA clearance for devices: Class II devices require 510(k) clearance, while Class III devices need Pre-Market Approval (PMA).

For privacy compliance, ensure a Business Associate Agreement (BAA) is in place when handling Protected Health Information (PHI) to meet HIPAA standards. Additionally, confirm that your vendor undergoes annual third-party audits to maintain certifications like SOC 2 Type II or HITRUST. As the Physician AI Handbook states:

"Privacy promises must be legally binding and technically enforced. Data minimization is mandatory, not optional".

Adopt strict data minimization protocols, ensuring the AI accesses only the data necessary for its tasks. Implement tools for toxicity detection and guardrails to prevent the generation of harmful or biased content. Include solid contractual protections, such as performance guarantees, liability indemnification for AI errors, and clear data ownership and deletion clauses. Secure rights to audit and publish independent validation results as part of your agreements.

Set up quarterly reviews to monitor key metrics like model drift, false positive rates, and clinical outcome impacts. Keep in mind that models trained at a single institution often perform about 10% worse when deployed at external sites.

To truly evaluate your model, test it under conditions that mimic its intended use. For healthcare applications, this might include tasks like text classification (e.g., assessing pain scales), named entity recognition (e.g., identifying medication names in patient records), and text generation (e.g., summarizing medical data). Use datasets that closely resemble actual clinical data, and ensure fine-tuning involves at least 100 high-quality records labeled by subject matter experts.

External validation at independent sites is equally important to prove your model’s reliability in real-world settings. For instance, the Epic sepsis model, despite claims of high accuracy, demonstrated only 33% sensitivity during external validation, missing 67% of sepsis cases.

Models scoring below 4 out of 10 should be rejected outright, while those scoring between 8 and 10 may be considered for pilot deployment.

Iterative testing takes your model’s performance to the next level by identifying and addressing weaknesses through repeated evaluations. This process is all about continuous improvement, ensuring your model delivers consistent results in practical scenarios. Below, we’ll dive into the steps to make this cycle as effective as possible.

Start by building a benchmark set of 200–1,000 carefully selected examples. As OpenAI aptly states:

"If you cannot define what 'great' means for your use case, you're unlikely to achieve it".

This benchmark set serves as your foundation for every iteration.

Organize your test cases into three tiers:

Break down complex workflows into smaller, testable units - like separating document retrieval from question-answering - so you can pinpoint exactly where issues arise. By reviewing 50–100 early outputs, you can create an error taxonomy to categorize problems such as hallucinations, formatting issues, or omissions. These insights can then be turned into reproducible test cases.

For even more challenging scenarios, advanced models like GPT-4 can help generate diverse adversarial examples that are difficult to create manually. Once your test cases are in place, the focus shifts to tracking improvements over time.

Version control is critical - keep track of prompts, datasets, checkpoints, and code to ensure your results are reproducible. Use side-by-side comparison dashboards to monitor metrics like quality scores, latency, and cost per 1,000 tokens across different versions of your model. Instead of relying on a single metric, categorize failures (e.g., hallucinations, formatting errors, omissions) to confirm that your adjustments are addressing specific issues.

Run at least three full passes of your test set and average the results to account for variability in model performance. Advanced models like GPT-4, which align with human preferences over 80% of the time, can serve as scalable judges for tracking progress. Automate these evaluations by integrating your test suite into CI/CD pipelines, ensuring benchmarks run automatically with every build or prompt update.

Finally, establish a feedback loop by regularly sampling production logs and edge cases. Have experts review these cases and convert them into new test scenarios for the next iteration.

When errors occur, it’s essential to dig into the root cause. Determine whether the problem lies in the prompt design, training data, or the model’s inherent limitations. For multi-step workflows, evaluate each individual step rather than just the final output to identify where the logic breaks down.

Use evaluation scores and chain-of-thought reasoning to fine-tune prompts. Test your model’s sensitivity to slight changes in prompt wording by experimenting with paraphrased inputs. Additionally, review examples from high, middle, and low-performing outputs to uncover patterns in successes and failures.

If prompt tweaks don’t resolve issues like factual inconsistencies, consider adjusting model parameters such as temperature or top-k settings alongside prompt modifications. As OpenAI emphasizes:

"Evaluation is a continuous process".

After rounds of testing and refining, it’s crucial to document your evaluation results thoroughly. Why? Because proper documentation turns your evaluation into a repeatable and accountable process. Without it, reproducing results, explaining model behavior to stakeholders, or pinpointing the cause of production issues becomes nearly impossible.

Start by logging every element of your evaluation pipeline. This includes the model version, checkpoint, and hyperparameters (like temperature and top‑k). Make sure to record dataset sources, dates, annotator notes, and version-control every split using standardized formats like JSON. Add metadata for each data point, such as descriptive tags, context, and expected fields.

Don’t stop there - document hardware specs, software dependencies, and containerization details (e.g., Docker or Conda) to avoid issues caused by environmental drift. Conor Bronsdon, Head of Developer Awareness at Galileo, offers this advice:

"Version control every prompt, dataset split, and model checkpoint in Git. Wrap your entire pipeline in Docker or Conda images so any teammate can reproduce results with a single command".

To improve your model over time, develop an error taxonomy that categorizes issues like hallucinations, omissions, toxic outputs, or poor formatting. This helps prioritize fixes based on their risk and frequency. You should also create a risk register that links potential harms - like misinformation or privacy breaches - to their likelihood and severity scores. Store every raw and normalized request-response pair in version-controlled storage, and log random seeds to manage the non-deterministic nature of LLMs.

This level of detail sets the stage for a reproducible evaluation process.

To ensure reproducibility, start by clearly defining your problem statement and success thresholds before testing. This prevents shifting goals and keeps your evaluation focused. Since large language models (LLMs) produce probabilistic outputs, run each test case at least three times and record aggregate metrics. This helps you measure stability and spot outliers.

Organize your documentation so that every claim ties back to verifiable data. Link textual conclusions in reports directly to the relevant results using file paths or result IDs. Geir Kjetil Sandve from the University of Oslo highlights the long-term benefits of this approach:

"Good habits of reproducibility may actually turn out to be a time-saver in the longer run".

OpenAI also stresses the importance of continuous logging: "Log as you develop so you can mine your logs for good eval cases". This creates a feedback loop where production logs and edge cases are routinely reviewed by experts and used to develop new test scenarios. Filip Lapiński from Synthmetric suggests:

"Store benchmarks with timestamps and model versions for trend analysis. Instrument both model and application layers: latency, token counts, and downstream user metrics".

Evaluating domain-specific AI models isn’t a one-and-done task - it’s an ongoing process that demands structure, collaboration, and careful planning. As OpenAI aptly states:

"Evaluation is a journey, not a destination: Evaluation is a continuous process".

Skipping a structured approach can lead to subjective, "vibe-based" decisions, which often result in technical debt, poor performance in production, and challenging model migrations. A well-defined checklist, on the other hand, turns high-level goals into measurable outcomes tied directly to user needs and business risks.

By using representative datasets, selecting the right metrics, and addressing challenges like prompt sensitivity and hallucinations, you create a dependable way to measure application quality. AWS Prescriptive Guidance highlights this idea:

"The evaluation system itself should be treated as a product, a complex software component that needs to be designed, versioned, and validated to make sure that it provides a reliable signal of application quality".

Top-performing teams align technical metrics with specific business goals set during the evaluation planning stage. They avoid drowning in excessive data by focusing on a few key custom metrics and adopt an eval-driven development approach, testing early and frequently throughout the lifecycle.

Reliable AI systems tailored to your unique context give you a competitive edge. This is where precise benchmarking plays a critical role - generic benchmarks often fail to reflect the nuanced demands of specific industries or regulatory requirements. A checklist bridges the gap by turning abstract promises into tangible, measurable results.

Use this checklist as a living framework to ensure your AI systems deliver consistent, reproducible performance. A structured evaluation process today not only prevents costly missteps but also ensures your AI solutions meet the specific needs of your domain.

To make sure your AI model aligns with industry expectations, begin by setting clear benchmarks tailored to your field. These benchmarks should address key aspects like accuracy, dependability, and fairness. It's also essential to use domain-specific data for training and testing, especially in sensitive areas like healthcare or finance, to ensure your model performs well in real-world applications.

Keep a close eye on your model’s performance by implementing automated benchmarks, conducting thorough error analysis, and seeking input from domain experts. Using these insights, make iterative updates to refine the model. This ongoing process helps your AI stay accurate, fair, and in step with industry regulations and best practices over time.

To assess how well an AI model performs in practical situations, begin by setting clear goals that align with what users actually need and any potential risks. Select appropriate metrics to evaluate the model, such as accuracy, precision, or recall - choosing the ones that best fit the specific context. Combine automated benchmarks with human reviews using realistic test datasets to ensure the model behaves as intended.

Dive into qualitative error analysis to pinpoint weaknesses and areas that need improvement. Keep a close eye on the model's performance over time to catch issues like model drift or unexpected errors. By focusing testing efforts on real-world applications and addressing problems that have the most significant impact on users, you can ensure the model remains dependable and effective.

Testing domain-specific AI models repeatedly - known as iterative testing - is crucial for fine-tuning the model to address the specific demands of its intended industry or application. Instead of relying on generic methods, this approach zeroes in on practical tasks and tricky edge cases, helping to identify flaws, biases, or inaccuracies that might otherwise slip through the cracks.

By revisiting and refining the model through iterative testing, teams can set clear benchmarks for success, track performance over time, and adjust to changing needs. This ongoing process ensures the AI stays dependable, relevant, and aligned with what users expect, resulting in a solution that people can trust and rely on.