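Domain-specific GPTs can outperform general-purpose models like GPT-4 in specialized industries by focusing on niche knowledge, terminology, and tasks. These models excel in fields like finance, energy, and technical domains, delivering higher accuracy and efficiency. For example, the 8-billion-parameter finance model A8-FinDSM scored 80.63% on FinQA against 75.85% for the 120-billion-parameter GPT-OSS-120B, and the A8-Energy model reached 96.9% accuracy on specialized energy topics against 71.3% for GPT-OSS-20B.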

These results highlight the strength of specialized training in handling complex, industry-specific challenges. Domain-specific models are also more cost-effective and reduce hallucination rates, making them ideal for regulated fields like healthcare and finance. Organizations can further enhance performance by fine-tuning models with curated data and rigorous benchmarking.

Benchmarks act as a crucial yardstick for assessing AI performance, shedding light on how well models grasp complex topics and handle calculations. They’re indispensable for developers trying to pinpoint strengths and weaknesses, especially when comparing general-purpose AI systems to those tailored for specific industries. Let’s dive into some key benchmark categories.

MMLU (Massive Multitask Language Understanding) evaluates AI models across 57 diverse subjects, including STEM, humanities, and social sciences. Think of it as a broad intelligence test, designed to measure how well a model can handle a wide range of topics without being a specialist in any one area.

HellaSwag, on the other hand, zeroes in on commonsense reasoning and linguistic skills. It uses sentence completion tasks to see if models can predict logical continuations of everyday scenarios. One critical finding? How you prompt the model makes a huge difference - accuracy can swing wildly from 30% to 80%, even on the same tasks.

The financial sector has its own unique challenges, and benchmarks such as FinQA and TFNS have been developed to test AI models in this space.

In highly technical fields, benchmarks spotlight tasks where general-purpose AI models often struggle, such as Verilog code generation and Text-to-SQL.

"General-purpose benchmarks can fall short in capturing [industrial] nuances, leading to inaccurate data relationships and fragmented insights." - Cognite Atlas AI Report

LiveBench tackles a big issue in AI testing: contamination, where models might already "know" the test data. To combat this, LiveBench updates its questions every six months, ensuring the tests remain fresh and genuinely challenging. This approach reflects a broader push across industries to evaluate models based on real capabilities, not rote memorization, bridging the gap from general reasoning to specialized tasks.
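The same idea can be applied to an in-house test set by keeping only questions released after a model's training cutoff. The snippet below is a minimal sketch of that filter, not LiveBench's own tooling; the record fields (`id`, `released`) and the cutoff date are assumptions chosen for illustration.

```python
from datetime import date

# Hypothetical question records; the field names are illustrative only.
questions = [
    {"id": "q1", "released": date(2024, 1, 15)},
    {"id": "q2", "released": date(2025, 3, 1)},
    {"id": "q3", "released": date(2025, 9, 20)},
]


def fresh_questions(items, training_cutoff):
    """Keep only questions released strictly after the model's training cutoff."""
    return [q for q in items if q["released"] > training_cutoff]


# Assumed training cutoff for the model under test.
cutoff = date(2025, 1, 1)
fresh_ids = [q["id"] for q in fresh_questions(questions, cutoff)]
```

A date filter like this only reduces the risk of contamination; it cannot prove that a question never appeared in training data.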

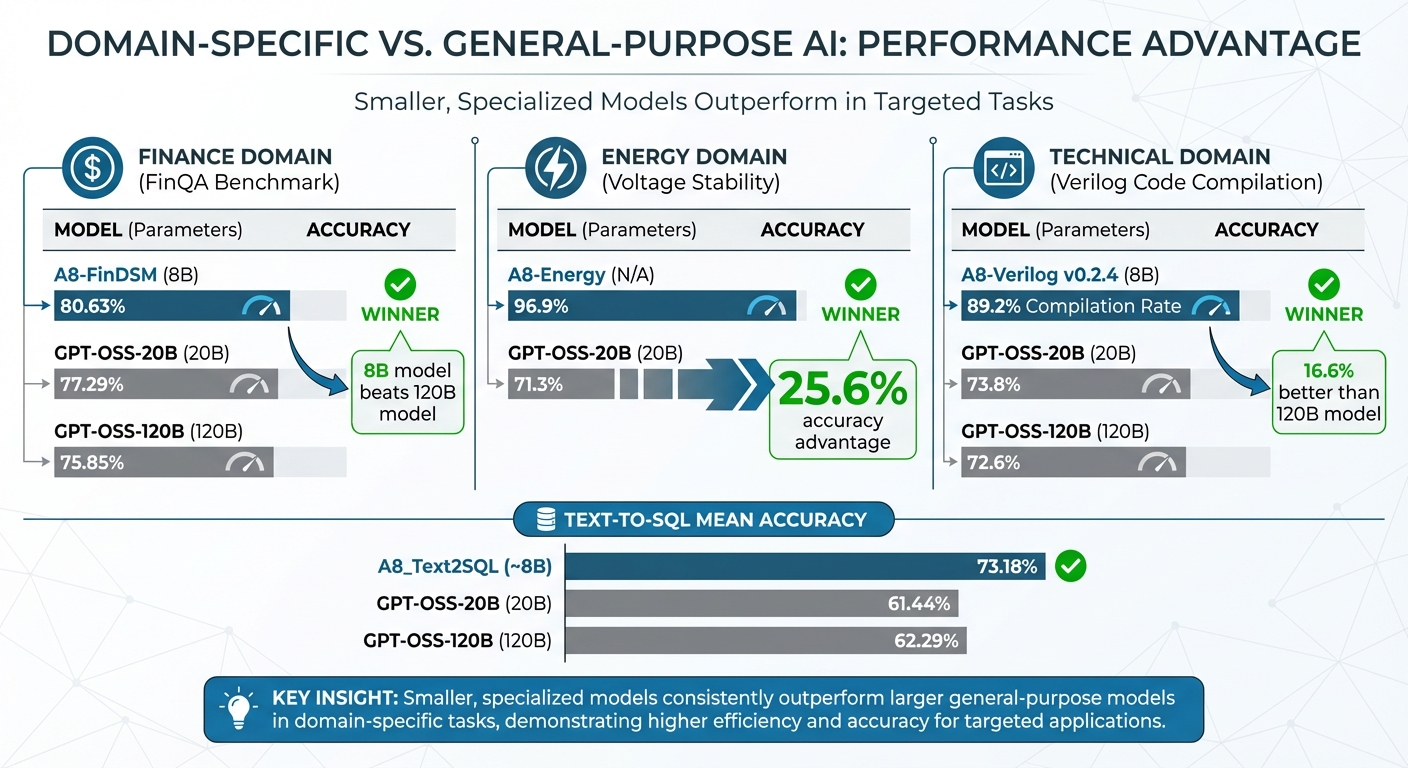

Domain-Specific vs General AI Models: Performance Comparison Across Industries

When it comes to specific fields, domain-focused GPTs consistently deliver better results compared to general-purpose models.

In August 2025, Articul8's benchmarks revealed that A8-FinDSM, an 8-billion-parameter model, achieved an impressive 80.63% pass@1 score on FinQA. This surpassed the much larger GPT-OSS-120B model, which scored 75.85% despite having 120 billion parameters.

The trend continued with the TFNS test, where A8-FinDSM scored 73.47%, edging out GPT-OSS-120B’s 72.53%.

| Model | Parameter Size | FinQA pass@1 (%) | TFNS pass@1 (%) |
|---|---|---|---|
| A8-FinDSM | 8B | 80.63 | 73.47 |
| GPT-OSS-20B | 20B | 77.29 | 68.84 |
| GPT-OSS-120B | 120B | 75.85 | 72.53 |

These results highlight how specialized training can outperform even significantly larger models.
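The article does not say how the pass@1 figures were computed. One widely used definition is the unbiased pass@k estimator from code-generation evaluation, which reduces to the fraction of correct samples when k = 1. The sketch below assumes that definition; the function names and the sample counts are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples were drawn and c were correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) / comb(n, k) is the chance that a random size-k subset
    # of the n samples contains no correct answer.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over problems; each item is (n_samples, n_correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical run: four problems, one sample each, three answered correctly.
score = mean_pass_at_k([(1, 1), (1, 0), (1, 1), (1, 1)], k=1)
```

With a single sample per problem, pass@1 is simply the share of problems answered correctly on the first attempt.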

"While general models like those from OpenAI and Meta democratize access, domain-specific models unlock transformative value in specialized fields." - Articul8

This same principle applies to technical energy applications, where precision is paramount.

The energy sector demands precision that general models often struggle to achieve. The A8-Energy model demonstrated 96.9% accuracy on specialized topics like voltage stability, far outpacing GPT-OSS-20B, which managed only 71.3%. This 25.6 percentage point gap underscores the reliability of domain-specific models in critical applications.

The disparity arises from what researchers call the "last mile problem." While general models handle broad concepts well, they falter when intricate domain knowledge and precise reasoning are required. In fields like energy, where errors in interpreting specifications can lead to severe consequences, this gap becomes especially critical.

This performance advantage extends to other specialized tasks, including hardware design and database management.

In Verilog code generation tasks, A8-Verilog v0.2.4, an 8-billion-parameter model, achieved an 89.2% compilation rate - a significant lead over GPT-OSS-120B, which only managed 72.6%, despite being 15 times larger.

| Model | Parameter Size | Compilation Rate (Avg) | Test Success Rate (Avg) |
|---|---|---|---|
| A8-Verilog v0.2.4 | 8B | 0.892 | 0.54 |
| A8-Verilog v0.2.4 70B | 70B | 0.892 | 0.608 |
| GPT-OSS-20B | 20B | 0.738 | 0.56 |
| GPT-OSS-120B | 120B | 0.726 | 0.55 |

For Text-to-SQL tasks, the domain-specific model also came out ahead, with a mean accuracy of about 73%, while the general OSS models lagged behind at roughly 61% to 62%.

| Model / Variant | Parameter Size | Mean Accuracy (%) |
|---|---|---|
| A8_Text2SQL | ~8B | 73.18 |
| GPT-OSS-20B | 20B | 61.44 |
| GPT-OSS-120B | 120B | 62.29 |
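The source does not state how Text-to-SQL accuracy was scored. A common approach is execution accuracy: run the predicted query and a reference query against the same database and compare the result sets. The following is a minimal sketch of that approach using Python's built-in `sqlite3`; the `plants` table and the query pairs are hypothetical.

```python
import sqlite3


def run_query(conn, sql):
    """Return the rows in a canonical order, or None if the query fails."""
    try:
        rows = conn.execute(sql).fetchall()
    except sqlite3.Error:
        return None
    return sorted(rows, key=repr)


def execution_accuracy(conn, pairs):
    """pairs is a list of (predicted_sql, gold_sql); returns percent matched."""
    hits = 0
    for predicted, gold in pairs:
        expected = run_query(conn, gold)
        if expected is not None and run_query(conn, predicted) == expected:
            hits += 1
    return 100.0 * hits / len(pairs)


# Hypothetical toy database and model outputs.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE plants (name TEXT, capacity_mw REAL);
    INSERT INTO plants VALUES ('A', 120.0), ('B', 80.0), ('C', 200.0);
    """
)
pairs = [
    ("SELECT name FROM plants WHERE capacity_mw > 100",
     "SELECT name FROM plants WHERE capacity_mw > 100.0"),
    ("SELECT COUNT(*) FROM plants", "SELECT COUNT(name) FROM plants"),
    ("SELECT nme FROM plants", "SELECT name FROM plants"),  # bad column: a miss
]
score = execution_accuracy(conn, pairs)
```

Comparing results rather than query strings credits queries that are written differently but return the same rows. It ignores row order, so it would need adjusting for tasks where `ORDER BY` matters.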

From finance to energy, and hardware design to SQL tasks, these results demonstrate that domain-specific models don’t just offer a slight edge - they deliver a decisive performance advantage in specialized, real-world scenarios.

When comparing domain-specific GPTs to general models, benchmarks highlight a clear distinction: domain-specific models are laser-focused on a particular area, while general models aim to cover a wide array of topics. Anshu, founder of ThirdAI, explains it well:

"A GPT model is a function that optimizes internal representation for the average loss over the union of all the information. At the same time, [a domain-specific model] is a function that optimizes the representation for average loss over information that are only related to [the domain]".

This targeted approach allows for better semantic alignment. For example, a GPT trained for the food industry will associate "apple" more closely with "apple pie" than with the tech company - a subtlety that general models often miss. This precision not only improves performance on specific tasks but also brings down costs significantly.

Consider the cost difference: GPT-4 is 60–100 times more expensive for specialized biomedical tasks compared to GPT-3.5. Many organizations are now adopting a hybrid deployment strategy, using smaller, domain-specific models for routine tasks while reserving premium models for more complex reasoning. This approach typically reduces costs by 40–60%. For high-volume tasks, an 8-billion-parameter domain-specific model can outperform a 120-billion-parameter general model.
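The arithmetic behind a hybrid deployment is easy to sanity-check. The sketch below estimates the saving from routing routine requests to a smaller model; the function names, request volume, routing share, and per-request prices are all made-up assumptions, not figures from any vendor.

```python
def blended_cost(requests, routine_share, small_cost, premium_cost):
    """Total cost when routine requests go to the small model, the rest to premium."""
    routine = requests * routine_share
    return routine * small_cost + (requests - routine) * premium_cost


def savings_percent(requests, routine_share, small_cost, premium_cost):
    """Savings relative to sending every request to the premium model."""
    all_premium = requests * premium_cost
    hybrid = blended_cost(requests, routine_share, small_cost, premium_cost)
    return 100.0 * (all_premium - hybrid) / all_premium


# Hypothetical inputs: 10,000 requests, 60% routine, per-request prices in dollars.
saved = savings_percent(10_000, 0.60, small_cost=0.002, premium_cost=0.02)
```

With these illustrative inputs the saving comes to about 54%, which falls inside the 40–60% range quoted above. Real results depend on how much traffic is genuinely routine and on actual pricing.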

Another major advantage is the reduction in hallucination rates. Domain-specific models, trained on curated, high-quality data, are less prone to generating inaccurate information. General models, on the other hand, have shown hallucination rates as high as 32% in tasks like multi-label document classification for niche domains. This shift toward specialization is well summarized by Pravin Khadakkar, PhD:
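The source does not define how a hallucination is counted in multi-label classification. One simple working definition, assumed here, is a document for which the model returns at least one label that does not exist in the allowed taxonomy. The label names below are hypothetical.

```python
def hallucination_rate(predictions: list[list[str]], allowed: set[str]) -> float:
    """Share of documents with at least one predicted label outside the taxonomy."""
    if not predictions:
        return 0.0
    flagged = sum(
        1 for labels in predictions
        if any(label not in allowed for label in labels)
    )
    return flagged / len(predictions)


# Hypothetical taxonomy and model outputs for four documents.
allowed_labels = {"invoice", "contract", "nda"}
model_outputs = [
    ["invoice"],
    ["contract", "lease"],  # "lease" is not in the taxonomy
    ["nda"],
    ["memo"],               # "memo" is not in the taxonomy
]
rate = hallucination_rate(model_outputs, allowed_labels)
```

This check only catches invented labels. A valid label applied to the wrong document is an ordinary classification error and needs a separate accuracy metric.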

"The evolution of language AI has shifted from a 'Jack-of-All-Trades' approach to a 'Master of One' strategy, emphasising specialised expertise over general versatility".

For industries with strict regulations, such as healthcare and finance, domain-specific models are invaluable. They offer tailored features like encryption, data isolation, and zero-retention APIs. These capabilities are crucial for ensuring data privacy and meeting regulatory requirements, making these models indispensable in such fields.

Creating a domain-specific GPT can unlock major performance gains, but it requires careful planning and rigorous testing. Here's how to approach it effectively.

Start by setting clear goals and measurable success metrics. Define exactly what tasks your model needs to handle, whether it’s technical troubleshooting, retrieving internal knowledge, or managing customer support. Then, establish specific performance metrics like accuracy or response time to evaluate success. Doing this upfront avoids the common issue of shifting goals after testing begins.

The next step? Data preparation and choosing the right implementation strategy. Curate domain-specific data tailored to your industry, then decide how to adapt the model, for example by fine-tuning.

Even a small dataset of 50 to 100 examples can be enough for fine-tuning to deliver impressive results. For instance, a fine-tuned GPT-4o-mini model hit 91.5% accuracy, matching the performance of the larger GPT-4o model at less than 2% of the cost.

Once you’ve chosen your strategy, benchmarking becomes critical. Continuous evaluation during development ensures reliability. Experts recommend the 70/30 data rule: use 70% domain-specific data (including industry-specific terms, edge cases, and internal formats) and 30% public datasets like MMLU or HellaSwag. This balance ensures your model is both practical for your field and comparable to broader industry standards. Modern benchmarking tools use adaptive rubrics, which create unique pass/fail tests for each prompt. You can even deploy an LLM-as-a-Judge system, where high-capacity models grade outputs with over 80% agreement with human evaluations.
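Both ideas in the paragraph above can be prototyped in a few lines. The sketch below builds a 70/30 evaluation mix and measures how often a judge model's pass/fail verdicts agree with human labels. The helper names, the item format, and the sample verdicts are assumptions for illustration; no real judge model is called.

```python
import random


def build_eval_mix(domain_items, public_items, total, domain_share=0.7, seed=0):
    """Sample an evaluation set with the requested share of domain-specific items."""
    rng = random.Random(seed)
    n_domain = round(total * domain_share)
    n_public = total - n_domain
    if n_domain > len(domain_items) or n_public > len(public_items):
        raise ValueError("not enough items to build the requested mix")
    mix = rng.sample(domain_items, n_domain) + rng.sample(public_items, n_public)
    rng.shuffle(mix)
    return mix


def agreement_rate(judge_verdicts, human_verdicts):
    """Fraction of items where the judge model and the human grader agree."""
    if len(judge_verdicts) != len(human_verdicts) or not judge_verdicts:
        raise ValueError("verdict lists must be non-empty and the same length")
    matches = sum(j == h for j, h in zip(judge_verdicts, human_verdicts))
    return matches / len(judge_verdicts)


# Hypothetical pools: items are (source, index) tuples.
domain_pool = [("domain", i) for i in range(50)]
public_pool = [("public", i) for i in range(50)]
eval_set = build_eval_mix(domain_pool, public_pool, total=20)
domain_count = sum(1 for source, _ in eval_set if source == "domain")

# Hypothetical pass/fail verdicts on five outputs.
agreement = agreement_rate(
    [True, True, False, True, False],
    [True, False, False, True, False],
)
```

Fixing the random seed keeps the evaluation set stable between runs, so score changes reflect the model rather than the sample.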

Successful teams follow OpenAI’s eval-driven development approach:

"Evaluate early and often. Write scoped tests at every stage".

This includes testing for issues like hallucinations, accuracy, and edge cases before deployment. Conor Bronsdon, Head of Developer Awareness at Galileo, emphasizes the importance of this:

"You can't rely on vendor marketing to predict how models will perform on your specific use cases. Without rigorous benchmarking, cost overruns, latency issues, and compliance violations stay hidden until production".

To avoid these challenges, set SMART criteria from the start. Anchor your tests with both a baseline from your current production model and an aspirational target model. Use adversarial prompts and extended context scenarios to push your model to its limits. These practices ensure your custom GPT meets internal needs while holding its ground against industry benchmarks.
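Anchoring a test between a baseline and a target can be expressed as a small gate that classifies each score. This is a sketch under assumed names and thresholds; the `Gate` class and the accuracy values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Gate:
    """Compares a metric against the production baseline and an aspirational target."""
    metric: str
    baseline: float  # score of the current production model
    target: float    # score of the aspirational target model

    def verdict(self, score: float) -> str:
        if score < self.baseline:
            return "regression"
        if score < self.target:
            return "improved"
        return "target met"


# Hypothetical thresholds for an accuracy metric.
accuracy_gate = Gate(metric="accuracy", baseline=0.82, target=0.90)
verdicts = [accuracy_gate.verdict(s) for s in (0.79, 0.85, 0.91)]
```

Running the same gate over adversarial and long-context test subsets makes it clear where a candidate model still falls below the production baseline.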

For additional support, platforms like God of Prompt offer over 30,000 AI prompts and toolkits to simplify prompt engineering. These resources include categorized prompt bundles, guides, and tools for generating custom prompts, helping teams focus on benchmarking and refining their models instead of starting from scratch. With structured frameworks and ready-to-use templates, you can accelerate the development of domain-specific applications for marketing, SEO, productivity, or technical workflows.

Domain-specific GPTs have shown they can consistently outperform general-purpose models in specialized fields. Take A8-Energy, for instance - it achieved an impressive 96.9% accuracy compared to just 71.3% for GPT-OSS-20B. Similarly, in the financial sector, an 8-billion-parameter domain model scored 80.63% on FinQA, outpacing a much larger 120-billion-parameter general model, which managed 75.85%. These kinds of results highlight a major leap forward in how AI can be tailored for specific industries.

But it’s not just about accuracy. These domain-specific models are also cutting down on the time experts spend on repetitive tasks. A great example comes from Microsoft Research, where advanced prompting strategies like Medprompt reduced error rates on medical benchmarks by 27% - all without the need for costly fine-tuning. This shows that with the right frameworks and evaluation methods, even existing models can deliver specialist-level performance.

As Harsha Nori and colleagues put it:

"Prompting innovation can unlock deeper specialist capabilities and show that GPT-4 easily tops prior leading results for medical benchmarks".

The secret lies in aligning your evaluation criteria with real-world tasks. Whether it’s using FinQA for financial analysis or Text-to-SQL for database queries, tailoring your approach to the specific demands of your industry is key.

To make this process easier, practical tools are already available. For example, God of Prompt offers a library of over 30,000 categorized AI prompts and toolkits designed for platforms like ChatGPT, Claude, Midjourney, and Gemini AI. These resources include industry-specific prompt bundles, tools for creating custom prompts, and step-by-step guides to help teams implement specialized frameworks without starting from scratch. Whether you’re refining workflows in marketing, SEO, finance, or technical fields, these structured libraries can speed up development and ensure your models meet both internal goals and industry standards.

Domain-specific GPTs are particularly effective at handling specialized tasks because they are fine-tuned using data tailored to specific industries. This training helps them grasp technical jargon, formatting styles, and the unique context of their respective fields. The result? Outputs that are more precise, relevant, and dependable for niche applications.

These models shine in fields like healthcare, finance, and cybersecurity, where accuracy and compliance with industry standards are non-negotiable. Their knack for reducing errors and adhering to regulations makes them a go-to solution for professionals tackling complex, specialized problems.

MMLU (Massive Multitask Language Understanding) and HellaSwag are benchmarks designed to evaluate how well AI models perform in different areas. They each focus on distinct skills, making them valuable tools for understanding a model's capabilities.

MMLU dives into a model's knowledge and reasoning across 57 subjects, covering topics like math, history, and law. Its emphasis on academic and professional domains makes it perfect for testing broad, factual understanding and the ability to handle multiple tasks.

On the flip side, HellaSwag is all about common-sense reasoning. It challenges models to predict the most logical continuation of real-world scenarios, focusing on practical understanding and situational reasoning.

In essence, MMLU evaluates subject-specific knowledge and reasoning, while HellaSwag homes in on how well a model grasps and applies everyday context.

Domain-specific GPT models stand out for their efficiency and affordability. Unlike broader, general-purpose models, these are tailored for specific tasks and datasets, which means they require less fine-tuning and fewer resources. This focused design translates to lower computational demands and reduced operational costs.

When applied to specialized fields like healthcare or manufacturing, these models excel by meeting precise performance needs. They deliver reliable results without the heavy infrastructure typically associated with general-purpose AI, making them a practical and cost-efficient choice for businesses with specialized requirements.