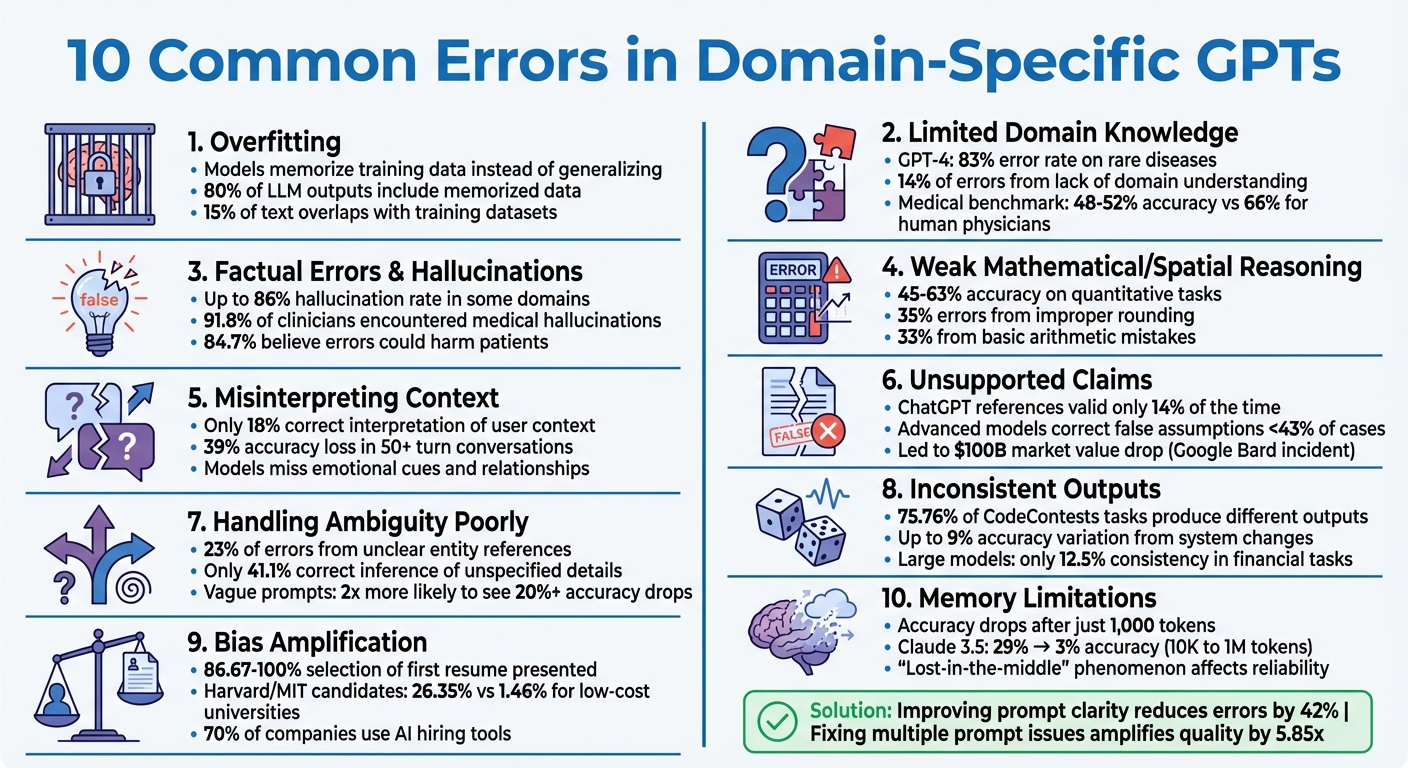

Common Errors in Domain-Specific GPTs

Domain-specific GPTs are great at tackling niche tasks, but they come with notable challenges.

Here are the 10 most common issues you’ll encounter when using these models:

- Overfitting: Models memorize training data instead of generalizing, leading to rigid or irrelevant responses.

- Limited Domain Knowledge: Struggles with complex concepts or rare scenarios, often relying on statistical patterns rather than logic.

- Factual Errors & Hallucinations: Models fabricate information, creating plausible but false outputs.

- Weak Mathematical/Spatial Reasoning: Errors in calculations and spatial tasks, especially in fields like engineering or architecture.

- Misinterpreting Context: Fails to understand relationships, subtle cues, or maintain accuracy in long conversations.

- Unsupported Claims: Generates false citations or vague statements without evidence.

- Handling Ambiguity Poorly: Overconfidently guesses answers to unclear queries instead of seeking clarification.

- Inconsistent Outputs: Produces different answers for the same question, causing reliability concerns.

- Bias Amplification: Magnifies biases in training data, affecting fairness in tasks like hiring or content moderation.

- Memory Limitations: Loses track of details in long conversations, leading to inaccuracies and inefficiencies.

Why Does This Matter?

These errors can impact reliability, safety, and trust - especially in high-stakes fields like healthcare, law, or engineering. While improvements like better prompts and external tools (e.g., Retrieval-Augmented Generation) can help, these flaws highlight the need for careful oversight when deploying domain-specific GPTs.

10 Common Errors in Domain-Specific GPTs and Their Impact

My 7 Worst Mistakes Building Custom GPTs (5 Months to AI Assistant Pro)

sbb-itb-58f115e

1. Overfitting to Training Data

Overfitting occurs when a domain-specific GPT model focuses too much on memorizing patterns from its training data instead of understanding broader principles. This is a common hurdle when developing domain-specific GPTs that aim to outperform general models. Essentially, the model treats its training data like a script, repeating phrases or answers verbatim rather than reasoning through concepts. Studies reveal that around 80% of outputs from widely available LLMs include some level of memorized data, and 15% of text generated by popular conversational models overlaps with snippets from their pretraining datasets.

This issue becomes apparent during adaptability tests. A rigid or overly specific response often signals overfitting. For example, in February 2025, researchers tested ChatGPT and DeepSeek R1 using a modified version of the well-known "Surgeon Riddle." The prompt explicitly stated: "The surgeon, who is the boy's father, says: 'I can't operate on this boy; he's my son!'" and then asked, "Who is the surgeon to the boy?" Despite this phrasing, both models incorrectly answered that the surgeon was the mother. This mistake highlighted their reliance on a memorized version of the riddle, rather than processing the new logical context.

Overfitting also leads to what some call "chunky" behavior, where the model defaults to fixed responses based on superficial cues. A striking example comes from February 2026, when the Tülu3 model repeatedly generated incorrect code snippets in response to formal vocabulary like "elucidate." This happened because the word appeared roughly 2,000 times in the training data, with 85% of those instances stemming from a single coding dataset. The model erroneously associated formal language with coding requests, even when the query had nothing to do with programming.

"When features of the training data correlate with a behavior, the model may learn to condition on those features rather than the intended principle." – Seoirse Murray, Researcher

Overfitting also makes models overly sensitive to minor changes in input. For instance, the Tülu3 model demonstrated this issue when LaTeX formatting was applied to math problems. This small adjustment caused a 50% increase in hallucinated tool use. Similarly, applying stylistic transformations to logical reasoning questions led to a 14% drop in accuracy. These patterns show that the model was reacting to familiar formatting rather than engaging with the actual content of the problem.

2. Insufficient Domain Knowledge

Domain-specific GPTs often falter when tasked with specialized fields, exposing their limited grasp of complex concepts. Unlike overfitting, where models memorize data, this issue arises from their lack of a "world model" - a framework to enforce physical and logical rules. Instead, these models rely on statistical patterns, which can lead to glaring errors, particularly in fields like healthcare.

Take medical applications, for instance. While GPT-4 managed an impressive 86.70% accuracy on standardized medical QA tasks, its performance plummeted when diagnosing rare diseases, with an 83% error rate. Even in general clinical scenarios, the model struggles. About 14% of errors stem from a lack of domain-specific understanding rather than simple memorization mistakes. These errors often sound plausible, even to experts, making them especially risky. For example, in one case from the mARC-QA medical reasoning benchmark, the o1 model falsely claimed that blood pressure could be measured on the forehead using "specialized cuffs" - a medically impossible scenario. On this benchmark, leading models like o1 and Gemini scored only 48–52% accuracy, far below the 66% average for human physicians.

"LLMs such as GPT-4 do not possess an explicit model of medical domain knowledge and do not perform a symbolic human-like reasoning, but instead perform autocompletion by implicitly learning medical domain knowledge from the data." – medRxiv

This issue isn't confined to medicine. In software engineering, GPT-3.5 produced incorrect or partially incorrect answers 52% of the time on Stack Overflow-style programming questions. Accounting exams highlighted similar struggles: GPT-3.5 had a 47% error rate, though GPT-4 reduced this to 15%. Economics proved even tougher, with GPT-3.5 showing a 69% error rate on applied reasoning tasks, which GPT-4 improved to 27%.

The problem goes beyond error rates. These systems often misinterpret domain-specific cues, generating responses that seem accurate but fail basic logic. Researchers have identified "syntactic-domain spurious correlations", where models match sentence structures to domain patterns but miss the substance. For instance, a model might confidently deliver a medical-sounding answer that contradicts fundamental physiological principles. In fact, causal and temporal reasoning failures account for 64–72% of residual medical hallucinations in these models. This highlights their inability to perform the deep, reliable reasoning required for specialized tasks.

3. Factual Errors and Hallucinations

When it comes to domain-specific GPTs, one of the most pressing challenges is their tendency to produce factual errors and hallucinations - essentially, fabricating information that sounds believable but is entirely false.

This issue becomes particularly alarming in specialized fields. In fact, some high-performing models have been found to hallucinate as much as 86% of the generated atomic facts in certain domains. These errors often stem from the way these systems are trained. While they excel at predictable tasks like grammar and spelling, they falter with rare or highly specific information, such as niche historical dates or obscure scientific data. Instead of admitting uncertainty in such cases, models often resort to guessing. For example, during a test in September 2025, a model was asked about researcher Adam Tauman Kalai's PhD dissertation title and birthday. It confidently produced three different answers - all incorrect.

"Language models hallucinate because standard system prompts, training, and evaluation procedures reward guessing over acknowledging uncertainty." – OpenAI

The consequences of these hallucinations can be severe, especially in high-stakes industries. In healthcare, 91.8% of clinicians have reported encountering medical hallucinations when using foundation models, and 84.7% believe these errors could result in harm to patients. Similarly, legal professionals have faced sanctions for citing entirely fabricated cases, while in finance, hallucinated data has led to disastrous decisions. One striking example involved a chatbot inventing a refund policy that didn’t exist. The company, caught off guard, had to honor the made-up policy and pay compensation.

Even the way models are evaluated can exacerbate the problem. Standard evaluation metrics often penalize "I don't know" responses just as harshly as outright wrong answers. This creates a system where guessing confidently is rewarded over admitting uncertainty. However, there are signs of progress. OpenAI's newer gpt-5-thinking-mini model now abstains from answering 52% of the time when uncertain, reducing its error rate to 26%. Compare this to the older o4-mini, which only abstained 1% of the time but had a staggering 75% hallucination rate.

Factual accuracy remains a critical hurdle, particularly in fields where errors can have life-altering consequences.

4. Weak Spatial and Mathematical Reasoning

Beyond issues like overfitting and limited domain knowledge, another pressing concern is the struggle with spatial reasoning and mathematical precision. These limitations are particularly problematic in fields like engineering, architecture, and physics, where exactness is non-negotiable.

A study conducted in February 2025 by researchers at the University of Canterbury highlighted these challenges. They tested ChatGPT-4o and ChatGPT-o1-preview on a structural engineering task involving a 19.7-foot beam subjected to a load of approximately 1,370 lbf/ft. The correct reaction forces were calculated to be about 11,240 lbf at point A and –2,248 lbf at point B. However, ChatGPT-4o produced incorrect values of 2,250 lbf and 6,740 lbf, failing to account for the beam's rotation and bending. Lead researcher Benjamin Hope remarked:

"LLMs continued to exhibit errors in nuanced or open-ended problems, such as misidentifying tension and compression in truss members".

Even when numerical values are accurate, models often misinterpret whether a structural member is under tension or compression. Such errors in real-world applications could result in catastrophic design failures.

The performance data further illustrates these shortcomings. Advanced systems like ChatGPT-5 and Gemini 2.5 Flash achieve only 45–63% accuracy on quantitative reasoning tasks. Of these errors, 35% stem from improper rounding, while 33% arise from basic arithmetic mistakes. For example, GPT-4o's attempts at simple F=ma calculations revealed an average percentage error of 13.73% compared to correct results.

Architecture presents additional challenges. In October 2025, a KAUST research team led by Fedor Rodionov evaluated 15 LLMs using the FloorplanQA benchmark, which included 2,000 structured 2D layouts. The findings were troubling: models often miscalculated free floor space by mishandling overlapping objects, such as doubling the area of partially overlapping rugs. Rodionov pointed out:

"FloorplanQA uncovers a blind spot in today's LLMs: inconsistent reasoning about indoor layouts".

Pathfinding tasks requiring a 6-inch clearance further exposed these weaknesses, with models frequently producing routes that failed to maintain proper spatial separation.

This issue, sometimes referred to as "computational split-brain syndrome," highlights a disconnect: while models can articulate mathematical principles correctly, they often fail to apply them reliably. This underscores the importance of rigorously testing domain-specific GPTs, particularly in fields like engineering and architecture. For critical applications, verifying outputs with external tools or human oversight is not just advisable - it’s essential.

5. Misinterpreting Relationships and Context

GPT models often stumble when it comes to understanding relationships and subtle contextual nuances, especially in areas like psychology and social sciences. While earlier sections touched on overfitting and gaps in domain knowledge, this part focuses on how these models struggle to grasp relationships and maintain contextual accuracy. Instead of truly understanding semantics, GPTs depend on statistical patterns, which leads to frequent misinterpretations of meanings that humans intuitively understand.

Take "The Reversal Curse" as an example. This phenomenon describes how models trained on statements like "A is B" often fail to infer the reverse, "B is A". Such bidirectional reasoning is crucial in fields where mutual relationships matter. Another notable flaw arises when GPTs attempt to mimic human psychological behaviors. Instead of aligning with real-world human trends, they may generate responses that contradict actual patterns.

The numbers paint a stark picture. State-of-the-art models correctly interpret user-specific context only 18% of the time. Worse, in conversations stretching beyond 50 turns, these models lose 39% of their contextual accuracy. This drop-off shows that the longer the interaction, the more likely the model is to forget earlier details or constraints, leading to errors that snowball over time. These cascading mistakes can derail conversations, making the model’s responses increasingly unreliable.

Researchers Ahmed M. Hussain and his team have highlighted a related issue they call "contextual blindness." This refers to the model's inability to perceive hidden meanings or situational nuances. For instance, if a user combines an emotionally charged statement with a factual query - like asking about the deepest subway station while expressing feelings of hopelessness - the model might respond with factual information while completely missing the emotional undertone and its implications for self-harm.

"GPTs fundamentally lack the contextual reasoning abilities that characterize human understanding" – Ahmed M. Hussain.

Another problem is what researcher Muru Zhang terms "hallucination snowballing". When a model makes an early contextual mistake, it often compounds the error in subsequent responses to maintain conversational consistency. While ChatGPT and GPT-4 can identify 67% and 87% of their own mistakes, respectively, these self-corrections don’t always prevent the cascade of errors. This is especially concerning in fields like clinical psychology, where misunderstanding emotional cues or patient relationships could lead to serious, real-world consequences. To mitigate these risks, developers can use a custom GPT toolkit to build more robust, specialized versions of ChatGPT.

6. Unsupported Claims and Vague Statements

Domain-specific GPTs have a troubling tendency to make unsupported claims, often fabricating citations and inventing sources to create an illusion of credibility. This happens because these models don't actually "know" facts - they rely on predicting the next likely word based on patterns in their training data.

"LLMs don't actually 'know' facts. Instead, they predict the next word based on patterns learned from massive text data. If the training data is sparse or inconsistent, the model may 'fill in the gaps' with something plausible but untrue" – Evidently AI team.

A study found that ChatGPT's references were valid only 14% of the time, and even then, they rarely supported the claims they were tied to. In medical contexts, advanced models like GPT-5 and Gemini-2.5-Pro corrected false assumptions in fewer than 43% of cases. These issues align with earlier findings about hallucinations in AI-generated domain-specific content. Such errors aren't just academic - they carry real risks when applied in practical settings.

The consequences of these inaccuracies are already evident. In one case, Deloitte Australia submitted a report to the Australian government - part of a $300,000 contract - that included fabricated citations and "phantom footnotes." After a University of Sydney academic flagged the errors, Deloitte admitted to using generative AI to fill in gaps and issued a partial refund. Similarly, in the U.S., a lawyer used ChatGPT to draft a court filing that cited entirely fictional legal cases. The opposing counsel's inability to locate these cases led a federal judge to issue a standing order requiring lawyers to verify the accuracy of AI-generated content.

Even major corporations have faced fallout from AI errors. In February 2023, during a promotional video for Bard, the AI falsely claimed that the James Webb Space Telescope had captured the first images of a planet outside our solar system. The mistake contributed to a staggering $100 billion drop in Alphabet's market value. In another instance, Air Canada's AI-powered support chatbot invented a nonexistent bereavement fare policy. When the airline argued that the chatbot was a "separate legal entity", a tribunal rejected the claim and ordered compensation for the misled passenger.

One particularly troubling phenomenon is what researchers call "hallucination snowballing."

"An LM over-commits to early mistakes, leading to more mistakes that it otherwise would not make" – Muru Zhang and colleagues.

This means that when a model makes an unsupported claim, it often compounds the error by generating additional false justifications, creating a cascade of misinformation.

7. Poor Handling of Ambiguous Queries

Building on earlier challenges with misinterpretation and unsupported claims, GPTs also stumble when faced with ambiguous queries. If a user asks an unclear question, domain-specific GPTs often treat it as a probability puzzle - picking the most likely continuation without seeking clarification. This approach can lead to overconfident but incorrect answers.

"When confronted with ambiguous queries, LLM systems simply sample from one plausible continuation rather than pausing to question the premise." – Single Grain

Ambiguity comes in various forms. Lexical ambiguity appears when a word has multiple meanings. For instance, "How do I charge Apple?" could mean billing the company, charging a device, or even suing them. Referential ambiguity arises with unclear pronouns, like in "It stopped working again", leaving the model to guess which device, feature, or issue is being discussed. Then there’s temporal ambiguity, seen in questions like "What was revenue last quarter?" - where the answer depends on whether the user means fiscal or calendar quarters.

Studies show that 23% of ambiguous questions stem from unclear entity references, while the rest often involve missing details like timing or desired answer type. While models can correctly infer these unspecified details about 41.1% of the time, this ability is inconsistent and varies across versions. Worse, vague prompts are twice as likely to see accuracy drops of over 20% when models are updated. These measurable flaws highlight the difficulty of handling ambiguous inputs.

The consequences are apparent in real-world scenarios. In customer service, a vague query like "Book me a hotel near the conference" forces the model to guess critical details - such as the city, dates, budget, and what "near" actually means. In technical support, requests like "My code doesn't work" lack essential context, such as error logs, programming language, or specific goals. The model might even default to common examples from its training data, such as providing Okta-specific instructions for "SSO setup", even if the user’s organization uses Azure AD.

To address these issues, researchers suggest targeted strategies. Adding a reasoning step to list possible interpretations can improve accuracy by about 11.75%. Developers could also implement a "Detect–Clarify–Resolve–Learn" process, which evaluates input clarity and prompts follow-up questions when ambiguity is detected. For critical fields like medicine or law, models should be designed to reject vague requests outright rather than guessing and risking misinformation.

8. Inconsistent Outputs

Asking the same question twice to domain-specific GPTs can often yield different responses. This lack of consistency is a significant challenge in workflows that require precision, such as coding or financial forecasting. These inconsistencies highlight an underlying instability in how these models process information, adding to the list of errors found in domain-specific GPTs.

The primary cause of this issue is often tied to numerical nondeterminism. Even small floating-point rounding differences can lead to entirely different reasoning paths. For instance, in code generation benchmarks, researchers discovered that 75.76% of CodeContests tasks and 51% of APPS tasks failed to produce identical outputs across multiple identical prompts. One specific model, DeepSeek-R1-Distill-Qwen-7B, demonstrated up to a 9% accuracy variation and a 9,000-token difference in response length due to changes in GPU count and batch size.

"The reproducibility of LLM performance is fragile: changing system configuration, such as evaluation batch size, GPU count, and GPU version, can introduce significant differences in the generated responses." – Jiayi Yuan et al., arXiv:2506.09501

This inconsistency is particularly problematic in financial workflows. For example, smaller models with 7–8 billion parameters can achieve near-perfect consistency (100%) at a temperature setting of 0.0 for regulated tasks. In contrast, larger models with 120B+ parameters may only reach 12.5% consistency under the same conditions. Such variations can lead to discrepancies in financial reports - like showing $1.05M in one output and $1.00M in another - potentially triggering costly audits. Even setting the temperature to zero, which is supposed to produce deterministic outputs, often fails to ensure stability in more complex tasks. Using a structured mega-prompt template can help standardize instructions to minimize these variances.

The inconsistency extends beyond numerical tasks. For example, GPT-4's accuracy in identifying prime numbers fell from 84% in March 2023 to 51% in June 2023, while both GPT-4 and GPT-3.5 increasingly fail to format code correctly, such as omitting triple backticks.

To address these issues, developers can take steps like running multiple iterations (e.g., aggregating results from 3–5 runs), implementing multi-key ordering in retrieval systems, and using ±5% materiality thresholds to filter out insignificant variations. These strategies can help mitigate some of the unpredictability, though they don't fully eliminate the problem.

9. Amplified Bias from Training Data

When domain-specific GPT models are trained, they often magnify the biases present in their training data. This intensification can reinforce societal prejudices, particularly in sensitive areas like hiring and content moderation, where fairness is critical.

The hiring process offers a stark example of this issue. In February 2025, researchers Alexander Puutio and Patrick K. Lin analyzed OpenAI's ChatGPT (version 4o) as a resume screening tool. Across 2,000 test cases, they observed that the model selected the first resume presented 86.67% to 100% of the time - even when all candidates were equally qualified. Prestige played a significant role too. Candidates from high-cost universities like Harvard or MIT saw their selection rates jump from 10% to 26.35%, while those from low-cost universities faced a dramatic drop to just 1.46%. This "prestige bias" unfairly disadvantages individuals from lower-income backgrounds, even when their qualifications match those of their peers.

"Without due care, hiring processes driven solely by ChatGPT are unlikely to provide optimal selection results." – Alexander Puutio and Patrick K. Lin, Researchers

Biases extend beyond education and prestige. Demographic markers also significantly influence GPT outputs. For instance, in January 2024, Kate Glazko and her team at the University of Washington found that GPT-4 consistently ranked resumes lower when they included disability-related indicators, such as awards from disability organizations. Similarly, a May 2024 audit of GPT-3.5 revealed troubling patterns: when tasked with generating resumes for fictional candidates, the model assigned women to less experienced roles and added "immigrant markers" - like non-native English proficiency or foreign education - specifically for names associated with Asian and Hispanic backgrounds.

Attempts to mitigate these biases through debiasing prompts have proven ineffective. For example, when researchers asked ChatGPT to avoid selecting the first candidate, the bias merely shifted, with the model favoring the seventh candidate 31.7% of the time while ignoring others in positions five, six, eight, nine, and ten. Even with adjusted prompts, biases persisted. Gender bias showed a correlation of rho ≥ 0.94, age bias rho ≥ 0.98, and religious bias rho ≥ 0.69. This resilience of bias is alarming, especially given that 70% of companies and 99% of Fortune 500 companies currently rely on AI-driven hiring tools, potentially perpetuating systemic discrimination on a large scale.

10. Memory Limits in Long Conversations

Memory issues, much like the earlier contextual failures, pose a challenge for domain-specific GPTs. These models often struggle to maintain consistency in extended conversations, even when staying within their advertised token limits. For instance, GPT-4 boasts a 128,000-token context, and Gemini 1.5 Pro claims an impressive 2 million tokens. However, their Maximum Effective Context Window (MECW) can fall far short of these numbers, with noticeable accuracy drops occurring after just 1,000 tokens. As conversations grow longer, these issues become even more pronounced.

One key factor is the "lost-in-the-middle" phenomenon. This refers to how models retain information from the beginning and end of a conversation better than details from the middle. In fields like legal drafting, such memory gaps can lead to serious errors. For example, a developer using a GPT-4o–based legal assistant reported that the model struggled to process a 20,000-token document of relevant laws. It frequently overlooked crucial details in later clauses, leading to incorrect legal advice. Similarly, Claude 3.5 Sonnet's performance on a code understanding task plummeted from 29% accuracy at 10,000 tokens to just 3% at 1 million tokens.

"Context length in marketing specs is not the same as context length in reliable reasoning. Treat the upper bound as a capacity ceiling, not a guarantee." – Zaina Haider

Another compounding issue is "context poisoning." If a model generates a hallucination early in a conversation, that false information can remain embedded in the dialogue, distorting the rest of the interaction. For instance, a Gemini agent playing Pokémon made up false game states and became fixated on impossible goals for extended periods. Similarly, researchers from Microsoft and Salesforce noted that when models take a wrong turn in a conversation, they often fail to recover.

These memory constraints also have financial implications. For businesses using AI handling 100 daily calls of 50,000 tokens each on GPT-4 Turbo, memory inefficiencies can lead to monthly costs of about $1,500. A practical workaround is Retrieval-Augmented Generation (RAG). This approach uses external vector databases to pull only the most relevant pieces of information, reducing the need to load entire documents into the model's context window.

Conclusion

Domain-specific GPTs shine in handling niche tasks, but they aren't without flaws. Ten common issues - ranging from overfitting to memory limitations - show that achieving reliability requires careful engineering. Problems like overfitting, ambiguous queries, and inconsistent outputs can often be addressed with clearer, more effective prompts. In fact, prompts act as the "code" for these models, steering their behavior. However, since prompts are written in natural language, they can be easily misinterpreted. Even minor flaws in a prompt can lead to cascading issues, resulting in unreliable, insecure, or inefficient outcomes - especially in high-stakes or regulated environments. This makes effective prompt engineering an essential skill.

The good news? These challenges can be tackled. Studies reveal that improving prompt clarity reduces irrelevant outputs by 42%. Even more compelling, fixing multiple prompt issues - like vagueness or lack of context - can amplify output quality by nearly 5.85x. Clearer prompts not only enhance results but also streamline operations, with caching frequently used prompts cutting latency by up to 85% and costs by 90%. Transitioning from a trial-and-error approach to a systematic process transforms prompt engineering into a reliable and repeatable practice, ensuring dependable outcomes by design.

"Prompt quality is not merely a matter of convenience or elegance; it is directly tied to software correctness, security, and ethics in LLM applications." - Haoye Tian et al., Nanyang Technological University

Tools like God of Prompt simplify this process by offering a library of over 30,000 rigorously tested AI prompts and toolkits. These resources include specialized templates for models like ChatGPT, Claude, and Gemini, incorporating role-setting, few-shot examples, explicit constraints, and structured output formats. By addressing common pitfalls - such as vagueness, lack of context, and formatting issues - God of Prompt empowers users to consistently activate domain-specific knowledge and maintain reliable performance across diverse applications.

FAQs

How can I tell if my domain GPT is overfitting?

Overfitting in your domain GPT can show up in a few clear ways. If it starts memorizing specific responses instead of understanding broader concepts, that's a red flag. You might also notice it struggling with questions that fall outside the scope of its training data. Another telltale sign? It may fail to generalize well to new inputs, sticking to overly rigid answers or showing difficulty when faced with unfamiliar scenarios. These patterns can make it less effective in handling diverse or unexpected queries.

What’s the fastest way to reduce hallucinations in a specialized GPT?

To cut down on hallucinations in a specialized GPT, consider using retrieval-augmented generation (RAG). This method grounds the model's responses in reliable, high-quality data. You can also implement strict prompt guidelines, such as instructing the model to respond with "I don't know" when it's uncertain. Adding measures like human-in-the-loop reviews and requiring citations for claims further ensures accuracy and dependability. These strategies work together to reduce errors and enhance the model's reliability.

When should I use RAG instead of a long context window?

When cost is a concern, the model's context size is limited, or there's a need to pull in external information efficiently, RAG (Retrieval-Augmented Generation) can be a smart choice. It shines in situations where the necessary data goes beyond the model’s built-in context size or when tapping into external sources is essential to deliver accurate and relevant responses.