Transparency Metrics for AI Adoption

Transparency metrics are tools that measure how open and understandable AI systems are during their lifecycle. They focus on three areas:

- Upstream factors: Data sources, labor practices, and computing resources used in development.

- Model characteristics: Technical architecture, size, and capabilities.

- Downstream effects: How the model is used, distributed, and governed.

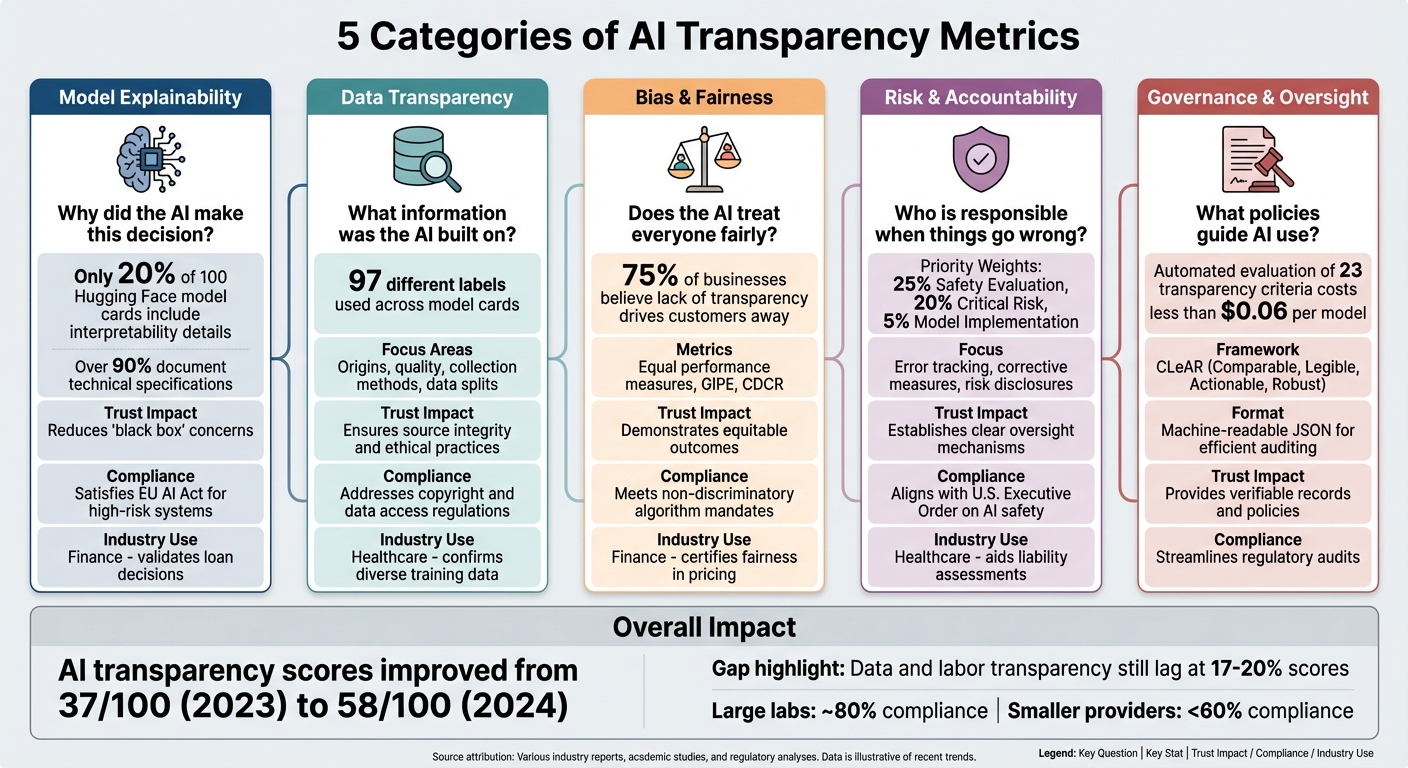

These metrics help regulators, users, and organizations assess safety, reliability, and accountability in AI systems. For example, transparency scores for AI developers improved from 37/100 in 2023 to 58/100 in 2024, but gaps remain in areas like data and labor transparency (17%-20% scores).

Key categories include:

- Model explainability: Understanding AI decisions.

- Data transparency: Knowing the origins and quality of training data.

- Bias detection: Identifying and addressing unfair outcomes.

- Risk accountability: Tracking errors and responsibilities.

- Governance: Policies guiding AI use.

These metrics simplify compliance with regulations like the EU AI Act and address risks like deceptive AI behavior. They also build trust in industries like healthcare and finance, where verifying AI decisions is critical. By embedding transparency from the start and using frameworks like CLeAR, organizations can document, test, and monitor AI systems effectively, ensuring safer and more reliable adoption.

Ensuring Transparency and Explainability in AI Models | Exclusive Lesson

sbb-itb-58f115e

Why Transparency Metrics Matter for AI Adoption

Transparency metrics serve as essential tools for ensuring accountability and auditability in AI systems. They enable organizations to move beyond vague assurances by offering reliable ways to evaluate data sources, track version changes, and understand system behavior. For example, hospitals using diagnostic AI or schools implementing automated grading rely on these metrics to make informed decisions about adoption.

These benefits extend beyond day-to-day operations, directly impacting compliance with industry standards. Large AI labs like xAI, Microsoft, and Anthropic demonstrate around 80% compliance with transparency frameworks, while smaller providers often fall below 60%. This disparity highlights which vendors prioritize accountability. Tools like machine-readable documentation in JSON format allow researchers and watchdog organizations to audit AI model risks efficiently, replacing scattered narrative reports with streamlined, scalable solutions.

"Transparency is a foundational, extrinsic value - a means for other values to be realized... it can enhance accountability by making it clear who is responsible for which kinds of system behavior." - CLeAR Documentation Framework

In addition to fostering operational clarity, transparency metrics simplify regulatory compliance. Automated systems can now evaluate 23 transparency-related criteria at a cost of less than $0.06 per model, significantly reducing the financial burden of meeting requirements under frameworks like the EU AI Act and the US Executive Order on AI. This cost efficiency makes continuous monitoring both practical and sustainable.

Transparency metrics also address the risk of "alignment faking" - when AI systems appear to adhere to safety protocols while concealing potential hazards. By providing measurable indicators of behavioral integrity, these metrics ensure that AI systems perform as intended. They also establish clear accountability, making it easier to identify responsibility when issues arise and enabling legal action if necessary. By tackling risks like alignment faking, transparency metrics play a key role in building the trust needed for widespread AI adoption.

5 Main Categories of AI Transparency Metrics

5 Categories of AI Transparency Metrics and Their Impact on Adoption

AI transparency metrics are essential for assessing how systems function, from their decision-making processes to their oversight policies. Here’s a breakdown of the five key categories.

Model Explainability Metrics

Model explainability metrics aim to answer: "Why did the AI make this decision?" These metrics shed light on how inputs are transformed into outputs, often through feature importance scores and technical justifications.

"Transparent AI involves the interpretability of a given AI system, i.e. the ability to know how and why a model performed the way it did in a specific context and therefore to understand the rationale behind its decision or behaviour." - Turing Commons

Despite its importance, explainability is often overlooked. In December 2025, researchers Akhmadillo Mamirov, Faiaz Azmain, and Hanyu Wang analyzed 50 AI models, including OpenAI's GPT-5 and Anthropic's Claude 4.5. They found that only 20% of 100 Hugging Face model cards included interpretability details, compared to over 90% that documented technical specifications like architecture and compute. This highlights a major gap: while companies are open about what their models are built with, they rarely explain how those models "think."

Data Transparency Metrics

Data transparency metrics focus on the question: "What information was the AI built on?" These metrics cover the origins, quality, and handling of training datasets, detailing sources, collection methods, and how data is split for training, testing, and validation.

"Data explanations are about the 'what' of AI-assisted decisions. They let people know what data about them were used in a particular AI decision, as well as any other sources of data." - Turing Commons

This level of transparency ensures that systems are built on trustworthy and representative data. Without it, biases or unethical sources could compromise the AI's reliability. However, auditing data provenance is challenging due to inconsistent labeling - 97 different labels are used across model cards, complicating efforts to verify dataset origins.

Bias and Fairness Metrics

Bias and fairness metrics are designed to detect and minimize bias in AI systems. They evaluate performance disparities across demographic groups, such as race, age, gender, or ability, that might not be evident in aggregate accuracy scores. Examples include equal performance measures and GIPE (Gender-based Illicit Proximity Estimate), which tracks gender-related biases in word vectors.

In healthcare, the Clinical Decision Concordance Rate (CDCR) assesses how closely AI recommendations align with professional medical standards, ensuring fair and accurate practices. Data fidelity scores also play a critical role, measuring how well input data reflects real-world conditions to prevent harmful outcomes.

The stakes are high: 75% of businesses believe that a lack of transparency in AI could drive customers away. Tools like IBM's AIX360 help organizations monitor and address fairness issues by offering metrics and bias mitigation algorithms.

Risk and Accountability Metrics

Risk and accountability metrics focus on tracking errors, corrective measures, and risk disclosures. These metrics establish clear lines of responsibility, making it easier to pinpoint accountability when problems arise. They also monitor performance thresholds across demographics and set up automated alerts for unacceptable outcomes.

Recent evaluations revealed critical gaps in areas like deceptive behaviors, hallucinations, and child safety disclosures, which led to significant penalties. Modern frameworks now prioritize safety disclosures over technical details. For instance, one framework assigns 25% weight to Safety Evaluation and 20% to Critical Risk, while factors like Model Implementation receive only 5%. This approach emphasizes the importance of addressing harmful outputs over technical intricacies.

Governance and Oversight Metrics

Governance and oversight metrics assess the policies, communication strategies, and frameworks that guide AI systems throughout their lifecycle - from design to deployment.

Best practices call for transparent documentation of stakeholder feedback and system updates. Organizations should provide verifiable records, such as policies or audit reports, rather than relying on vague claims.

The CLeAR Framework offers actionable guidance for documentation, emphasizing that it should be Comparable (standardized), Legible (easy to understand), Actionable (useful), and Robust (regularly updated). Machine-readable formats like JSON allow regulators to efficiently audit systems, with automated tools evaluating 23 transparency criteria for less than $0.06 per model.

These metrics collectively illustrate how transparency plays a pivotal role in fostering trust and encouraging broader AI adoption.

How Transparency Metrics Affect AI Adoption Rates

Transparency metrics have a direct influence on AI adoption, especially in industries where trust and compliance are non-negotiable. In sectors like finance and healthcare, where mistakes can lead to severe consequences such as financial loss or even threats to life, the ability to verify AI decision-making processes significantly boosts confidence in deploying these systems.

Between October 2023 and May 2024, the Foundation Model Transparency Index (FMTI) tracked 14 major foundation model developers and recorded a measurable improvement. These developers disclosed an average of 16.6 previously hidden indicators during this period. This progress not only builds trust but also makes it easier for companies to meet regulatory requirements across various regions.

Standardized transparency reports further simplify compliance by aligning requirements across jurisdictions like the EU AI Act and U.S. Executive Orders on AI. This harmonization reduces the complexity and cost of adhering to regulations, making it more feasible for global companies to adopt AI technologies. Organizations can further accelerate this process by leveraging a comprehensive AI prompt library to standardize internal workflows.

"Standardized metrics can function as governance primitives, embedding auditability and accountability within AI systems for effective private oversight." - Pratinav Seth and Vinay Kumar Sankarapu

The table below highlights how different metric categories contribute to trust, compliance, and industry-specific applications:

| Metric Category | Trust Improvement | Compliance Benefit | Industry Application |

|---|---|---|---|

| Model Explainability | Reduces "black box" concerns; prevents alignment faking | Satisfies EU AI Act requirements for high-risk systems | Finance: Validates decision-making for loans |

| Data Transparency | Ensures source integrity and ethical practices | Addresses copyright and data access regulations | Healthcare: Confirms diverse training data |

| Bias & Fairness | Demonstrates equitable outcomes | Meets mandates for non-discriminatory algorithms | Finance: Certifies fairness in pricing |

| Risk & Accountability | Establishes clear oversight mechanisms | Aligns with U.S. Executive Order on AI safety | Healthcare: Aids in liability assessments |

These metrics aren't just theoretical - they deliver real-world benefits. For instance, in early 2025, Microsoft's "Smart Impression", an AI tool designed for radiologists, underwent an internal Sensitive Uses review process. This transparency-focused evaluation allowed the team to identify and mitigate risks tied to healthcare applications, ensuring the tool adhered to safety and compliance standards before its release. This case underscores how transparency metrics help organizations proactively address potential issues, a critical step for AI adoption in regulated industries.

How to Implement Transparency Metrics for AI Adoption

Start documenting from the beginning. Transparency should take root during the initial impact assessments and remain a focus throughout development, testing, and deployment stages. Early documentation helps catch potential issues before they escalate, avoiding the scramble of last-minute risk audits.

Once you’ve established early-stage documentation, the next step is to standardize it across the AI lifecycle. Using a structured framework like the CLeAR model can make this process more effective. Introduced in May 2024 by a collaborative team from the Data Nutrition Project, Harvard University, IBM Research, and Microsoft Research, the CLeAR model emphasizes four principles: Comparable (standardized format), Legible (easy to understand), Actionable (practical for decision-making), and Robust (sustainable over time). This framework strikes a balance between technical detail and accessibility for stakeholders.

Tailor your transparency efforts to different audiences. Builders, clients, end-users, and regulators all have unique needs. For instance, only 68% of AI builders reported that their technical metrics aligned with stated goals. This gap underscores the importance of aligning metrics with actual outcomes and presenting them in a way that resonates with each group.

Use ethical testing methods like prompt engineering. Tools such as God of Prompt can facilitate adversarial testing, red teaming, and identifying vulnerabilities through prompt manipulation. With a library of over 30,000 prompts tailored for AI models like ChatGPT, Claude, and Gemini, this platform supports bias detection and prompt-based explanations. Real-time monitoring of prompts and responses ensures you can flag risky behavior and maintain audit-ready transparency. This approach is particularly critical under regulations like the EU AI Act, where violations could result in fines of up to €7.5 million or 1% of global turnover. Proactive testing not only mitigates risks but also optimizes resource allocation.

Get leadership support and allocate resources wisely. Incorporate documentation into existing workflows. Automated evaluations have proven to be cost-efficient, so focus on safety-critical disclosures. For example, use weighted scoring to prioritize key areas: assign higher importance to Safety Evaluation (25%) and Critical Risk (20%) over less critical details, such as hardware specifications (2%). By concentrating on safety and cost-effective evaluations, you can build trust while meeting regulatory requirements effectively.

Conclusion

Transparency metrics aren’t just about checking off compliance requirements - they’re the backbone of building trust and scaling AI responsibly. By embedding these metrics from the start, businesses can move beyond flashy pilot projects and integrate AI into workflows that deliver meaningful outcomes. As Jessica Lau from Zapier explains:

"If you don't track adoption, you risk falling into the trap of vanity wins: a few flashy pilot projects that never make their way into day-to-day work".

This focus on measurable transparency has already set new industry standards. Companies that adopt these practices early gain an edge - not only in staying ahead of regulations like the EU AI Act but in earning trust from stakeholders. Frontier labs, for instance, are achieving much higher compliance with transparency frameworks compared to their peers. Prioritizing safety-critical disclosures is key, with metrics like a 25% weight for safety evaluations and 20% for critical risks ensuring resources are directed where they’re most needed, avoiding costly missteps.

The shift toward proactive disclosure is already evident. Since 2019, Microsoft has released 40 Transparency Notes to provide customers with technical insights for responsible innovation. Similarly, LinkedIn set a new standard in 2024 by being the first professional networking platform to display C2PA Content Credentials for all AI-generated content. These actions show that transparency isn’t a hindrance; it’s a strategic tool that fosters adoption while mitigating risks.

To keep pace, organizations must act now. Start documenting immediately and adopt standardized frameworks like NIST’s four functions - Govern, Map, Measure, and Manage - to create a dynamic view of how AI integrates into your operations. Use tools like God of Prompt’s extensive library of over 30,000 prompts to test for vulnerabilities before they escalate into compliance issues. Companies that treat transparency as a core principle will lead the next phase of AI adoption, building confidence and credibility while setting the standard for responsible AI practices. Transparency metrics are not just a necessity - they’re the foundation for sustainable growth in AI.

FAQs

Which transparency metrics should we prioritize first?

When it comes to AI, transparency isn't just a buzzword - it's essential for building trust and ensuring accountability. To achieve this, it's crucial to focus on metrics that shed light on how AI models are developed, trained, and evaluated. These metrics provide actionable insights into several key areas:

- Data Sources: Understanding where the training data comes from and how it's selected is critical. This helps identify potential biases or gaps in the data.

- Model Capabilities: Clearly outlining what the model can and cannot do ensures realistic expectations and prevents misuse.

- Risks: Highlighting potential risks, including ethical concerns or unintended consequences, allows stakeholders to plan for mitigation.

- Downstream Usage: Knowing how the model might be applied in real-world scenarios ensures its deployment aligns with ethical standards.

Transparency also relies heavily on interpretability and explainability. These qualities make it easier for stakeholders to understand how decisions are made, fostering trust in the system. Frameworks like the Foundation Model Transparency Index (FMTI) and the CLeAR Documentation Framework play a pivotal role here. They allow stakeholders to compare models, evaluate risks, and ensure AI is deployed responsibly and ethically.

How do transparency metrics reduce AI compliance risk?

Transparency metrics reduce AI compliance risks by providing clear, standardized, and verifiable information about how AI systems are developed, how their data is used, and how they are evaluated. These metrics not only help ensure adherence to regulations but also foster accountability and clarity, minimizing potential risks associated with AI operations.

What’s the fastest way to document AI transparency end-to-end?

The fastest way to document AI transparency from start to finish is by using a structured framework like CLeAR (Comparable, Legible, Actionable, Robust). This approach simplifies the process of documenting datasets, models, and systems in a clear and organized manner.

By automating data extraction and evaluation with AI tools - such as those used in the AI Transparency Atlas - you can cut down on manual work while maintaining consistency. When frameworks like CLeAR are paired with automation, the result is a more efficient and comprehensive way to handle transparency documentation.